We created a workflow that generates hundreds of videos with Stable Video Diffusion in one command, and we show example results in the video and grid below. Click around to get a sense of the kinds of videos you can generate, and keep reading to learn how you can integrate generative video, powered by open-source models, into your own workflows and products using Metaflow!

Text-to-music-video: AI cowboy (lost in the code)

To demonstrate the power of emerging AI tools, we experimented with a combination of ChatGPT, Suno AI, and Stable Video Diffusion to generate a music video only from text prompts.

GenAI video dispenser: an interactive grid of generated videos, browsable by subject, action, location, and style.

We at Outerbounds provide tools that allow you to build differentiated, unique ML/AI-powered products and services based on open-source models. This time, we’ll show how to build production-grade video generation applications such as the one above, using Metaflow and other open source software.

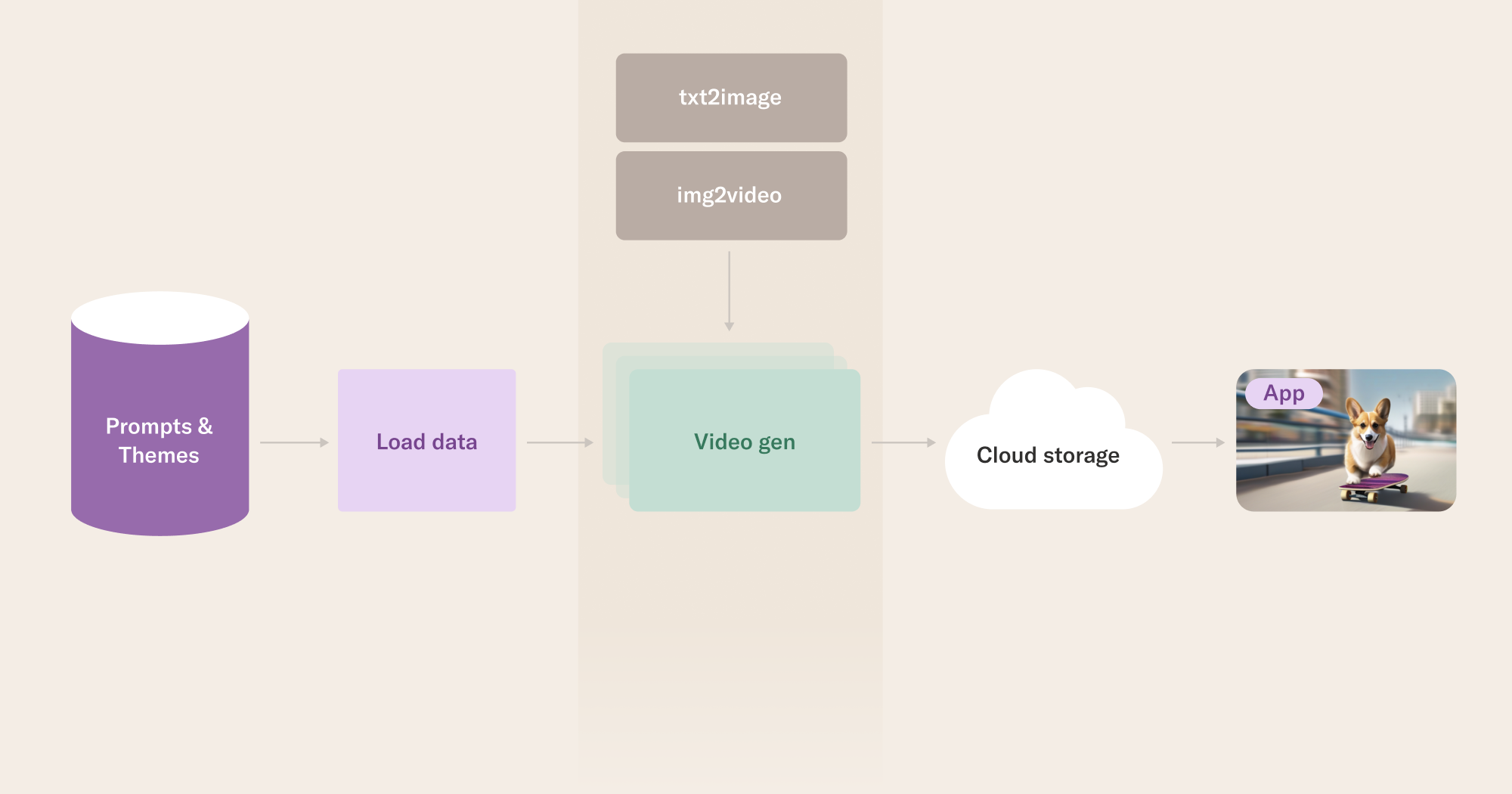

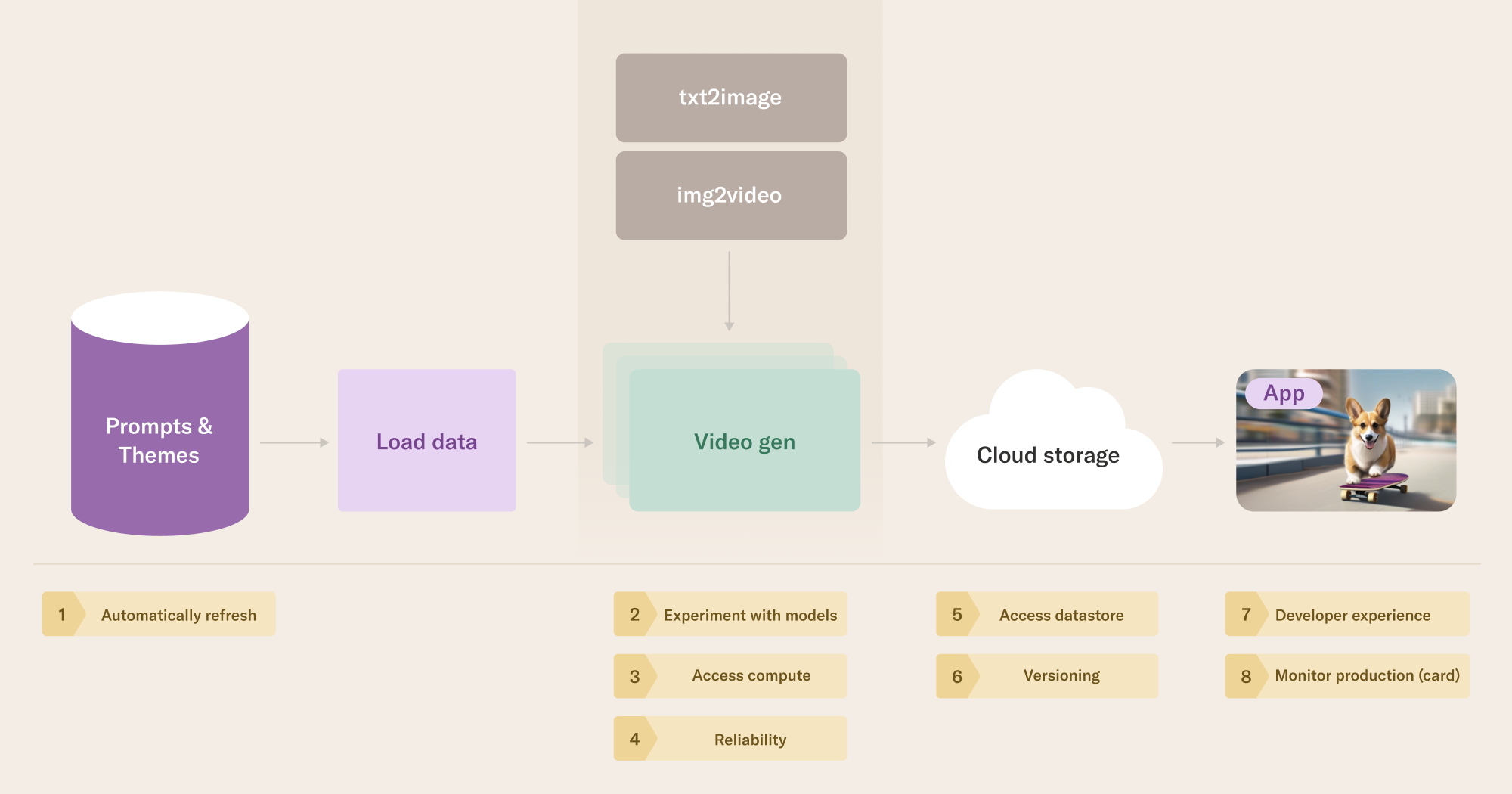

While it is surprisingly easy to create quick demos and POCs of this kind, it is equally easy to underestimate the number and the quality of the components that are required to make the application production-ready. Even a simple system consists of a number of moving parts, from themes and prompts stored in databases, to text-to-image models, image-to-video models, cloud storage, and application UI layers:

Most data scientists and ML/AI engineers are aware of the need for (often a non-trivial amount of) compute these days, but much more goes into building a maintainable production system:

- new data needs to automatically refresh models;

- workflows need to be orchestrated;

- data, code, models, and output need to be versioned and accessible;

- models need to be monitored;

- developers need to rapidly explore and experiment with new models and data; and

- this all needs to be an ergonomic user experience.

In this post, we'll introduce the essential open source tools required to develop applications possessing these characteristics.

The sprawling world of foundation models

In August 2022, the GenAI revolution really started to heat up with Stability AI’s public release of Stable Diffusion (SD), which allowed any developer with an internet connection and a laptop (or cell phone!) to use Python to generate images by providing text prompts, for example, in a Colab notebook. A month later, we showed how to take such foundation models beyond proof of concept and embed them in larger production-grade applications.

For more information about techniques related to Generative AI, you can take a look at the many articles we have written about large language models, most of which apply to multimodal cases too. You can read about the role of foundation models in the ML infrastructure stack, how to fine-tune custom models, build reactive systems around them, and how to leverage modeling frameworks effectively. If you want to try the techniques hands-on, check out our LLMs, RAG, and Fine-Tuning: A Hands-On Guided Tour or a code-along example with Whisper, a speech-to-text model.

This time, we focus on a text-to-video use case, motivated by Stable Video Diffusion (SVD), which Stability AI released as open source two weeks ago. It is exciting to be able to start building innovative, custom applications around generative video, inspired by highly credible videos created with tools such as Runway, Pika Labs, and Synthesia.

Stable video diffusion: Stability AI’s first open generative AI video model

There are several ways you can start playing with SVD immediately, such as

- Using Google Colab to leverage cloud-based GPUs for free with notebooks such as this;

- Using a service such as Replicate to use a GUI to go from image-to-video;

- If you have GPUs on your laptop, you can use your local system to follow the instructions in this GitHub repository README.

These methods are all wonderful ways to get up and running and they allow you to generate videos, but they are relatively slow: with Colab, for example, you’re rate-limited by Google’s free GPU provisioning and video generation takes around 10 minutes. These options are fantastic for exploration and experimentation, but what if you want to embed your SVD models in enterprise applications? Or put them in a pipeline with SD to move directly from text to video? And if you want to scale out to more GPUs, how do you do that easily?

Just as when working with SD models, as you expand to produce significantly more videos, possibly by several orders of magnitude, it becomes crucial to effectively manage versions of your models, runs, and videos. This is not to diminish the value of your local workstation or Colab notebooks; they serve their intended purposes admirably.

However, a key question arises: What strategies can we employ to greatly enhance our SVD video production scale, systematically organize our model and image versions, and develop a machine learning process that integrates seamlessly into broader production applications? Enter open source Metaflow, a solution for these challenges that encourages us to build systems instead of isolated scripts by structuring code in Directed Acyclic Graphs (DAGs).

| Scenario | Workflow | Feasibility | Throughput | Compute cost |
|---|---|---|---|---|
| One-off: make a few dozen video clips | Notebook-as-a-service | medium | low | $10s |
| One-off: make a few dozen video clips | DAG workflow | high | medium | $10s |
| Produce a batch of 2000 video clips daily | Notebook-as-a-service | low/impossible | N/A | N/A |
| Produce a batch of 2000 video clips daily | DAG workflow | high | high | $1000s |
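To get a rough feel for why the daily-batch scenario calls for a parallel workflow, here is a back-of-the-envelope estimate. It assumes each clip takes about 10 minutes on a single GPU, which is the Colab figure quoted above and not a measurement from any particular cluster; the function name is our own.

```python
import math


def gpus_needed(clips_per_day: int, minutes_per_clip: float, hours_available: float = 24.0) -> int:
    """Smallest number of GPUs that finishes the batch within the time window."""
    gpu_hours = clips_per_day * minutes_per_clip / 60.0
    return math.ceil(gpu_hours / hours_available)


# 2000 clips at an assumed 10 minutes each is roughly 333 GPU-hours of work.
print(gpus_needed(2000, 10))     # 14 GPUs to finish within 24 hours
print(gpus_needed(2000, 10, 4))  # 84 GPUs to finish within 4 hours
```

Under these assumptions a single notebook GPU cannot keep up, which is why the table marks that combination as low/impossible.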

Using Metaflow to parallelize video generation

As an example, we’ll show how you can parallelize video creation over subjects, actions, locations, and styles, as in the application above. Each combination can be generated independently of the others, which makes the problem embarrassingly parallel. Metaflow helps us solve these challenges by providing an API for:

- Defining workflows easily in pure Python,

- Large-scale parallel processing with foreach,

- Tracking and versioning all code, data, and models,

- Production orchestration,

- Integrating workflows with their surroundings via events, and

- Monitoring with custom reports.
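To make the fan-out concrete, the sketch below enumerates every combination of subject, action, location, and style as a text prompt. The theme values and the prompt template are illustrative assumptions; in the actual flow they would come from the configuration file passed on the command line.

```python
from itertools import product

# Illustrative values only; the real themes live in the flow's config file.
subjects = ["cowboy", "robot"]
actions = ["riding a horse", "playing guitar"]
locations = ["in the desert", "on the moon"]
styles = ["watercolor", "film noir"]


def build_prompts(subjects, actions, locations, styles):
    """Return one prompt per (subject, action, location, style) combination."""
    return [
        f"{subject} {action} {location}, {style} style"
        for subject, action, location, style in product(subjects, actions, locations, styles)
    ]


prompts = build_prompts(subjects, actions, locations, styles)
print(len(prompts))  # 2 * 2 * 2 * 2 = 16 combinations
print(prompts[0])    # cowboy riding a horse in the desert, watercolor style
```

The number of prompts grows multiplicatively with each list, so even short lists quickly produce hundreds of independent generation tasks.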

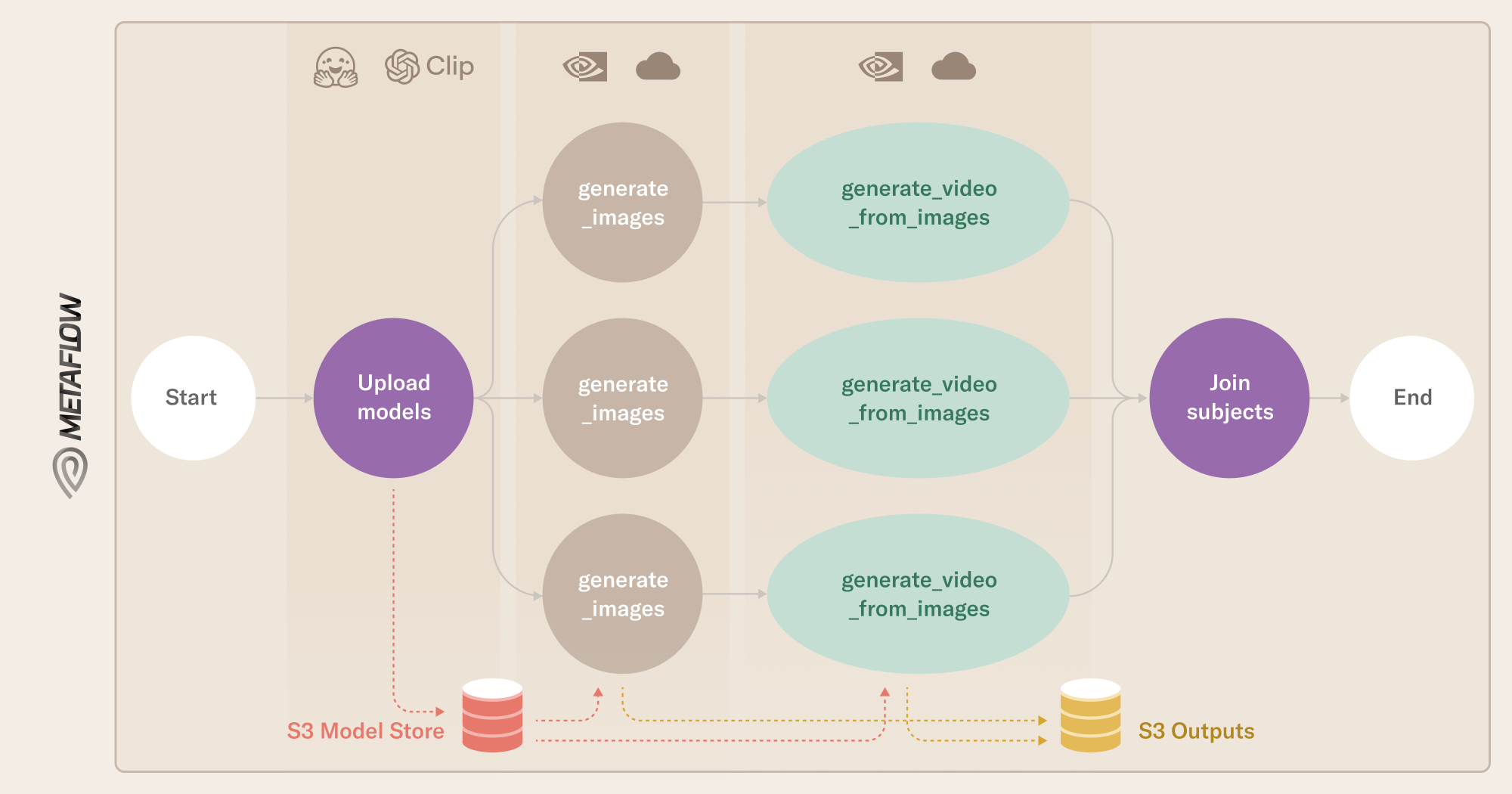

To generate images (or videos) using Stable (Video) Diffusion with Metaflow, we will define a branching workflow, in which

- each branch is executed on different GPUs, and

- the branches are brought together in a join step.
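The branch-and-join shape can be sketched locally with Python's standard library. This is only a stand-in to show the pattern, not Metaflow's API: the function names and the fake file names are assumptions, and a thread pool replaces what would be separate GPU-backed tasks in the real flow.

```python
from concurrent.futures import ThreadPoolExecutor


def generate_for_subject(subject: str) -> dict:
    """Stand-in for one branch; a real branch would run the models on a GPU."""
    return {"subject": subject, "videos": [f"{subject}-{i}.mp4" for i in range(2)]}


def join(branch_results: list) -> dict:
    """Stand-in for the join step: merge per-branch outputs into one mapping."""
    return {result["subject"]: result["videos"] for result in branch_results}


subjects = ["cowboy", "robot", "astronaut"]

# Fan out: one task per subject, executed concurrently.
with ThreadPoolExecutor(max_workers=len(subjects)) as pool:
    branch_results = list(pool.map(generate_for_subject, subjects))

# Join: collect every branch's output in one place.
all_videos = join(branch_results)
print(sorted(all_videos))  # ['astronaut', 'cowboy', 'robot']
```

Because the branches share no state, the same structure scales from a thread pool on a laptop to one task per GPU in the cloud.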

The generate_images step is the same as in the previous post, except we have upgraded the model to use the more recent stable-diffusion-xl-base-1.0 (note that it was trivial to switch Stable Diffusion out for Stable Diffusion-XL in our original Metaflow flow!).

To then generate video, the key addition is using the open-source Stable Video Diffusion repository in the generate_video_from_images step. Chained together, the image and video generation steps demonstrate how Metaflow connects versioned cloud object storage to remote GPU workers and replicates this connection in many parallel threads while keeping environments consistent and matching your local workstation.

The key runtime operations in the generate_video_from_images step include:

- download the cached Stability AI diffusion model and OpenAI CLIP model,

- download the relevant images for this task, produced in the previous task,

- for each image, for each motion bucket id, generate a video,

- push videos to S3, and

- set the self.style_outputs member variable to hold the prompt combination and corresponding S3 paths to the generated images and videos.

We can see the above operations in pseudocode that mirrors the actual workflow’s structure.

    @kubernetes(
        image=SGM_BASE_IMAGE,
        gpu=1,
        cpu=4,
        memory=24000,
    )
    @gpu_profile(artifact_prefix="video_gpu_profile")
    @card
    @step
    def generate_video_from_images(self):
        ...
        # Download the cached model checkpoints.
        model_store = self._get_video_model_store()
        model_store.download(self.video_model_version, "./checkpoints")
        self.style_outputs = []
        # Download the images produced by the previous task.
        image_store = ModelStore(self.stored_images_root)
        image_store.download("images", _dir)
        ...
        # Generate one video per image and motion bucket id.
        for (im_path, im_bytes, vid_bytes, motion_id) in ImageToVideo.generate(*_args):
            ...
        # Record the prompt combination and the S3 paths of images and videos.
        self.style_outputs = [...]
        self.next(self.join_subjects)
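The per-image, per-motion-bucket loop and the style_outputs bookkeeping described above can be sketched without running any models. In this sketch nothing is generated or uploaded; the function name, the record fields, and the key layout under video_root are all assumptions made for illustration, not the layout used by the actual flow.

```python
from itertools import product


def collect_outputs(prompt: str, image_keys: list, motion_bucket_ids: list, video_root: str) -> list:
    """Build one record per (image, motion bucket id) pair.

    The key layout under video_root is hypothetical, chosen for illustration.
    """
    outputs = []
    for image_key, motion_id in product(image_keys, motion_bucket_ids):
        # Strip the directory and the file extension from the image key.
        stem = image_key.rsplit("/", 1)[-1].rsplit(".", 1)[0]
        video_key = f"{video_root}/{stem}_motion{motion_id}.mp4"
        outputs.append(
            {"prompt": prompt, "image": image_key, "video": video_key, "motion_bucket_id": motion_id}
        )
    return outputs


records = collect_outputs(
    prompt="cowboy riding a horse, watercolor style",
    image_keys=["images/cowboy_0.png", "images/cowboy_1.png"],
    motion_bucket_ids=[60, 127],
    video_root="videos",
)
print(len(records))         # 2 images x 2 motion bucket ids = 4
print(records[0]["video"])  # videos/cowboy_0_motion60.mp4
```

Keeping the prompt next to the image and video keys in each record is what lets a later join step, or a UI such as the grid above, look up every video for a given prompt combination.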

Deploying to a production workflow orchestrator

The above is all you need to massively parallelize video production on the cloud from your laptop or local workstation. To take this to the next level, you may want to deploy your workflows and orchestrate them on the cloud. This is a key step in moving from research to production as it allows you to close your laptop and still have your workflow running in a highly available, easily observable, secure, and maintainable manner.

Metaflow makes it trivial to deploy workflows to Argo Workflows, a popular Kubernetes-native, production-grade workflow orchestrator. All that is required is a command-line change. Instead of

python text_to_video_foreach_flow.py --package-suffixes .yaml run --config-file ./style_video.yaml

you execute

python text_to_video_foreach_flow.py --package-suffixes .yaml argo-workflows create

python text_to_video_foreach_flow.py --package-suffixes .yaml argo-workflows trigger --config-file ./style_video.yaml

Once the argo-workflows create command deploys the workflow, we can even run the equivalent of the second command entirely through the Outerbounds UI:

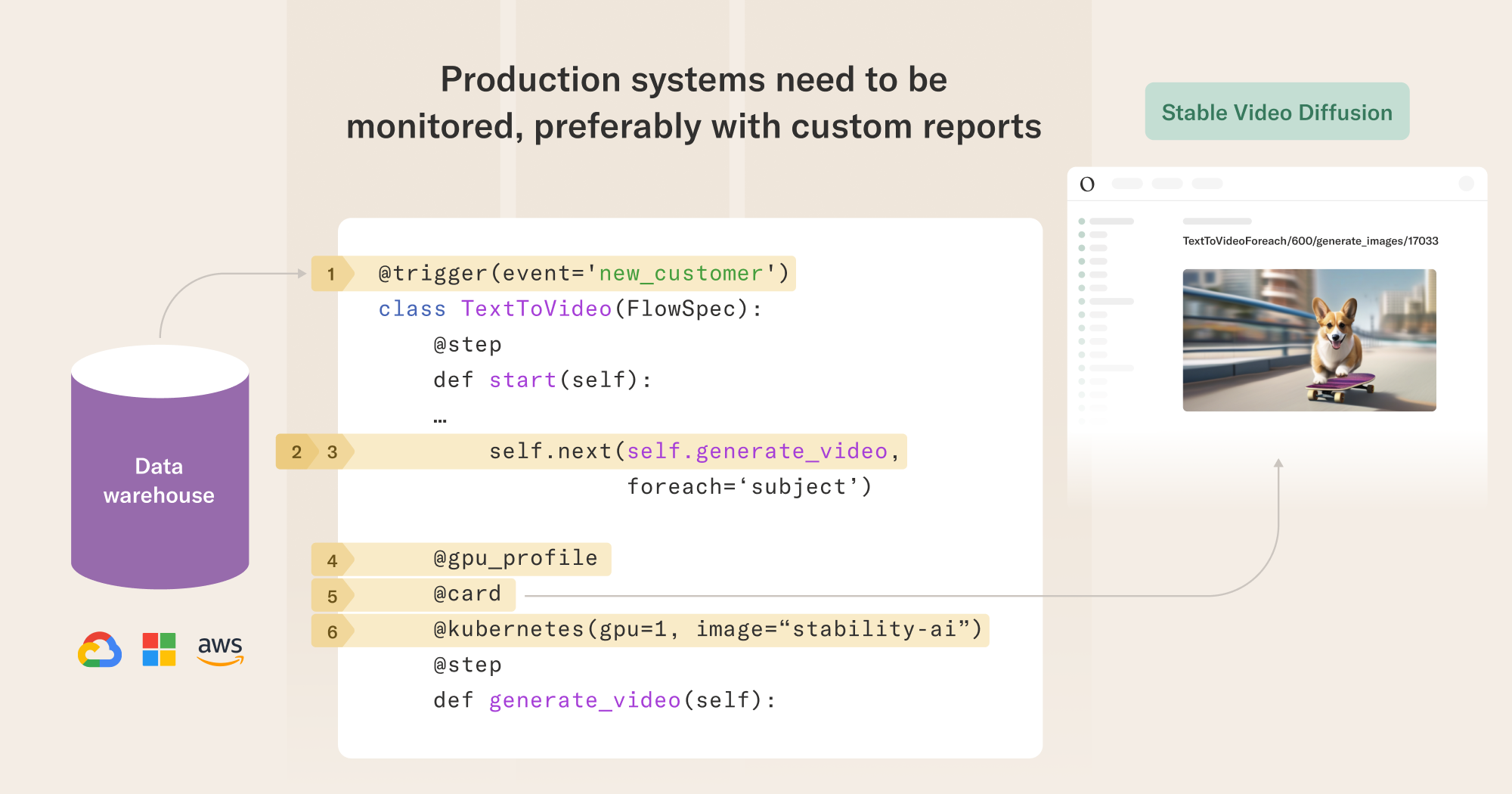

With Metaflow, such workflow orchestrators can also be used to build reactive machine learning and AI systems with event triggering! For example, you could trigger your video generation flow whenever

- New prompts, images, or other data end up in your data warehouse or

- Another flow has been completed, such as an image generation flow.

These affordances also allow you to decouple organizational concerns, such as when different teams are responsible for data, image, and video generation.

In the following schematic, we see that updates in the data warehouse trigger our TextToVideo flow, which

- Leverages GPUs on a Kubernetes cluster,

- Helps us visualize and monitor our models using Metaflow cards,

- Monitors compute using the GPU profile, and

- Parallelizes our GenAI video production using foreach.

Next steps

In this post, you learned how to scale video generation workflows and run them in a consistent and reliable manner. We showed how you can use an open-source pipeline to generate complete music videos in an afternoon.

To get started making your own movies, fork the code repository and run some flows. If you want to do it in a highly scalable, production-grade platform, you can get started with Outerbounds in 15 minutes. To learn more about building ML and AI systems, join us and over 3000 like-minded data science and software engineering friends on Slack.