Previously, we wrote about instruction tuning, an LLM fine-tuning approach, which you can read about here. In this post, we give an update on how we have since improved the implementation by following generally useful patterns for moving ML projects from prototype to production.

Take a look at our updated repository that includes the following improvements:

- Config-driven machine learning to shorten debugging cycles and make it easier to modify experiment trials and observe changes.

- Decoupled workflows to build reactiveness into the fine-tuning system.

- Model optimization to make models smaller and less expensive to train.

Config-driven machine learning

A common pattern that emerges as ML-powered software projects develop is that increasingly many parameters need to be set for each end-to-end run of the system. As parameters are added, it is increasingly important to consider how configuration files fit into developer workflows. Earlier in this project, developers passed parameters for each workflow run on the command line:

    python flow.py \
        --base-model="yahma/llama-7b-hf" \
        --macro-batch-size=128 \
        --micro-batch-size=4 \
        ... \
        run

Changing values in this format becomes inconvenient as configuration options accumulate. Instead, we prefer end users specify everything in one config file and pass it to the command as one entity. In our project, the configuration file defines the following parameter groups:

- DataParams

- TokenizationParams

- PromptTemplate

- ModelParams

- TrainingParams

- LoraParams

- WandbParams

Under the hood, we use OmegaConf because it has simple APIs to load, structure, and merge configuration objects from .yaml files in Python.

A benefit of the config-driven workflow is to reduce cognitive overhead in viewing and updating a run’s parameters. The configuration settings are also versioned with the corresponding Metaflow run as one entity, making it easy to track and use in cross-experiment comparisons. This affords scientists increased flexibility to separate how they design experiment trials from how to run them.
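To make the idea concrete, here is a minimal sketch of how grouped defaults and per-trial overrides can be combined into one config object. It deliberately uses only the Python standard library rather than OmegaConf, and it is not the project's code: the `ExperimentConfig` container, the `merge` and `load_config` helpers, and the `model` and `training` keys are assumptions made for illustration. Only the group names and the three default values come from the command-line example above.

```python
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


@dataclass
class ModelParams:
    base_model: str = "yahma/llama-7b-hf"


@dataclass
class TrainingParams:
    macro_batch_size: int = 128
    micro_batch_size: int = 4


@dataclass
class ExperimentConfig:
    # Hypothetical container; the real project defines more groups.
    model: ModelParams = field(default_factory=ModelParams)
    training: TrainingParams = field(default_factory=TrainingParams)


def merge(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively overlay `overrides` on `defaults`, rejecting unknown keys."""
    merged = dict(defaults)
    for key, value in overrides.items():
        if key not in defaults:
            raise KeyError(f"unknown config key: {key}")
        if isinstance(value, dict) and isinstance(defaults[key], dict):
            merged[key] = merge(defaults[key], value)
        else:
            merged[key] = value
    return merged


def load_config(overrides: Dict[str, Any]) -> ExperimentConfig:
    """Build a typed config from the defaults plus one trial's overrides."""
    merged = merge(asdict(ExperimentConfig()), overrides)
    return ExperimentConfig(
        model=ModelParams(**merged["model"]),
        training=TrainingParams(**merged["training"]),
    )


# Overrides as they might look after parsing one trial's config file.
trial = load_config({"training": {"micro_batch_size": 8}})
print(trial)
```

Rejecting unknown keys is a design choice in this sketch: a typo in a trial's config fails immediately instead of silently training with the defaults.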

Decoupled workflows for event-driven ML

As the complexity of the experiment pipeline increases, components become easier to debug and develop when complex workflow steps are decoupled. To this end, another structural change to the code in our project was to separate the data preparation and model training workflows. Among other benefits, this enables an event-driven system where fine-tuning jobs can react to newly prepared data.

Data preparation

As before, these workflows access data and models from HuggingFace. We use the same databricks/databricks-dolly-15k dataset as the base example. Unlike before, gathering data and fine-tuning are decoupled.

There are several benefits to this modularity. First, developers can iterate on isolated components, improving debuggability and composability. Second, the data preparation workflow is written to publish events, enabling the desired event-driven pattern.

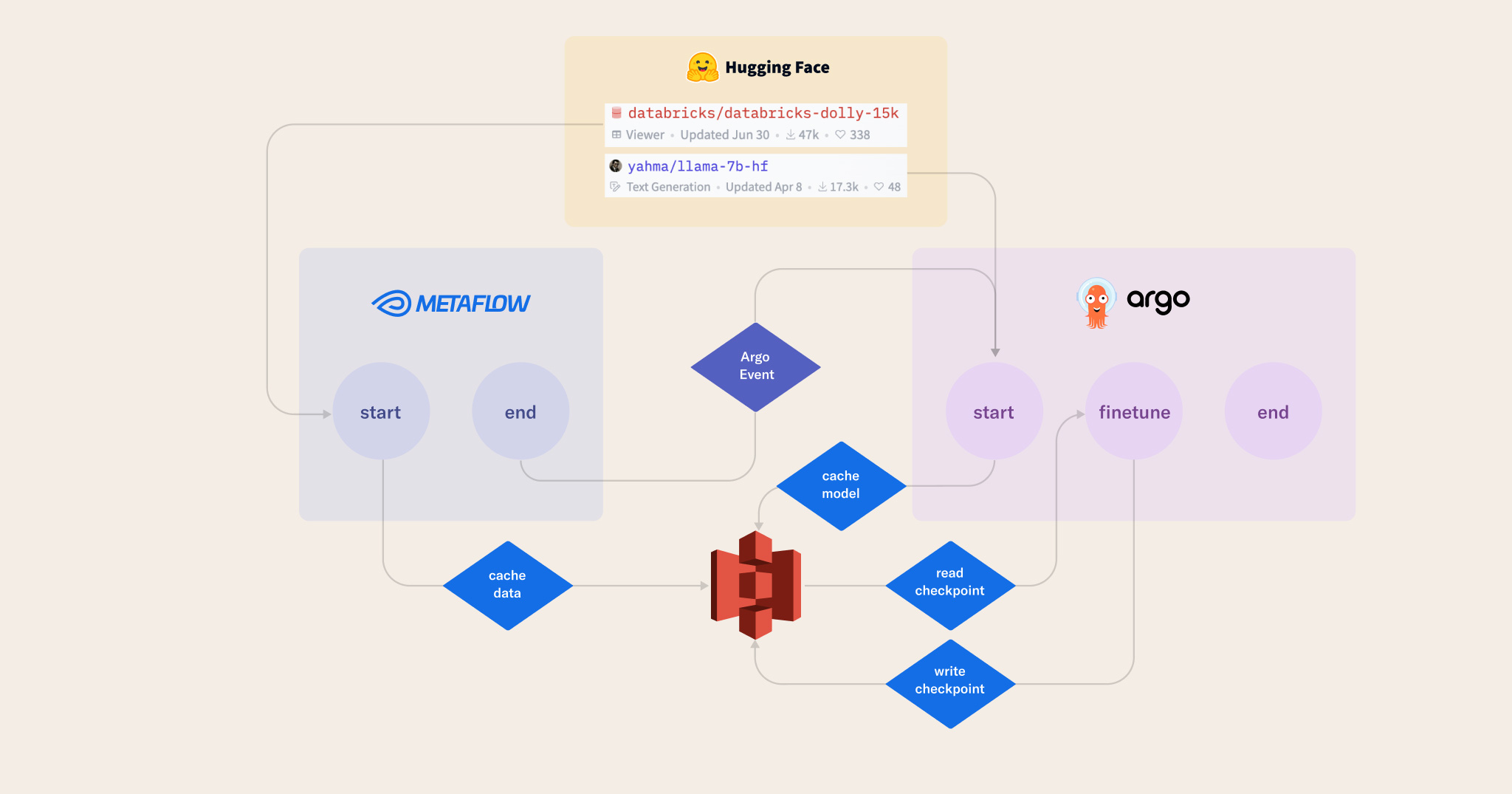

Consider this diagram of the DataPrepFlow.

Data ingestion and transformation happen in the start step. Based on the result, for example a successful data validation routine, the end step may publish an ArgoEvent. The following section describes an example of a subscriber to this event.

Fine-tuning Llama

When DataPrepFlow publishes an event, the LlamaInstructionTuning workflow is automatically run because the flow code subscribes to the {project}.dataprep event in the @trigger decorator.
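The publish/subscribe relationship between the two flows can be illustrated with a toy, in-process registry. This is only a sketch of the pattern, not Metaflow's implementation: the `trigger` and `publish` functions below are hypothetical stand-ins that mimic the decorator and the event publication, and the project name and dataset path are made up. In the real system the event travels through Argo Events and the subscriber is a deployed flow.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical in-process registry standing in for the event bus.
_subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)


def trigger(event: str):
    """Register the decorated function to run whenever `event` is published."""
    def register(func):
        _subscribers[event].append(func)
        return func
    return register


def publish(event: str, payload: dict) -> int:
    """Deliver `payload` to every subscriber of `event`; return how many ran."""
    for func in _subscribers[event]:
        func(payload)
    return len(_subscribers[event])


PROJECT = "llm_tuning"  # hypothetical project name
runs = []


@trigger(event=f"{PROJECT}.dataprep")
def llama_instruction_tuning(payload: dict) -> None:
    # Stand-in for launching the fine-tuning flow on the new dataset.
    runs.append(f"fine-tune on {payload['dataset_path']}")


def data_prep_flow(validation_passed: bool) -> None:
    # Publish only when the prepared data passes validation.
    if validation_passed:
        publish(f"{PROJECT}.dataprep", {"dataset_path": "prepared/dolly-15k"})


data_prep_flow(validation_passed=False)  # nothing is published
data_prep_flow(validation_passed=True)   # the subscriber runs once
print(runs)
```

The publisher never refers to the subscriber by name, which is what allows several fine-tuning deployments to listen to the same data preparation event.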

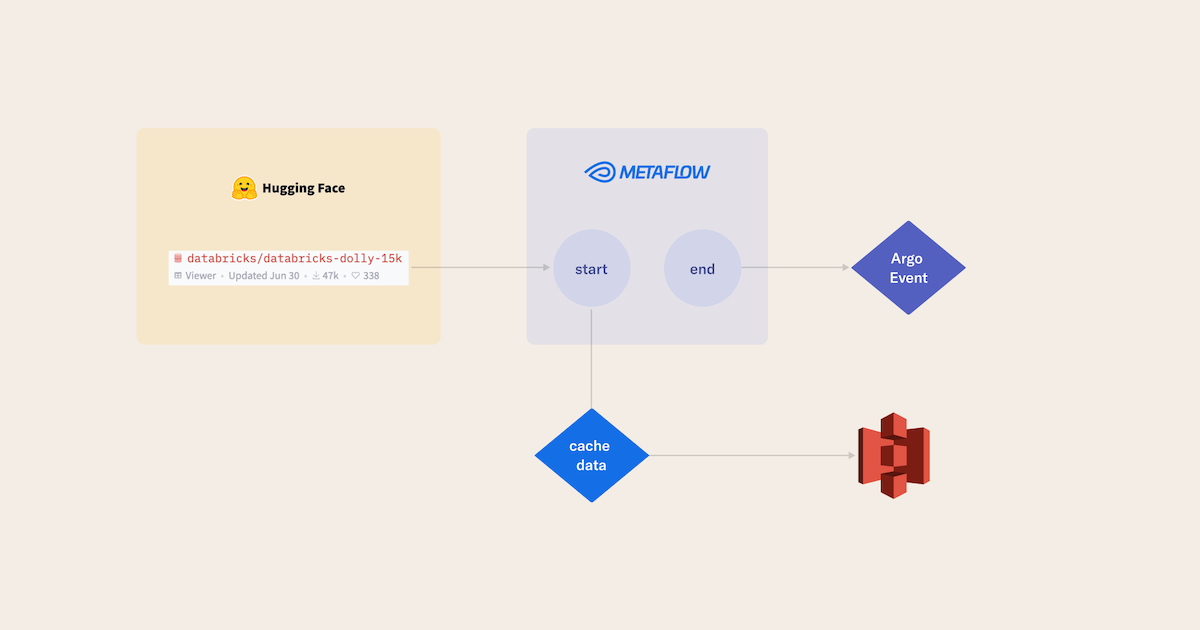

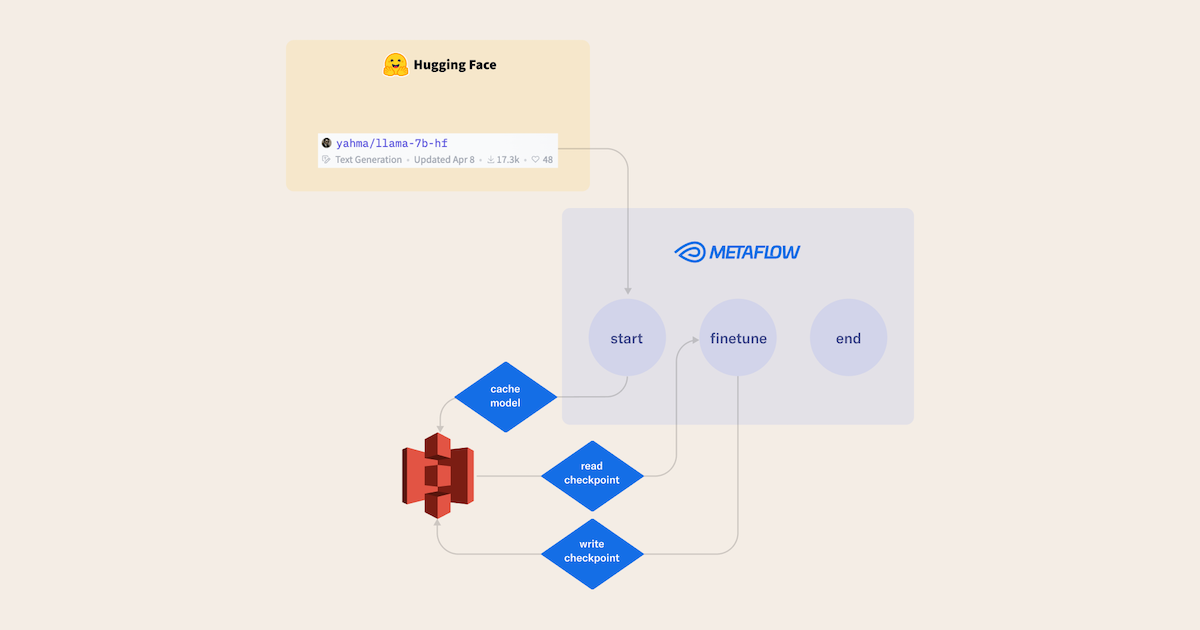

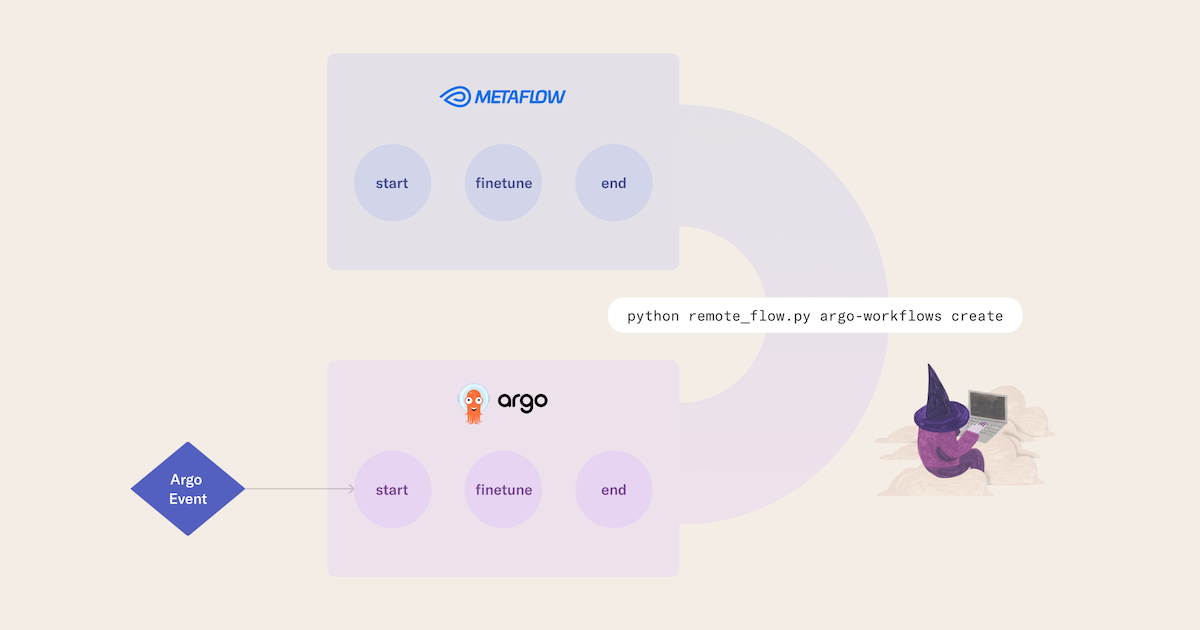

Through decorators like @trigger and simple commands, Metaflow makes this kind of event-driven workflow straightforward to implement. First, we write a Metaflow workflow in the typical Python file structure and annotate it with @trigger. For example, here is a flow diagram of this project's LlamaInstructionTuning flow.

Collaborators can manually change the steps and run one-off flows to experiment, debug, and test. Using the Metaflow @project feature, developers can deploy workflows without interfering with each other's stable namespaces. Additionally, end users can create multiple copies of deployments that train models with different pre-trained models and hyperparameter combinations while listening to the same data preparation event. With this confidence, and when satisfied with their experimental runs, a developer or CI/CD process runs a single Metaflow command to deploy the workflow to Argo.

In addition to Argo, Metaflow can compile DAGs to run on AWS Step Functions and Airflow.

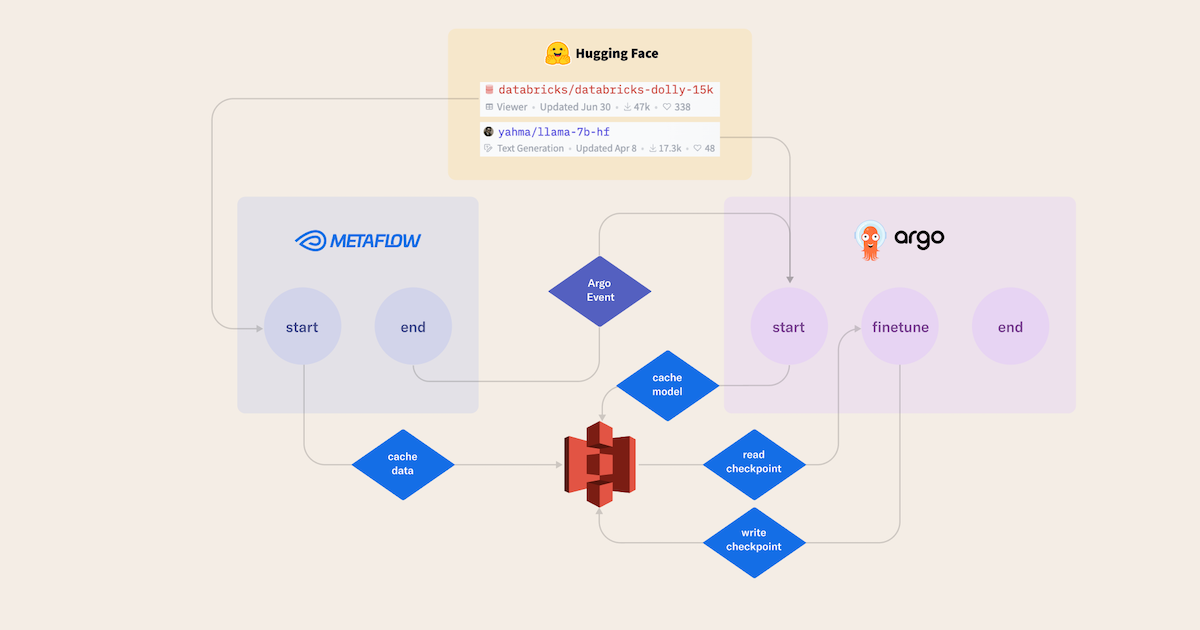

With the workflow deployed to Argo, the LlamaInstructionTuning flow automatically runs when an event is emitted. Let’s zoom out and look at the full system.

The end-to-end flow from gathering new data to fine-tuning an LLM can be launched with one command from a developer’s laptop, automated on a schedule, or triggered by an event. This is how you can use Metaflow to build reactive fine-tuning workflows.

The system has several other new connections to the outside world.

For example, the start step of LlamaInstructionTuning now uses a model store.

    @step
    def start(self):
        ...
        # Download from HuggingFace only if the model is not yet cached in the store.
        if not store.already_exists(base_model):
            self.download_model_from_huggingface(local_path)
            store.upload(local_path, base_model)
        self.next(self.finetune)

The code checks if a suitable model exists in the S3 store; if not, the model is downloaded from the HuggingFace Hub and cached in S3. Caching makes it faster to start training in the Metaflow task in subsequent runs because moving the model from S3 to EC2 is faster than downloading from HuggingFace.
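The cache-or-download logic can be tried locally with a small stand-in for the store. The sketch below is an assumption-laden illustration: `LocalModelStore` keeps files in a temporary directory instead of S3, `fake_hub_download` writes a placeholder file instead of contacting the HuggingFace Hub, and `ensure_cached` is a hypothetical helper name. Only the method names `already_exists`, `upload`, and `download` mirror the snippets in this post.

```python
import shutil
import tempfile
from pathlib import Path


class LocalModelStore:
    """Hypothetical stand-in for the S3-backed store, rooted at a local directory."""

    def __init__(self, root: Path) -> None:
        self.root = root

    def _key_path(self, key: str) -> Path:
        return self.root / key

    def already_exists(self, key: str) -> bool:
        return self._key_path(key).is_dir()

    def upload(self, local_path: Path, key: str) -> None:
        shutil.copytree(local_path, self._key_path(key), dirs_exist_ok=True)

    def download(self, key: str, local_path: Path) -> None:
        shutil.copytree(self._key_path(key), local_path, dirs_exist_ok=True)


downloads = []


def fake_hub_download(base_model: str, local_path: Path) -> None:
    # Placeholder for the slow download from the model hub.
    downloads.append(base_model)
    local_path.mkdir(parents=True, exist_ok=True)
    (local_path / "weights.bin").write_bytes(b"\x00" * 16)


def ensure_cached(store: LocalModelStore, base_model: str, workdir: Path) -> None:
    # Same check as the start step: contact the hub only on a cache miss.
    if not store.already_exists(base_model):
        local_path = workdir / "download"
        fake_hub_download(base_model, local_path)
        store.upload(local_path, base_model)


with tempfile.TemporaryDirectory() as tmp:
    tmp_path = Path(tmp)
    store = LocalModelStore(tmp_path / "store")
    ensure_cached(store, "yahma/llama-7b-hf", tmp_path)  # miss: downloads
    ensure_cached(store, "yahma/llama-7b-hf", tmp_path)  # hit: skips the hub
    cached = store.already_exists("yahma/llama-7b-hf")
print(downloads, cached)
```

The second call finds the model in the store and never reaches the download function, which is the behavior that shortens start-up in subsequent runs.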

The finetune step loads the requested data and model, runs the job (using the torchrun command under the hood), and finally uploads the tuned model to S3.

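    @step
    def finetune(self):
        ...
        # Pull the cached base model from the store onto the training instance.
        hf_model_store.download(base_model, model_path)
        # Launch the training job (torchrun under the hood).
        self.run(base_model_path=model_path, dataset_path=dataset_path)
        # Upload the tuned model to S3.
        trained_model_store.upload(model_save_dir, base_model)
        ...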

Model optimization

With a more stable workflow, we can refocus our attention on optimization. Although conceptually fine-tuning is a small part of a much larger system, technically, it can be the most expensive step to execute, as LLM training takes significant GPU horsepower. By default, GPU requirements grow as model parameter counts grow, and fine-tuning state-of-the-art models gets more expensive. This makes fine-tuning (or any deep learning) a critical performance optimization target.

To train the same models with smaller footprints, or larger models with the same footprint, we made improvements based on two methods for efficient fine-tuning: quantization and low-rank adaptation (LoRA). Together, they reduce the cost of one run by around 50%. Because of how LoRA works, cost savings at inference time can be even larger. The smaller model representation requires less GPU memory, which makes it possible to fine-tune the same-sized pre-trained model and serve the result on a more affordable category of devices.

Quantization

Instead of expressing each number representing a parameter of a neural network with 32-bit precision, you can lower the precision of each number in memory.

Consider Llama-7B. A computer needs about 9.2 GB of VRAM to load it from disk, as the first row of the table below shows.

| Model | VRAM Used | Minimum Total VRAM | Card Examples | RAM/Swap to Load* |
|---|---|---|---|---|
| LLaMA-7B | 9.2 GB | 10 GB | 3060 12GB, 3080 10GB | 24 GB |
| LLaMA-13B | 16.3 GB | 20 GB | 3090, 3090 Ti, 4090 | 32 GB |
| LLaMA-30B | 36 GB | 40 GB | A6000 48GB, A100 40GB | 64 GB |
| LLaMA-65B | 74 GB | 80 GB | A100 80GB | 128 GB |

Quantization trades numerical precision for lower VRAM requirements, so a more approximate version of a model that was previously too big can fit on the same device without running out of GPU memory.
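As a rough illustration of the trade-off, the sketch below computes the memory needed for the weights alone at different precisions and applies a simple absmax int8 quantization to a few numbers. It is a teaching example under stated assumptions, not what the libraries used in this project do internally: the parameter count is rounded to 7 billion, the helper names are hypothetical, and production 8-bit schemes handle outliers and per-block scaling in more sophisticated ways.

```python
from typing import List, Tuple


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def quantize_absmax(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to int8 codes by scaling the largest magnitude to 127."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    return [round(v / scale) for v in values], scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]


# Assumes a round 7 billion parameters; activations and optimizer state add more.
for bits in (32, 16, 8):
    print(f"{bits}-bit weights: {weight_memory_gb(7e9, bits):.0f} GB")

weights = [0.333, -1.27, 0.64, 0.0]
codes, scale = quantize_absmax(weights)
restored = dequantize(codes, scale)
print(codes, restored)
```

Each restored value differs from the original by at most half a quantization step, which is the precision given up in exchange for storing one byte per parameter instead of four.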

To implement quantization, we use HuggingFace’s Parameter-Efficient Fine-Tuning (PEFT) library, which has a function prepare_model_for_int8_training that our trainer applies to the HuggingFace model object. Recent PEFT releases replace this function with prepare_model_for_kbit_training.

LoRA

Where quantization reduces precision of each number representing a parameter, LoRA leverages linear algebra insights to reduce the number of parameters that are fine-tuned and used at inference time. The LoRA hypothesis is that parameter updates learned in fine-tuning have a low “intrinsic rank”, meaning there are few linearly independent columns in the update matrix. The goal is to find low rank decomposition matrices that hold fewer parameters representing important dimensions in the update matrix.

To achieve this, LoRA constructs a matrix of zeros and a matrix of random noise that when multiplied together produce a matrix of the same shape as the parameters adapted in fine-tuning (the original paper describes applying LoRA only to transformer self-attention modules). These matrices can be used for fine-tuning and have far fewer parameters to update.
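The construction described above can be sketched in a few lines of plain Python. Everything here is illustrative: the helper names, the tiny matrix shapes, the noise scale of 0.02, and the 4096 x 4096 layer at rank 8 are assumptions chosen to keep the numbers easy to follow, not values taken from this project.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product, adequate for tiny illustrative shapes."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def init_lora(d: int, k: int, r: int, seed: int = 0) -> Tuple[Matrix, Matrix]:
    """Return (B, A): B is d x r zeros, A is r x k Gaussian noise."""
    rng = random.Random(seed)
    b = [[0.0] * r for _ in range(d)]
    a = [[rng.gauss(0.0, 0.02) for _ in range(k)] for _ in range(r)]
    return b, a


def trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the two low-rank factors of a d x k update."""
    return r * (d + k)


# Tiny shapes so the product is easy to inspect.
B, A = init_lora(d=6, k=4, r=2)
delta = matmul(B, A)  # same shape as the adapted weight: 6 x 4

# Parameter counts for one 4096 x 4096 matrix at rank 8 (illustrative sizes).
full = 4096 * 4096
lora = trainable_params(4096, 4096, 8)
print(len(delta), len(delta[0]), full, lora, full // lora)
```

Because one factor starts at zero, the product is all zeros at initialization, so fine-tuning begins from the unmodified pre-trained weights and only the two small factors receive updates.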

Combined with quantization, LoRA can drastically reduce model training and operational costs. This section barely scratches the surface of useful things that can be done with LoRA. To deepen your knowledge, consider how to design systems to:

- leverage the fact that LoRA checkpoints are orders of magnitude smaller than entire LLM checkpoints;

- decide which parameter layers in a pre-trained network to apply LoRA to;

- stack rank decomposition matrices from various LoRA fine-tuning jobs;

- find the optimal rank of adapted matrices in fine-tuning domains relevant to your products; and

- combine LoRA with retrieval-augmented generation.

Summary

In this post, we walked through how we improved our instruction-tuning workflows through the following general patterns for improving ML-powered software as it moves from prototype to production:

- config-driven machine learning,

- decoupled workflows for event-driven ML, and

- model optimization.

As a result, we have a more ergonomic approach to LLM fine-tuning, and it is easier to reproduce each other’s experiments. Moreover, we can automate workflows and optimize modular steps, making the modeling pipeline more widely useful and cheaper to operate.

You can get started with the code here.

For more examples of cutting-edge AI platforms built using Metaflow, check out Rahul Parundekar's recent Metaflow Office Hours session: Crafting General Intelligence: LLM Fine-tuning with Metaflow at Adept.ai.