Over the past years, we have been helping companies deploy a wildly diverse set of ML workloads in production. Last year, we added open-source large language models (LLMs) to the mix. Continuing the line of research we started with NVIDIA, we recently collaborated with Hamel Husain, an LLM expert at Parlance Labs, to explore various popular solutions to model serving in general and LLM inference in particular. In this article, we share our decision rubric for model deployments, using LLM inference as an example.

The diverse landscape of ML and AI

ML and AI models come in various shapes and sizes. A simple regression model may be enough to forecast demand for, say, sunscreen. A suite of tree models may be used to recognize fraud. Or, you can perform motion detection in real time using OpenCV with a few lines of code. As the icing on the cake, today you can hack together a mind-bogglingly capable chatbot with open-source large language models.

Every application relates to underlying models differently. Some need to handle millions of requests per second, whereas others are low-scale but need to be highly available. The following chart illustrates some examples that we have come across in terms of three typical requirements.

The axes show scale and reliability, and the size of each dot depicts the importance of being able to iterate quickly.

In case you wonder, the chart is implemented as a Metaflow card showing a VegaChart.

The many faces of deployments

Besides the three dimensions of scale, reliability, and speed of iteration, other dimensions have a major impact on the shape of the solution. Consider the following:

| Dimension | Easier end | Harder end |
|---|---|---|
| Speed (time to response) | Slow: results needed in minutes, e.g. portfolio optimization | Fast: results needed in milliseconds, e.g. high-frequency trading |
| Scale (requests/second) | Low: 10 requests/sec or less, e.g. an internal dashboard | High: 10k requests/sec or more, e.g. a popular e-commerce site |
| Pace of improvement | Low: updated infrequently, e.g. a stable, marginal model | High: constant iteration needed, e.g. an innovative, important model |
| Real-time inputs needed? | No real-time inputs, e.g. analyzing past data | Yes, real-time inputs, e.g. targeted travel ads |
| Reliability requirement | Low: OK to fail occasionally, e.g. a proof of concept | High: must not fail, e.g. a fraud detection model |
| Model complexity | Simple models, e.g. linear regression | Complex models, e.g. LLMs |

If your model and use case fall into the left column, you are in luck! Plenty of tools exist to satisfy your requirements. For instance, you can precompute predictions as a batch job or deploy your model as a Shiny or Streamlit app on a small server. In contrast, if you are in the right column, you may be building OpenAI or a self-driving car. You are bound to have significant engineering challenges ahead, both in terms of developing the system and operating it.

In practice, most use cases are between the two extremes, having a bespoke mixture of requirements. Details matter.

For instance, if you were asked to build a system with high-speed and high-scale requirements, you might consider it a prototypical use case for real-time inference. Yet, if the inputs were not real-time - think e.g. recommending movies based on the titles the user has watched over the past year - a better approach might be to precompute predictions and store them in a high-performance cache layer. Because it minimizes the number of moving parts in the request path, this solution can deliver lower latency and higher scale than any other approach.
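To make the contrast concrete, here is a minimal sketch of the precompute pattern. It assumes a toy, hypothetical `recommend` function in place of a trained model and a plain Python dict in place of a high-performance cache such as DynamoDB. The batch step scores every known user ahead of time, so the request path shrinks to a single key lookup.

```python
def recommend(history: list[str], catalog: list[str], k: int = 2) -> list[str]:
    """Hypothetical model: suggest the first k catalog titles not yet watched."""
    return [title for title in catalog if title not in history][:k]


def precompute(histories: dict[str, list[str]], catalog: list[str]) -> dict[str, list[str]]:
    """Batch step, run offline: score every known user ahead of time."""
    return {user: recommend(history, catalog) for user, history in histories.items()}


def serve(cache: dict[str, list[str]], user_id: str) -> list[str]:
    """Request path: a single key lookup, no model call."""
    return cache.get(user_id, [])


if __name__ == "__main__":
    cache = precompute({"u1": ["Alien"], "u2": []}, ["Alien", "Heat", "Up"])
    print(serve(cache, "u1"))  # ['Heat', 'Up']
```

Users missing from the cache fall back to an empty list here; a real system would need a deliberate fallback, such as popular titles.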

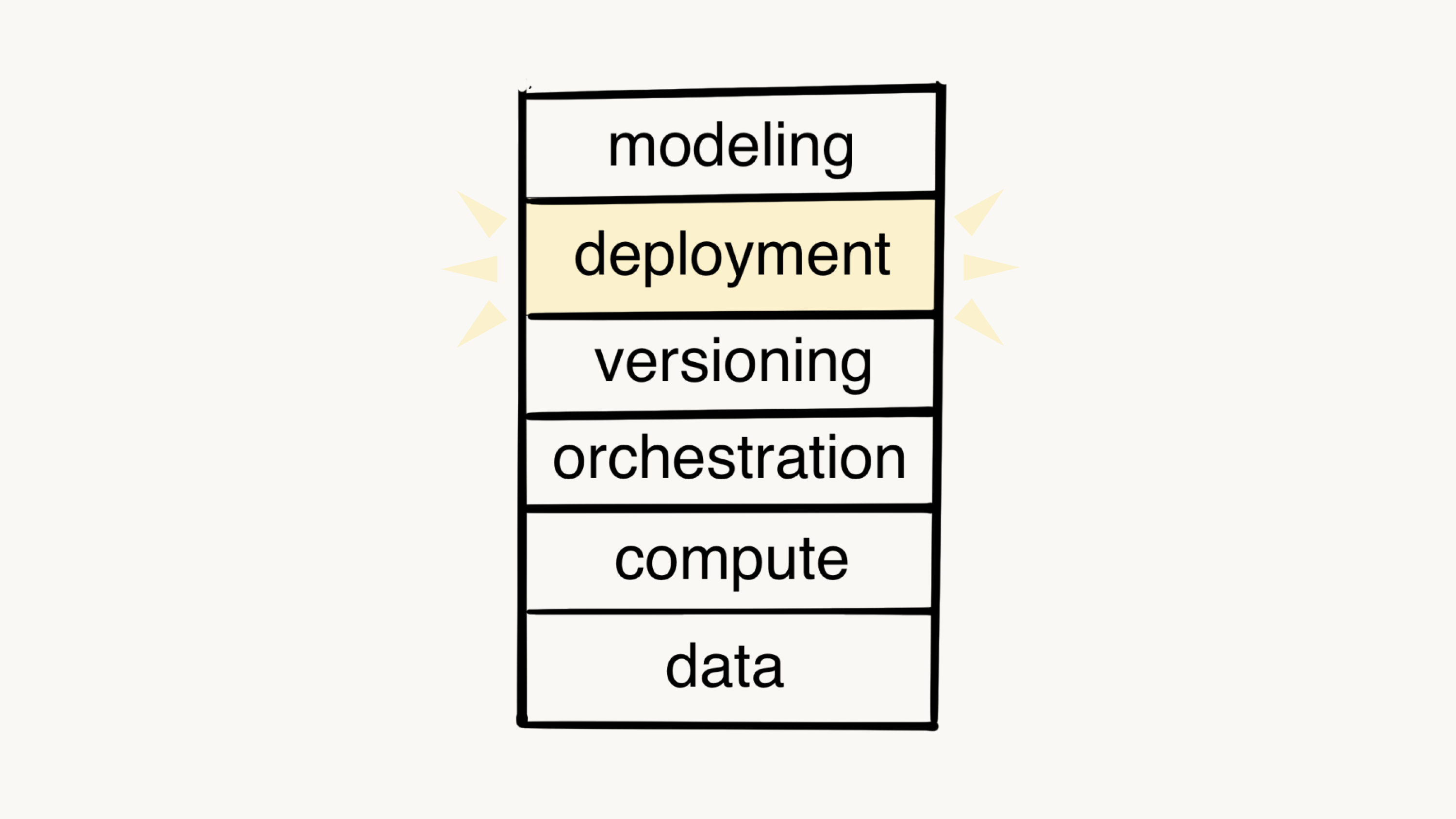

Deciding how to deploy

If you were asked to ship an ML/AI project with its bespoke set of requirements to production, how should you go about doing it? When it comes to preprocessing data, producing features, and training models behind the scenes, Metaflow has got your back. In this article, we focus on the deployment layer of our ML/AI infrastructure stack.

When it comes to deployments, it is useful to start by answering two key questions that have a major impact on the chosen technical approach, as shown in this decision tree:

A key consideration is how large the set of all inputs is. If the set is small enough to enumerate (e.g. personalizing responses based on the user’s past behavior), precomputing responses is a viable option: you can simply run batch inference over the set of inputs in another Metaflow flow. If the set of inputs is large, you can shard the inputs and process them in parallel using foreach, making it possible to produce even billions of responses in a short amount of time.
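The sharding idea can be sketched with the standard library. This is a stand-in, not Metaflow code: a thread pool plays the role of Metaflow's foreach, and `predict_shard` is a hypothetical model that squares its inputs. In an actual flow, a step would set `self.shards` and call `self.next(self.predict, foreach="shards")`, each `predict` task would read its shard from `self.input`, and a join step would merge the results from `inputs`.

```python
from concurrent.futures import ThreadPoolExecutor


def make_shards(items: list, num_shards: int) -> list[list]:
    """Split items into num_shards contiguous shards of near-equal size."""
    size, extra = divmod(len(items), num_shards)
    shards, start = [], 0
    for i in range(num_shards):
        end = start + size + (1 if i < extra else 0)  # spread the remainder
        shards.append(items[start:end])
        start = end
    return shards


def predict_shard(shard: list[int]) -> dict[int, int]:
    """Hypothetical model: score every input in one shard."""
    return {x: x * x for x in shard}


def batch_inference(inputs: list[int], num_shards: int = 4) -> dict[int, int]:
    """Fan out over shards in parallel, then merge (the 'join' step)."""
    results: dict[int, int] = {}
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        for partial in pool.map(predict_shard, make_shards(inputs, num_shards)):
            results.update(partial)
    return results


if __name__ == "__main__":
    print(make_shards(list(range(10)), 3))  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
```

The merged dict is what a batch flow would then write to the cache layer.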

If the input set is too large, often due to combinatorial explosion (e.g. the possible combinations of stocks in an investment portfolio), precomputing can become infeasible. The next key question is how quickly you need the responses once a specific set of inputs is known. If you can afford to produce the results asynchronously, taking 5-15 minutes, you can trigger a response flow on the fly using event triggering. The flow then pushes results to a cache, from which the requester can retrieve them.
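Below is a minimal single-process sketch of this asynchronous pattern. It assumes an in-memory queue instead of an event bus and a background thread in place of the triggered flow (in Metaflow, the flow would be started through event triggering, e.g. the `@trigger` decorator). `optimize_portfolio` is a hypothetical placeholder that returns equal weights. The requester gets an ID immediately and polls the cache until the result appears.

```python
import queue
import threading
import uuid
from typing import Optional

# In-memory stand-ins (assumptions): a job queue instead of an event bus,
# and a dict instead of a shared cache such as DynamoDB.
jobs: "queue.Queue[tuple[str, list[str]]]" = queue.Queue()
results_cache: dict[str, dict[str, float]] = {}
cache_lock = threading.Lock()


def optimize_portfolio(tickers: list[str]) -> dict[str, float]:
    """Hypothetical slow job; here it simply assigns equal weights."""
    weight = 1.0 / len(tickers)
    return {ticker: weight for ticker in tickers}


def worker() -> None:
    """Plays the role of the event-triggered flow."""
    while True:
        request_id, tickers = jobs.get()
        result = optimize_portfolio(tickers)
        with cache_lock:
            results_cache[request_id] = result  # push the result to the cache
        jobs.task_done()


def submit(tickers: list[str]) -> str:
    """Fire the 'event' and return immediately with a request ID."""
    request_id = str(uuid.uuid4())
    jobs.put((request_id, tickers))
    return request_id


def poll(request_id: str) -> Optional[dict[str, float]]:
    """Requester side: None until the result has been pushed to the cache."""
    with cache_lock:
        return results_cache.get(request_id)


threading.Thread(target=worker, daemon=True).start()
```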

A benefit of both green-box cases is that no extra infrastructure is needed besides the usual Metaflow stack. The results can be highly available, and in the case of precomputing, they can be served from a cache layer with extremely low latency. To understand what this looks like in practice, see this tutorial that uses DynamoDB as the cache, or this simple movie recommender that uses a local cache with a Flask server.

Choosing systems for online model serving

What about the yellow box above, when we need responses quickly based on inputs received in real-time? This pattern requires a service that is able to receive requests, query the model, and produce responses in seconds or faster.

Depending on the complexity of the model and the scale, latency, and high-availability requirements, operating a service like this can range from a technical sideshow to a world-class engineering challenge. If you want the problem to go away with minimal effort, the easy path is to rely on a managed model serving platform, such as Amazon SageMaker or Azure AI endpoints.

The downsides of managed solutions are well known: Cost can be a concern, a managed solution is necessarily less flexible than an in-house approach, and sometimes vendors can’t deliver what they promise. Vendor-managed solutions tend to work best for average workloads: It is not hard to handle the simplest cases in-house and the gnarliest cases may be impossible to outsource.

Easy model serving

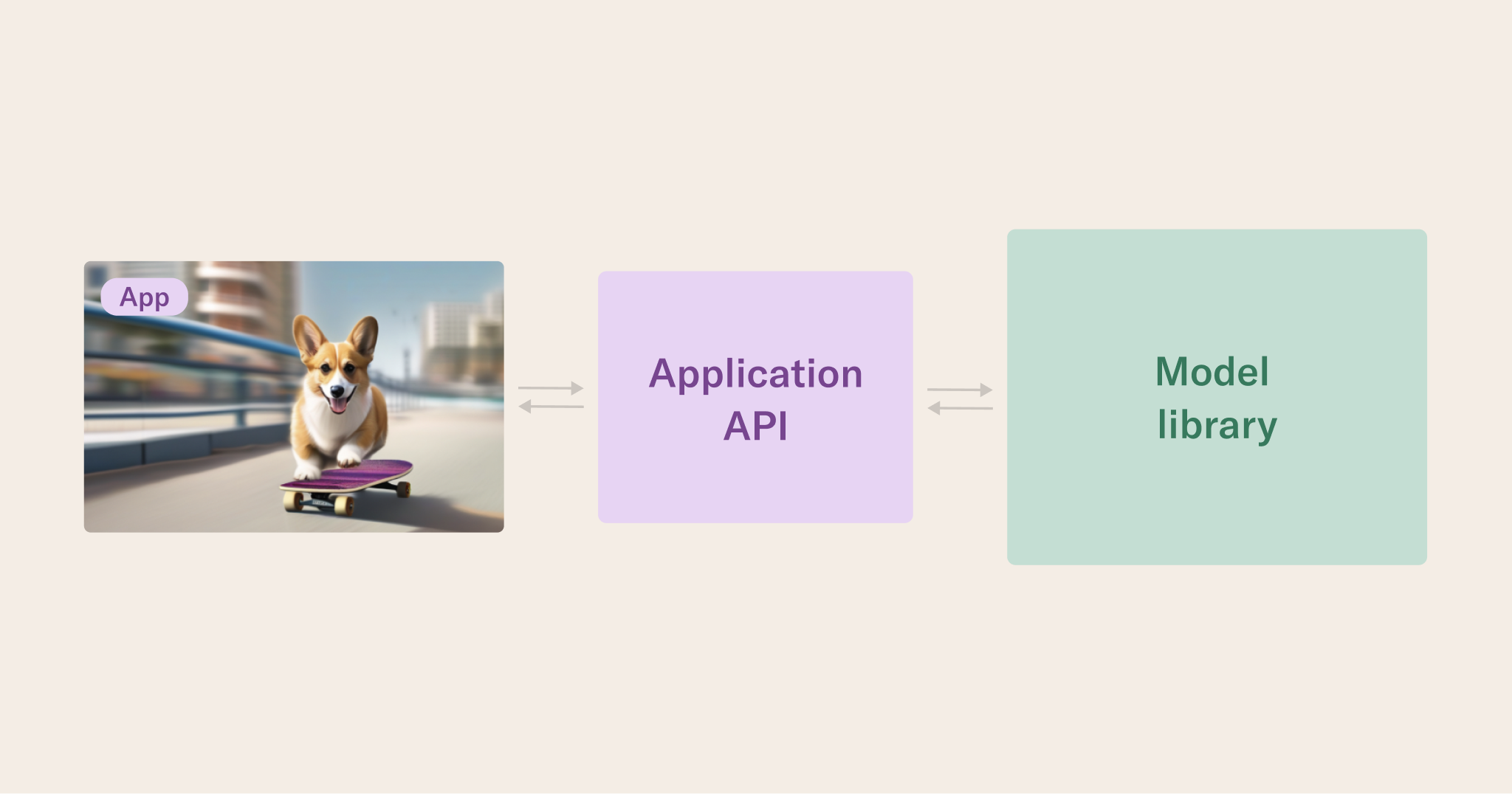

If you decide not to go with a managed solution, it is good to know how typical model serving solutions work under the hood. Let’s use this diagram to illustrate a simple service:

- On the left, you have your product or application (e.g. showing AI-generated videos of corgis).

- The app sends a request to the application backend (the purple box), which can be a simple microservice, e.g. built with the well-known, developer-friendly FastAPI. Typically, this layer extracts parts of the request and runs feature encoders to produce a feature vector that can be input to the model.

- The application API calls another process hosting a model-specific inference library, e.g. XGBoost or scikit-learn, to query the model.

- After receiving a response, the application API may post-process the model response before returning it to the application.
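The request path described in the list can be sketched as plain Python functions. This is a hedged illustration: `BREEDS`, `encode_features`, and the linear model are hypothetical stand-ins, and the model runs in-process rather than in a separate inference process. In a FastAPI service, `handle_request` would become the body of a route handler.

```python
from dataclasses import dataclass

# Hypothetical vocabulary, fixed at training time.
BREEDS = ["corgi", "husky", "poodle"]


def encode_features(request: dict) -> list[float]:
    """Feature encoder: one-hot breed plus one numeric feature."""
    one_hot = [1.0 if request["breed"] == breed else 0.0 for breed in BREEDS]
    return one_hot + [float(request["video_length_s"])]


@dataclass
class LinearModel:
    """Stand-in for a model served by an inference library such as XGBoost."""
    weights: list[float]
    bias: float

    def predict(self, features: list[float]) -> float:
        return self.bias + sum(w * x for w, x in zip(self.weights, features))


def postprocess(score: float) -> dict:
    """Clamp the score to [0, 1] and shape the response for the app."""
    return {"score": round(min(max(score, 0.0), 1.0), 4)}


def handle_request(request: dict, model: LinearModel) -> dict:
    features = encode_features(request)  # 1. encode the request
    score = model.predict(features)      # 2. query the model
    return postprocess(score)            # 3. post-process the response
```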

A simple service like this doesn’t differ much from any microservice that may already be powering your product. Extra care is required to keep feature encoding consistent with the training pipeline, which can be accomplished by deploying models and their encoders in lock-step with training - see our blog post with NVIDIA for inspiration.
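One way to keep the encoder and the model in lock-step is to ship them as a single versioned artifact and validate them together at load time. The sketch below assumes a JSON file holding a one-hot vocabulary (plus one numeric feature) and linear-model parameters; the file layout and function names are hypothetical. A training flow would write the file, and the service would load nothing else.

```python
import json
import os
import tempfile


def save_bundle(path: str, version: str, vocabulary: list[str],
                weights: list[float], bias: float) -> None:
    """Write the encoder vocabulary and the model parameters as ONE artifact."""
    bundle = {
        "version": version,
        "encoder": {"vocabulary": vocabulary},
        "model": {"weights": weights, "bias": bias},
    }
    with open(path, "w") as f:
        json.dump(bundle, f)


def load_bundle(path: str) -> dict:
    """Load encoder and model together, refusing mismatched pairs."""
    with open(path) as f:
        bundle = json.load(f)
    # Assumed encoder layout: one-hot vocabulary plus one numeric feature.
    n_features = len(bundle["encoder"]["vocabulary"]) + 1
    n_weights = len(bundle["model"]["weights"])
    if n_features != n_weights:
        raise ValueError(
            f"encoder yields {n_features} features, model expects {n_weights}")
    return bundle


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "bundle-v1.json")
        save_bundle(path, "v1", ["corgi", "husky", "poodle"], [0.1, 0.2, 0.3, 0.01], 0.0)
        print(load_bundle(path)["version"])  # v1
```

Failing loudly at load time is the point of the design: a mismatched encoder and model never reach the request path.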

A key benefit of this approach is its minimal infrastructure requirements: You can run a FastAPI server locally on your laptop or deploy it as a simple container next to your other microservices. To make the setup a bit more production-ready, you can hook up the service to your standard monitoring tools like Datadog, or, if the use case warrants it, invest in a specialized model monitoring tool.

Besides serving lower-complexity models like XGBoost, this approach can work with more complex models too. Our benchmark below includes FastAPI with the fast vLLM library for straightforward LLM serving.

Advanced model serving

The limitations of the above simple approach become evident if your application requires very low latency, high scale, or high availability:

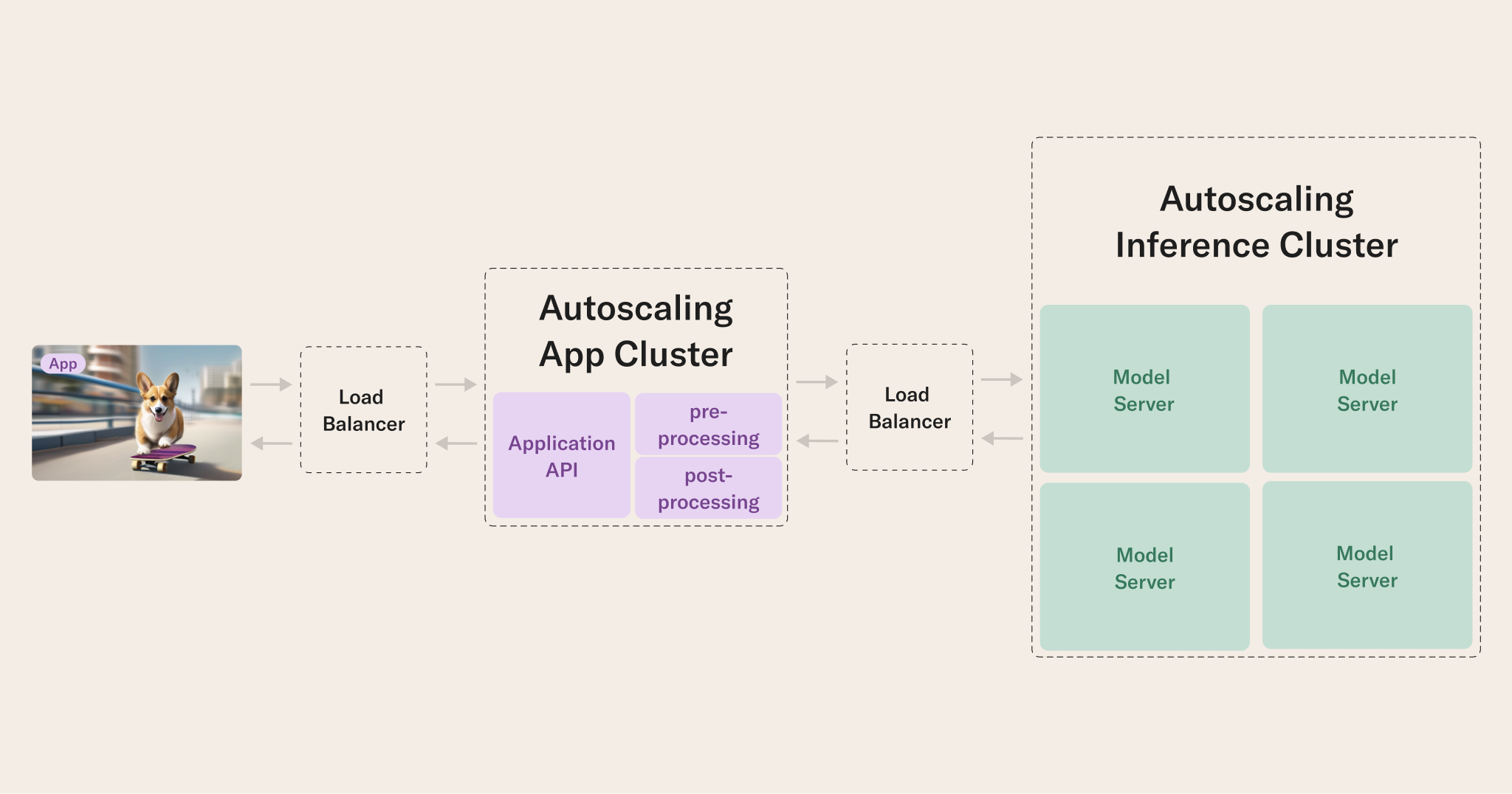

- To address high availability, you want to deploy multiple instances of the service behind a load balancer.

- To address a high number of requests per second, you can auto-scale the service based on demand. It may be beneficial to decouple the model inference layer (the green boxes), which may require beefier instances including GPUs, from the application layer (the purple boxes).

- To address low latency, you may want to push more processing to high-performance backends instead of Python, which has inherent performance limitations.
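The first two points can be illustrated with a toy round-robin balancer that skips unhealthy replicas, plus a simplified autoscaling rule that sizes the fleet from the request rate. Both are sketches under stated assumptions: real load balancers and autoscalers (e.g. in Kubernetes) are far more involved, and the per-replica capacity is a hypothetical number you would have to measure for your model. The default minimum of two replicas reflects the high-availability goal.

```python
import itertools
import math


class RoundRobinBalancer:
    """Cycle through replicas, skipping ones marked unhealthy."""

    def __init__(self, replicas: list[str]):
        self.replicas = list(replicas)
        self.healthy = set(self.replicas)
        self._cycle = itertools.cycle(self.replicas)

    def mark_unhealthy(self, replica: str) -> None:
        self.healthy.discard(replica)

    def mark_healthy(self, replica: str) -> None:
        if replica in self.replicas:
            self.healthy.add(replica)

    def pick(self) -> str:
        # At most one full pass over the replicas before giving up.
        for _ in range(len(self.replicas)):
            replica = next(self._cycle)
            if replica in self.healthy:
                return replica
        raise RuntimeError("no healthy replicas")


def desired_replicas(current_rps: float, rps_per_replica: float,
                     min_replicas: int = 2, max_replicas: int = 20) -> int:
    """Replicas needed to serve current_rps, clamped to [min, max]."""
    needed = math.ceil(current_rps / rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

For example, at 950 requests/sec with an assumed capacity of 100 requests/sec per replica, `desired_replicas(950, 100)` asks for 10 replicas.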

All these points imply significant engineering effort and operational complexity, as highlighted in this diagram:

The extra effort may be justified if your use case demands it. An advanced infrastructure stack like this can also help rein in the proliferation of many bespoke models and microservices. One flexible stack can be simpler to develop and operate than a myriad of separate ones, as exemplified by these model consolidation efforts by Netflix and Instacart.

Advanced stacks in practice

As an example, the NVIDIA Triton Inference Server provides a high-performance, battle-hardened solution to the green boxes in the diagram. We have been working closely with NVIDIA to explore the Triton-Metaflow integration, as it is one of the most mature and versatile solutions for advanced model serving.

NVIDIA Triton Inference Server provides a separate inference frontend, which handles incoming requests, and a model-specific backend that is responsible for inference. You can use the stack with tree-based models, a custom backend, PyTorch, TensorFlow, ONNX, or vLLM. Additionally, NVIDIA provides another solution called TensorRT-LLM for high-performance LLM inference, which can be paired with the inference server for state-of-the-art request throughput.

For LLM use cases specifically, another solution with a similar architecture is OpenLLM. It is easy to get started with, supports vLLM as a backend, comes with a long list of supported models, and supports state-of-the-art optimizations like PEFT and LoRA.

Example: A chatbot powered by a custom LLM

Let’s consider a fun, real-world example to illustrate the decision tree in practice: a chatbot driven by a custom LLM. We can use Metaflow to fine-tune an open-source LLM, but how should we deploy it? Let’s walk down the decision tree:

- Do we know inputs in advance? No, the user may type anything.

- Can it take minutes to produce responses? No, the bot needs to reply in a few seconds.

- Are we comfortable with operating the service by ourselves? Maybe.

- Do we require large-scale or low latency? Hopefully one day!
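The decision tree, as walked through above, can be written down as a small function. This encoding is our own hedged sketch: the option labels are hypothetical, and `None` stands for an undecided answer such as "Maybe" or "Hopefully one day!", in which case all compatible options are kept.

```python
from typing import Optional


def deployment_options(
    inputs_known_in_advance: bool,
    can_wait_minutes: bool,
    operate_in_house: Optional[bool],
    needs_scale_or_low_latency: Optional[bool],
) -> list[str]:
    """Walk the decision tree; None means 'undecided' and keeps both branches."""
    if inputs_known_in_advance:
        return ["precompute in a batch flow, serve from a cache"]
    if can_wait_minutes:
        return ["event-triggered flow that pushes results to a cache"]
    options = []
    if operate_in_house is not True:       # no, or undecided
        options.append("managed model serving")
    if operate_in_house is not False:      # yes, or undecided
        if needs_scale_or_low_latency is not True:
            options.append("easy self-hosted serving")
        if needs_scale_or_low_latency is not False:
            options.append("advanced self-hosted serving")
    return options


# The chatbot: unknown inputs, replies needed in seconds, "Maybe", "Hopefully one day!"
print(deployment_options(False, False, None, None))
```

For the chatbot, the function keeps all three online-serving options, which mirrors the comparison of managed, easy self-hosted, and advanced self-hosted approaches that follows.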

Regarding model complexity, LLMs are at the extremely complex end of the spectrum. Correspondingly, the approaches for serving open-source LLMs are an active topic of research and development, so one can expect the solutions to evolve quickly too. Take this example as an illustration of the decision-making rubric rather than a prescriptive approach to LLM serving.

We compare three approaches, reflecting the fact that we are not sure whether the benefits of operating an in-house solution - easy or advanced - justify the extra effort:

- Managed solutions: Amazon SageMaker and Anyscale Endpoints.

- An easy self-hosted approach: FastAPI and OpenLLM.

- An advanced self-hosted approach: NVIDIA Triton Inference Server with various optimizations.

Measuring performance

Our study measures the time it takes to make requests with a batch size of one. While it is possible to analyze the throughput vs latency frontier, we focus on measuring the latency of single requests and using this result to compute and compare the token throughput (tokens per second) across setups.

Note that the choice of metric reflects our use case: We want to provide a snappy user experience to every user, but we haven’t reached a scale where throughput would matter. To find more complex examples and different perspectives on these measures, we suggest this post from Perplexity.ai and this study from Run.AI.

Each trial in the experimental results of this post has the following characteristics:

- Run on a single RTX 6000 Ada GPU card.

- Use meta-llama/Llama-2-7b-hf, with a common set of input prompts.

- Max new output tokens are limited to 200.

- Batch size = 1, with eight different requests, over which we average our results. We always send one request prior to the eight requests to ensure that the inference server is “warmed up”.

- We measure the time it takes to return the input + output tokens, averaged over the eight requests.

We focus on measuring tokens per second, as it is a determining factor of the response latency of LLM calls.
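A measurement loop along these lines can be sketched as follows. It assumes a hypothetical `generate(prompt, max_new_tokens)` client that returns the generated tokens, and it counts generated tokens only, which is our assumption rather than a detail of the study. It sends one unmeasured warm-up request, then times each prompt sequentially with batch size 1. Injecting the clock keeps the function testable.

```python
import statistics
import time
from typing import Callable


def benchmark(
    generate: Callable[[str, int], list[str]],
    prompts: list[str],
    max_new_tokens: int = 200,
    clock: Callable[[], float] = time.perf_counter,
) -> dict[str, float]:
    """Sequential (batch size 1) latency and token-throughput measurement."""
    generate(prompts[0], max_new_tokens)  # warm-up request, not measured
    latencies, token_counts = [], []
    for prompt in prompts:                # one request at a time
        start = clock()
        tokens = generate(prompt, max_new_tokens)
        latencies.append(clock() - start)
        token_counts.append(len(tokens))  # generated tokens only (assumption)
    mean_latency = statistics.mean(latencies)
    return {
        "mean_latency_s": mean_latency,
        "tokens_per_s": statistics.mean(token_counts) / mean_latency,
    }


def fake_generate(prompt: str, max_new_tokens: int) -> list[str]:
    """Hypothetical stand-in for an LLM endpoint that always hits the token cap."""
    return ["tok"] * max_new_tokens


if __name__ == "__main__":
    prompts = [f"prompt {i}" for i in range(8)]  # eight requests, as in the study
    print(benchmark(fake_generate, prompts))
```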

Results

Without further ado, here are the results - the higher the bar, the higher the performance:

It would be tempting to interpret the results as a simple ranking of the solutions, but the reality is more nuanced:

- SageMaker is probably the easiest managed solution to get started with. While it may not provide the highest performance, convenience may be a decisive factor.

- Another managed solution, Anyscale, provides performance that is competitive with all straightforward self-hosted options.

- In this simple setup, there isn’t a significant performance difference between using FastAPI, OpenLLM, or Triton to front requests to a vLLM backend. Triton with TensorRT leads by a small margin in the unoptimized case.

- Applying optimization techniques, such as various forms of weight quantization, can yield significant performance improvements when using an advanced stack like Triton.

If you are curious, here’s how we got the highest performing results: In the two top-performing cases, we used 4-bit quantization and Activation-aware Weight Quantization (AWQ), which both the TensorRT-LLM and vLLM backends support. In the third case, we used the INT8 weight-only quantization that is built into TensorRT-LLM.
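For intuition on why weight quantization helps, the sketch below shows plain symmetric round-to-nearest INT8 quantization of one weight row, together with back-of-the-envelope weight memory for a model of roughly 7B parameters. This is a simplified illustration, not AWQ and not TensorRT-LLM's implementation: AWQ additionally uses activation statistics to protect the most salient weights. Fewer bytes per weight means less data to move from GPU memory for each generated token, which is generally where single-request LLM decoding spends its time.

```python
def quantize_int8(row: list[float]) -> tuple[list[int], float]:
    """Symmetric round-to-nearest INT8 quantization with one scale per row."""
    max_abs = max(abs(w) for w in row)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in row]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate reconstruction; the error per weight is at most scale / 2."""
    return [v * scale for v in q]


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone; ignores scales, activations, KV cache."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    q, scale = quantize_int8([0.5, -1.27, 0.0])
    print(q)  # [50, -127, 0]
    for bits in (16, 8, 4):
        print(bits, weight_memory_gb(7e9, bits))  # 14.0, 7.0, 3.5 GB
```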

In a sense, the results are unsurprising: If you are motivated to spend time optimizing your model serving stack, a versatile tool like NVIDIA Triton Inference Server can yield great results. Or, you can enjoy good enough performance with various stacks, including managed ones. Your requirements determine the most suitable approach.

Conclusion

Models, or ML/AI workflows in general, don’t produce value in isolation. To benefit from this sophisticated machinery, it needs to be connected to its surroundings. This connection - model deployment - can happen in many ways, the exact path of which depends on the specifics of the application.

Instead of treating one model serving approach as a universal hammer that makes everything look like nails, it is beneficial to have an easy-to-use toolbox of various deployment patterns at your disposal. If you have any questions or feedback about model deployments, inference, or LLM serving, join us and over 3000 other ML and AI developers on the Metaflow community Slack!