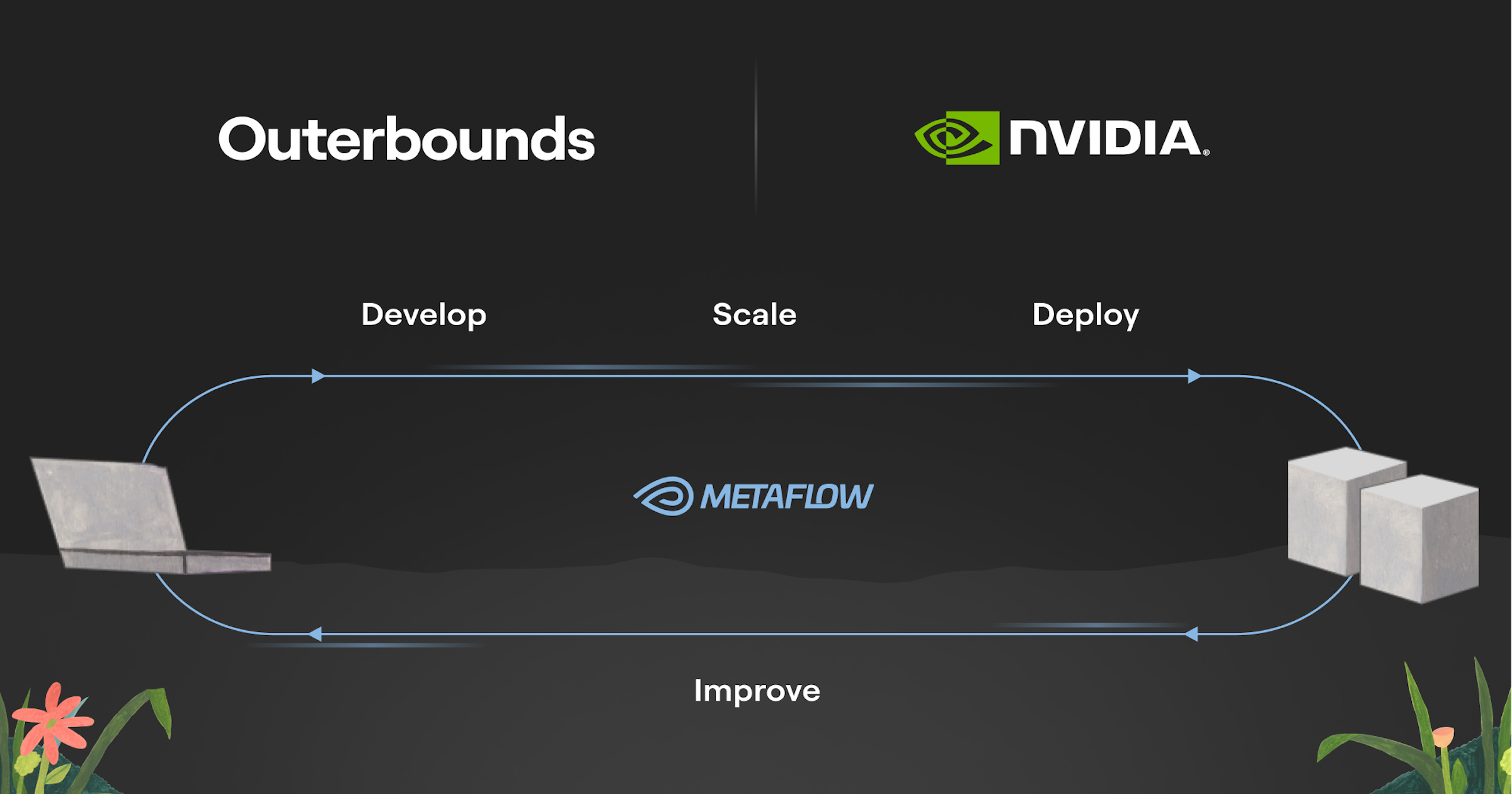

Outerbounds and NVIDIA are collaborating to make NVIDIA’s inference stack, NVIDIA Triton Inference Server, more easily accessible for a wide variety of ML and AI use cases. Outerbounds develops Metaflow, a popular full-stack, developer-friendly framework for ML and AI, which has its roots at Netflix. The combination of the two open-source frameworks lets developers build ML/AI-powered models and systems quickly and deploy them as high-performance, production-grade services, as shown in this article. This post was originally published on the NVIDIA blog.

Challenges of production-grade ML

There are many ways to deploy ML models to production. Sometimes, a model is run once per day to refresh forecasts in a database. Sometimes, it powers a small-scale but critical decision-making dashboard or speech-to-text on a mobile device. These days, the model can also be a custom large language model (LLM) backing a novel AI-driven product experience.

Often, the model is exposed to its environment through an API endpoint with microservices, enabling the model to be queried in real time. While this may sound straightforward, as there are plenty of frameworks for building and deploying microservices in general, serving models in a serious production setting is surprisingly non-trivial.

Consider the following typical challenges (Table 1).

| Model training | Model deployment | Infrastructure |
|---|---|---|
| Can you train and deploy models continuously without human intervention? | Can the deployment handle various types of models you want to use? | - Is the deployment highly available enough to support the SLA target?<br>- Does the deployment integrate with your existing infrastructure and policies?<br>- Does the infrastructure provide secure access to sensitive datasets and models? |
| Can you develop new models easily, test deployments locally, and experiment confidently? | Can the model produce responses quickly enough to support the desired product experience? | - Does the deployment utilize hardware resources efficiently?<br>- Can you deploy models in a cost-efficient manner?<br>- Can infrastructure scale to meet compute needs? Does cost go to zero when it isn’t being used? |
| Can you manage features during development and deployment consistently? | What happens if the responses are bad? Can you trace the lineage and the model that produced them? | - Can you monitor the requests and responses easily?<br>- Can the deployment scale to enough requests per second?<br>- Can users tune performance knobs, such as GPU card types and amounts, to reduce TCO? |

From prototype to production

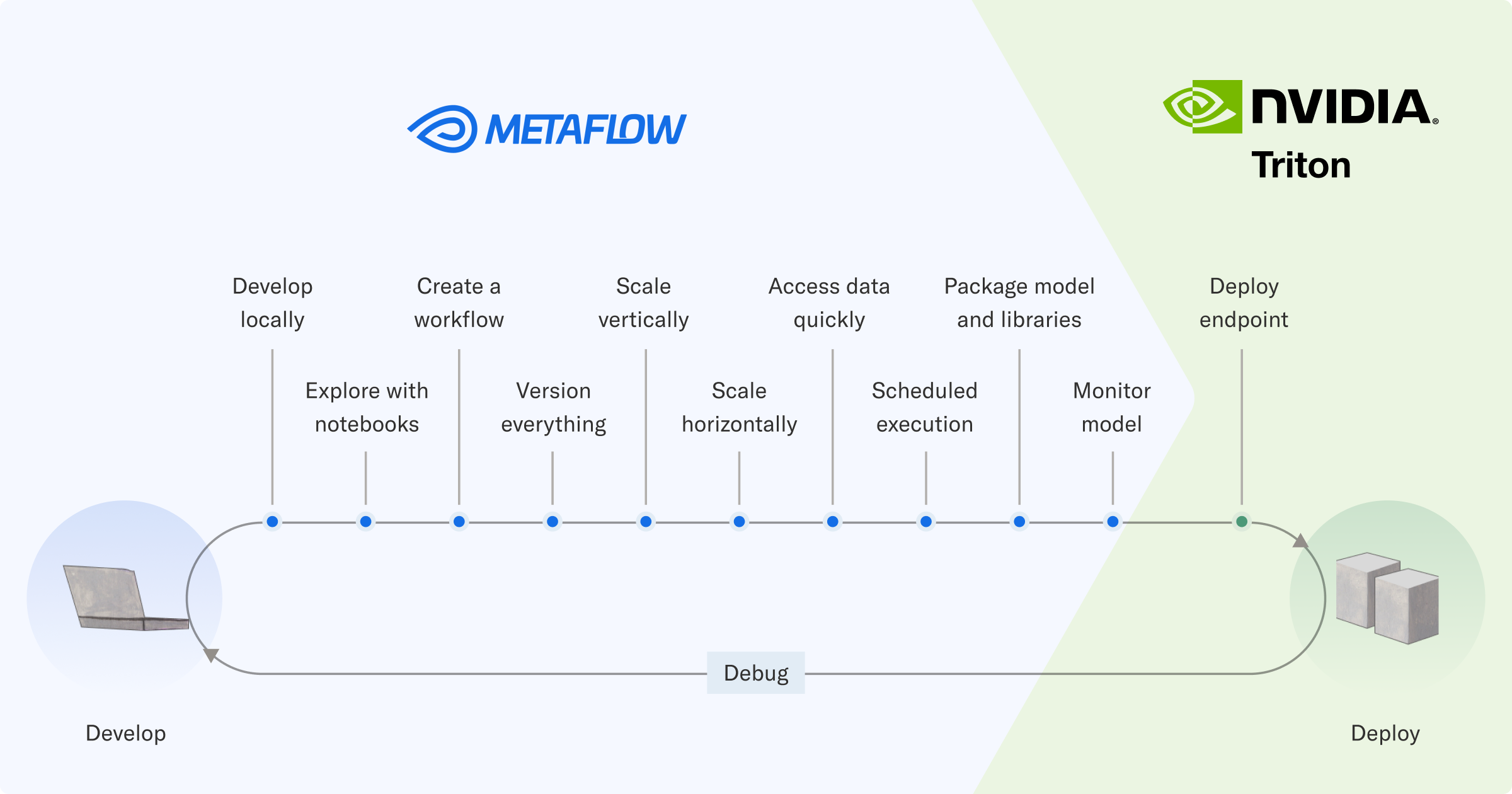

To address these challenges holistically, consider the full lifecycle of an ML system from the early stages of development to the deployment (and back).

While you could cover the journey by adopting a separate tool for each step, a smoother developer experience and a faster time to deployment can be achieved by providing a consistent API that connects the dots.

With this vision in mind, Netflix started developing a Python library called Metaflow in 2017, which was open-sourced in 2019. Since then, it has been adopted by thousands of leading ML and AI organizations across industries from real estate and drones to gaming and healthcare.

Metaflow covers all the concerns in the first part of the journey: how to develop production-grade reactive ML workflows, access data and train models easily at scale, and keep track of all the work comprehensively.

Today, you can adopt Metaflow as open source, or have it deployed in your cloud account with Outerbounds, a fully managed ML and AI platform, which layers additional security, scalability, and developer productivity features on top of the open-source package.

With Metaflow, you can address the first three challenges related to developing and producing models. To deploy the models for real-time inference, you need a model serving stack. That’s where NVIDIA Triton Inference Server comes into play.

NVIDIA Triton Inference Server is an open-source model serving framework developed by NVIDIA. It supports a wide variety of models, which it can handle efficiently on both CPUs and GPUs.

Outerbounds and NVIDIA are collaborating to make the NVIDIA inference stack more easily accessible for a wide variety of ML and AI use cases. The combination of the two open-source frameworks enables you to develop machine learning and AI-powered models and systems quickly and deploy them as high-performance, production-grade services.

Deploy on an NVIDIA Triton Inference Server

To support production AI at an enterprise level, NVIDIA Triton Inference Server is included in the NVIDIA AI Enterprise software platform, which offers enterprise-grade security, support, and stability.

While a number of model serving frameworks are available, both as open-source and as managed services, NVIDIA Triton Inference Server stands out for several reasons:

- It is highly performant, because it is implemented in C++ and uses GPUs efficiently. This makes it a great choice for latency- and throughput-sensitive applications.

- It is versatile, capable of handling many different model families thanks to its pluggable backends.

- It is tested and tempered, thanks to years of development and large-scale usage driven by NVIDIA.

These features make NVIDIA Triton Inference Server a particularly capable model serving stack to which workflows can push trained models.

Metaflow helps you prototype models and the workflows around them and test them at scale, while keeping track of all the work performed. When the workflow shows enough promise, it is straightforward to integrate it with surrounding software systems and orchestrate it reliably in production.

While Metaflow exposes all the necessary functionality through a simple, developer-focused Python API, this is powered by a thick stack of infrastructure. The stack integrates with data stores like Amazon S3, facilitates large-scale compute on Kubernetes, and uses production-grade workflow orchestrators like Argo Workflows.

When models have been trained successfully, the responsibility shifts to NVIDIA Triton Inference Server. Similar to the training infrastructure, the inference side requires a surprisingly non-trivial stack of infrastructure.

Infrastructure for model serving

It is not too hard to implement a simple service that exposes a simple model, such as a logistic regression model, over HTTPS. A basic version of something like this is doable in a few hundred lines of Python using a framework like FastAPI.

However, an improvised model serving solution like this is not particularly performant: Python is an expressive language, but it does not excel at processing requests quickly. It is also not scalable without extra infrastructure, because a single FastAPI process can handle only so many requests per second. Finally, the solution is not versatile out of the box. Replacing the logistic regression model with a more sophisticated deep regression model, for example, typically requires changes to the serving code.

You could try to address these shortcomings incrementally. But as the solution grows more complex, so does the surface area for bugs, security issues, and other glitches, which motivates adopting a more mature, battle-tested solution.

NVIDIA Triton Inference Server addresses these challenges by deconstructing the stack into the following key components.

- A frontend that is responsible for receiving requests over HTTP or gRPC and routing them to the backend.

- One or more backend layers that are responsible for interacting with a particular model family.

NVIDIA Triton Inference Server supports pluggable backends, implementations of which exist for ONNX, Python-native models, tree-based models, LLMs, and a number of other model types. This makes it possible to serve a wide variety of models with one stack.

The combination of a high-performance frontend, capable of handling tens of thousands of requests per second, and a backend optimized for a certain model type, provides a low-latency path from request to response. With NVIDIA Triton Inference Server, the whole request-handling path can stay in native code, reducing request latency and increasing throughput relative to a Python-based model serving solution.
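To make the frontend's role concrete, here is a minimal sketch of the JSON body a client would send over HTTP. Triton's HTTP frontend follows the KServe v2 inference protocol, in which a request is POSTed to `/v2/models/<model_name>/infer`. The model name `fraud-classifier`, the tensor name `input__0`, the host, and the feature values are illustrative assumptions, not taken from the example repository. The sketch only builds the request and does not send it.

```python
import json


def build_infer_request(rows, input_name="input__0"):
    """Build a KServe v2 style inference request body for a batch of float rows."""
    if not rows or any(len(r) != len(rows[0]) for r in rows):
        raise ValueError("rows must be a non-empty, rectangular list of lists")
    return {
        "inputs": [
            {
                "name": input_name,  # assumed tensor name
                "shape": [len(rows), len(rows[0])],
                "datatype": "FP32",
                # Tensor data is sent flattened in row-major order.
                "data": [float(x) for row in rows for x in row],
            }
        ]
    }


def infer_url(host, model_name):
    """Return the v2 inference endpoint for a model."""
    return f"http://{host}/v2/models/{model_name}/infer"


# Hypothetical host, model name, and feature values.
body = build_infer_request([[0.1, 2.0, 3.5], [1.2, 0.0, 4.1]])
url = infer_url("localhost:8000", "fraud-classifier")
print(url)
print(json.dumps(body, indent=2))
```

Any HTTP client can POST this body to the URL. The backend that handles the request is chosen by the model's configuration on the server, not by the client.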

Low-level server optimizations are important for applications that leverage custom LLMs, which require serving large deep learning models with low-latency inferences. To further optimize LLM inference, NVIDIA has introduced TensorRT-LLM. TensorRT-LLM is an SDK that makes it significantly easier for Python developers to build production-quality LLM servers. TensorRT-LLM works out-of-the-box as a backend with NVIDIA Triton Inference Server.

No matter the scale or server backend, an individual NVIDIA Triton Inference Server instance is typically deployed with a container orchestrator like Kubernetes. A separate layer, a deployment orchestrator, is needed to manage the instances, autoscale the cluster on demand, and handle model lifecycle, request routing, and the other infrastructure concerns listed in the last two rows of Table 1.

Integrating the training and the serving stacks

While both training and serving require infrastructure stacks of their own, you want to align them tightly for a few reasons.

First, deploying a model should be a routine operation, which shouldn’t require many extra lines of code or worse, manual effort.

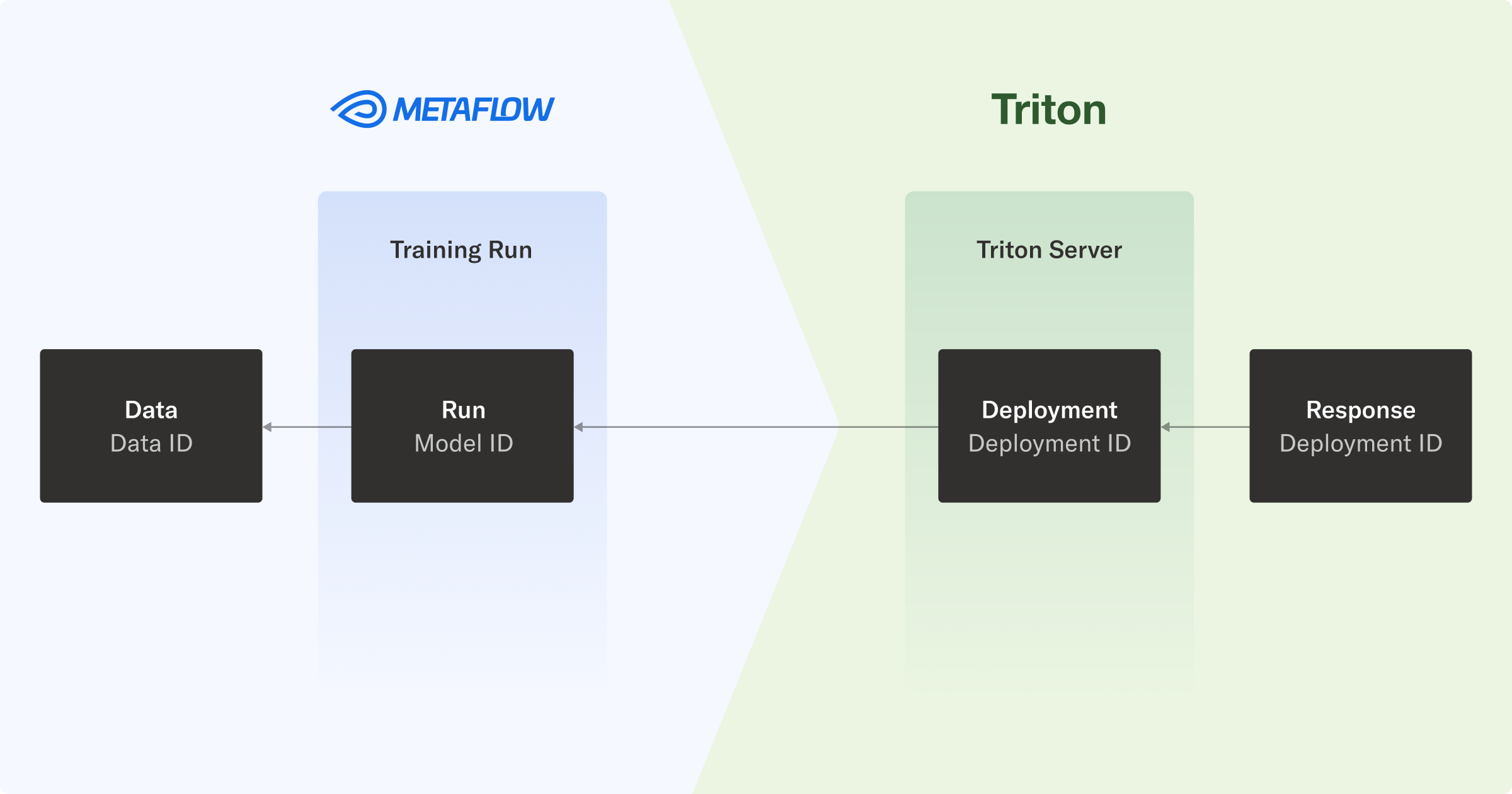

Second, you want to maintain a full lineage of deployed models, so you can understand the whole chain from raw data and preprocessing to trained models and finally deployed models producing real-time inferences. This feature comes in handy when dealing with A/B experiments, deploying hundreds of parallel models, or debugging responses from an endpoint.

In the workflow guiding this post, you deploy models to NVIDIA Triton Inference Server in a manner that carries the version information across the stacks. This way, you can backtrack inferences all the way to the raw data.

End-to-end lineage and debuggability mean that when an endpoint hosting a model responds to a request, you can trace the prediction back to the workflow that produced the model and the data it was trained on.

In practice, every response contains an NVIDIA Triton Inference Server deployment ID, which maps to a Metaflow run ID, which in turn enables you to inspect the data used to produce the model.
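As a rough illustration of that mapping, the sketch below recovers a Metaflow run identifier from the `model_name` field of an inference response. It assumes a hypothetical naming scheme, `<flow_name>-<run_id>`, for deployed models. The actual scheme used by the starter pack may differ, and the response shown is made up.

```python
def run_pathspec_from_response(response):
    """Recover 'FlowName/run_id' from a response's model_name field.

    Assumes the hypothetical naming scheme '<flow_name>-<run_id>'.
    """
    model_name = response["model_name"]
    flow_name, sep, run_id = model_name.rpartition("-")
    if not sep or not flow_name or not run_id:
        raise ValueError(f"unexpected model name: {model_name!r}")
    return f"{flow_name}/{run_id}"


# Made-up response in the shape of a KServe v2 inference reply.
response = {
    "model_name": "FraudClassifierTreeSelection-1701234567",
    "model_version": "1",
    "outputs": [
        {"name": "output__0", "datatype": "FP32", "shape": [1], "data": [0.93]}
    ],
}

pathspec = run_pathspec_from_response(response)
print(pathspec)
# With Metaflow installed, passing this pathspec to Run() from the
# Metaflow Client API gives access to the artifacts of that run.
```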

Example: Train and serve a tree-based model

To show the end-to-end workflow in action, here’s a practical example. You can follow along using the /triton-metaflow-starter-pack GitHub repo.

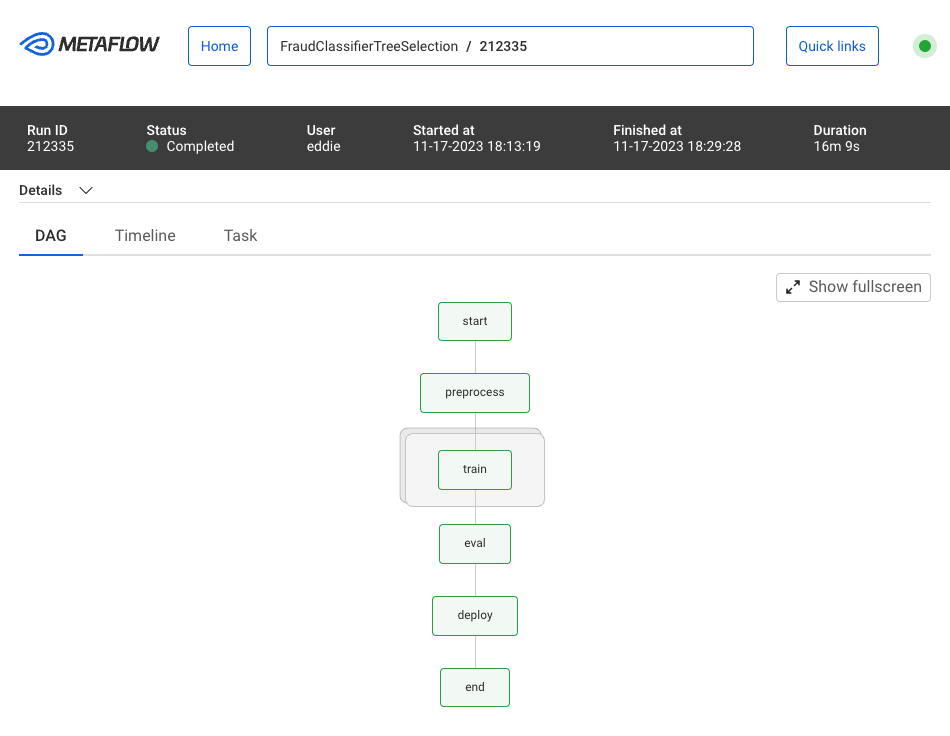

The example workflow addresses a fraud detection problem, a classification task for predicting loan defaults. It trains many Scikit-learn models in parallel, selects the best one, and pushes the model to a cloud-based model registry used by NVIDIA Triton Inference Server.

The Metaflow UI lets you monitor and visualize your workflow runs. It organizes workflows by run ID and tracks all artifacts that the runs produce.

To give you an idea what the workflow code looks like, the following code example defines the first three steps: start, preprocess, and train.

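```python
from metaflow import FlowSpec, batch, card, step


class FraudClassifierTreeSelection(FlowSpec):
    # Helper methods such as compute_features, setup_model_grid, and smote_pipe,
    # as well as the remaining steps, are omitted from this excerpt.

    @step
    def start(self):
        self.next(self.preprocess)

    @batch(cpu=1, memory=8000)
    @card
    @step
    def preprocess(self):
        self.compute_features()
        self.setup_model_grid(model_list=["Random Forest"])
        # Fan out: one train task per entry in self.model_grid.
        self.next(self.train, foreach="model_grid")

    @batch(cpu=4, memory=16000)
    @card
    @step
    def train(self):
        # Each parallel task receives one (name, grid) pair as self.input.
        self.model_name, self.model_grid = self.input
        self.best_model = self.smote_pipe(
            self.model_grid, self.X_train_full, self.y_train_full
        )
        self.next(self.eval)
```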

The train step executes a number of parallel training tasks using Metaflow’s foreach construct. In this case, you execute the tasks in the cloud using AWS Batch, by specifying a @batch decorator. Run the workflow manually with the following command:

```bash
python train/flow.py run \
    --model-repo s3://outerbounds-datasets/triton/tree-models/
```

When Metaflow is deployed in your environment, you don’t have to write any other configuration or Dockerfiles besides writing and executing this workflow. The workflows can be deployed using a single command to run on a schedule or be triggered by events in the broader system.
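Conceptually, the foreach in `preprocess` fans out one `train` task per entry in `model_grid`, and a downstream step joins the results and keeps the best model. The plain-Python sketch below mimics that fan-out and join with `concurrent.futures` instead of Metaflow, so it runs without any infrastructure. It is an analogy, not Metaflow's API: the grid entries, the `train_one` function, and its scores are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical grid: (model name, hyperparameters) pairs, one per parallel task.
model_grid = [
    ("Random Forest", {"n_estimators": 50}),
    ("Random Forest", {"n_estimators": 100}),
    ("Random Forest", {"n_estimators": 200}),
]


def train_one(item):
    """Stand-in for a train task: returns a fake validation score."""
    name, params = item
    score = 1.0 - 1.0 / params["n_estimators"]  # placeholder, not a real metric
    return {"name": name, "params": params, "score": score}


# Fan out: one task per grid entry, similar in spirit to foreach="model_grid".
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(train_one, model_grid))

# Join: pick the best candidate, as a join step would.
best = max(results, key=lambda r: r["score"])
print(best["params"], round(best["score"], 3))
```

In the real workflow, each task runs in its own container on AWS Batch rather than in a local thread, and Metaflow persists the artifacts of every task so the join step can compare them.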

Preparing models for NVIDIA Triton Inference Server

The deploy step of the workflow takes care of preparing a model for deployment with NVIDIA Triton Inference Server. This happens through the following steps:

- Set the backend and other properties in NVIDIA Triton Inference Server’s model configuration. Some of these properties depend on what happens during training, so you dynamically create the file and include it in the artifact package sent to cloud storage during model training runs.

- NVIDIA Triton Inference Server also needs the model representation itself. Because you’re concerned about efficient serving, serialize the model using Treelite, a pattern that works with Scikit-learn tree models, XGBoost, and LightGBM. Place the resulting checkpoint.tl file in the model repository, and NVIDIA Triton Inference Server knows what to do from there.

- Serialized model files are pushed to Amazon S3 using Metaflow’s built-in optimized Amazon S3 client, naming them based on a unique workflow run ID that can be used to access all information used to train the model. This way, you maintain a full lineage from inference responses back to data processing and training workflows.
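The first and third steps above can be sketched as follows: one function renders a model configuration for the FIL backend, and another derives run-scoped locations inside a Triton model repository. The feature count, `max_batch_size`, the `output_class` parameter, the bucket name, and the `<flow_name>-<run_id>` model name are assumptions for illustration. Check them against the FIL backend documentation and the starter pack before relying on them.

```python
def render_fil_config(model_name, num_features, max_batch_size=8192):
    """Render a Triton config.pbtxt for a Treelite checkpoint served by FIL."""
    return f'''name: "{model_name}"
backend: "fil"
max_batch_size: {max_batch_size}
input [
  {{ name: "input__0" data_type: TYPE_FP32 dims: [ {num_features} ] }}
]
output [
  {{ name: "output__0" data_type: TYPE_FP32 dims: [ 1 ] }}
]
instance_group [{{ kind: KIND_CPU }}]
parameters [
  {{ key: "model_type" value: {{ string_value: "treelite_checkpoint" }} }},
  {{ key: "output_class" value: {{ string_value: "true" }} }}
]
'''


def repo_keys(repo_root, flow_name, run_id, version=1):
    """Return where the config and checkpoint go in a Triton model repository."""
    model_name = f"{flow_name}-{run_id}"  # hypothetical naming scheme
    base = f"{repo_root.rstrip('/')}/{model_name}"
    return {
        "config": f"{base}/config.pbtxt",
        "model": f"{base}/{version}/checkpoint.tl",
    }


# Hypothetical bucket, run ID, and feature count.
keys = repo_keys(
    "s3://my-bucket/triton/tree-models/", "FraudClassifierTreeSelection", "1701234567"
)
config_text = render_fil_config(
    "FraudClassifierTreeSelection-1701234567", num_features=30
)
print(keys["config"])
print(keys["model"])
print(config_text)
```

Rendering the configuration from values known only after training, such as the number of features, is what makes it natural to generate the file inside the workflow rather than keep it as a static asset.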

NVIDIA Triton Inference Server performance

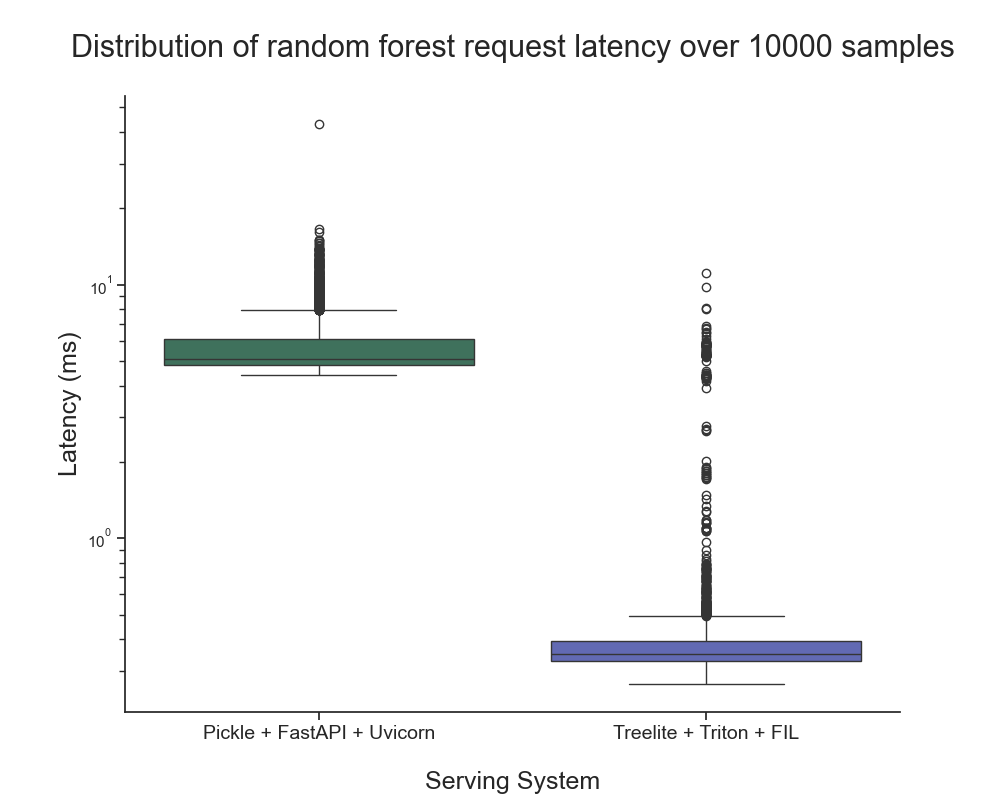

For serving tree models, NVIDIA Triton Inference Server works best with the FIL backend. We ran a simple benchmark to see how the inference latency compares to a baseline Python-based API server using FastAPI as a frontend and Uvicorn as a backend. You can reproduce the results using the triton-metaflow-starter-pack GitHub repo.

The response time that we observed (not counting the network overhead) was 0.44 ms ± 0.64 ms for NVIDIA Triton Inference Server compared to 5.15 ms ± 0.9 ms for FastAPI. That is roughly a 12x difference in mean latency, more than an order of magnitude.

The benchmark was run on a CoreWeave server with eight Xeon cores, which suggests that NVIDIA Triton Inference Server can provide substantial speedups on CPU-only hardware, and not only on NVIDIA GPUs.

We plan to compare more interesting server combinations, explore NVIDIA Triton Inference Server optimizations such as dynamic request batching, and extend to the real-world complexity of network overhead.
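For readers who want to collect comparable numbers, a latency measurement of this kind can be sketched as below. This is not the benchmark script from the repository. It is a minimal harness that times a callable repeatedly and reports the mean and standard deviation in milliseconds. `fake_predict` is a placeholder workload to be replaced with a real client call to the server under test.

```python
import statistics
import time


def measure_latency_ms(fn, n_requests=200, warmup=20):
    """Call fn repeatedly and return (mean, stdev) of latency in milliseconds."""
    for _ in range(warmup):  # warm caches before timing
        fn()
    samples = []
    for _ in range(n_requests):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples), statistics.stdev(samples)


def fake_predict():
    # Placeholder workload; swap in a real client call to the server under test.
    return sum(i * i for i in range(1000))


mean_ms, std_ms = measure_latency_ms(fake_predict)
print(f"{mean_ms:.3f} ms ± {std_ms:.3f} ms")

# Ratio of the mean latencies reported above (FastAPI / Triton).
speedup = 5.15 / 0.44
print(f"speedup: {speedup:.1f}x")
```

Note that the reported standard deviation for Triton (0.64 ms) exceeds its mean (0.44 ms), which suggests a skewed latency distribution. Reporting percentiles alongside the mean would give a fuller picture.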

NVIDIA Triton Inference Server for production inference

Security, reliability, and enterprise-grade support are critical for production AI.

NVIDIA AI Enterprise is a production-ready inference platform that includes NVIDIA Triton Inference Server. It is designed to accelerate time-to-value with enterprise-grade security, support, and API stability to ensure performance and high availability.

You can use this inference platform to relieve the burden of maintaining and securing the complex software platform of AI.

Fine-tuning and serving an LLM

It's early 2024, so I’d be remiss to discuss model serving without mentioning LLMs. NVIDIA Triton Inference Server has extensive support for deep learning models and increasingly sophisticated support for serving LLMs. Yet, serving LLMs efficiently is a deep and quickly evolving topic.

To give you a small sample of serving LLMs with NVIDIA Triton Inference Server, I used this workflow to fine-tune a Llama 2 model from Hugging Face to produce QLoRA adapters, and served the resulting model with NVIDIA Triton Inference Server. For more information, see "Fine-tuning a Large Language Model using Metaflow, featuring LLaMA and LoRA" and "Better, Faster, Stronger LLM Fine-tuning."

As I did previously, I constructed the NVIDIA Triton Inference Server configuration on the fly after the workflow finishes model training. This example is meant as a proof-of-concept.

Next steps

Start experimenting with the end-to-end AI stack highlighted in this post in your own environment. For more information, see the following resources:

- Installing Metaflow

- NVIDIA Triton Inference Server is available with NVIDIA AI Enterprise under free 90-day software evaluation licenses.

Should you have any questions or feedback about this article, join thousands of ML/AI developers and engineers in the Metaflow community Slack!