Beginner Recommender Systems: Episode 2

1How to use DuckDB

Given our dataset is in a parquet file, in this lesson you will learn how to leverage an open-source, a hyper-performant database for analytics workloads called DuckDB. You can follow along with the code in this flow. DuckDB has become popular as a fast way to keep Pandas DataFrame interfaces while processing data faster and consuming less memory, as demonstrated in these public benchmarks and described in this post.

If you're familiar with basic SQL commands, all data preparation should be immediately understandable: DuckDB reads the parquet file (cleaned_spotify_dataset.parquet) in memory and produces the dataset we need. Remember, a playlist with:

- song_525, song_22, song_814, song_4255

needs to become:

- query: song_525, song_22, song_814

- label: song_4255

for our model. The label is the event we want our model to predict, so we can suggest songs the listener likes. Following standard best practices, we divide our sequences of songs into a train, validation and test dataset.

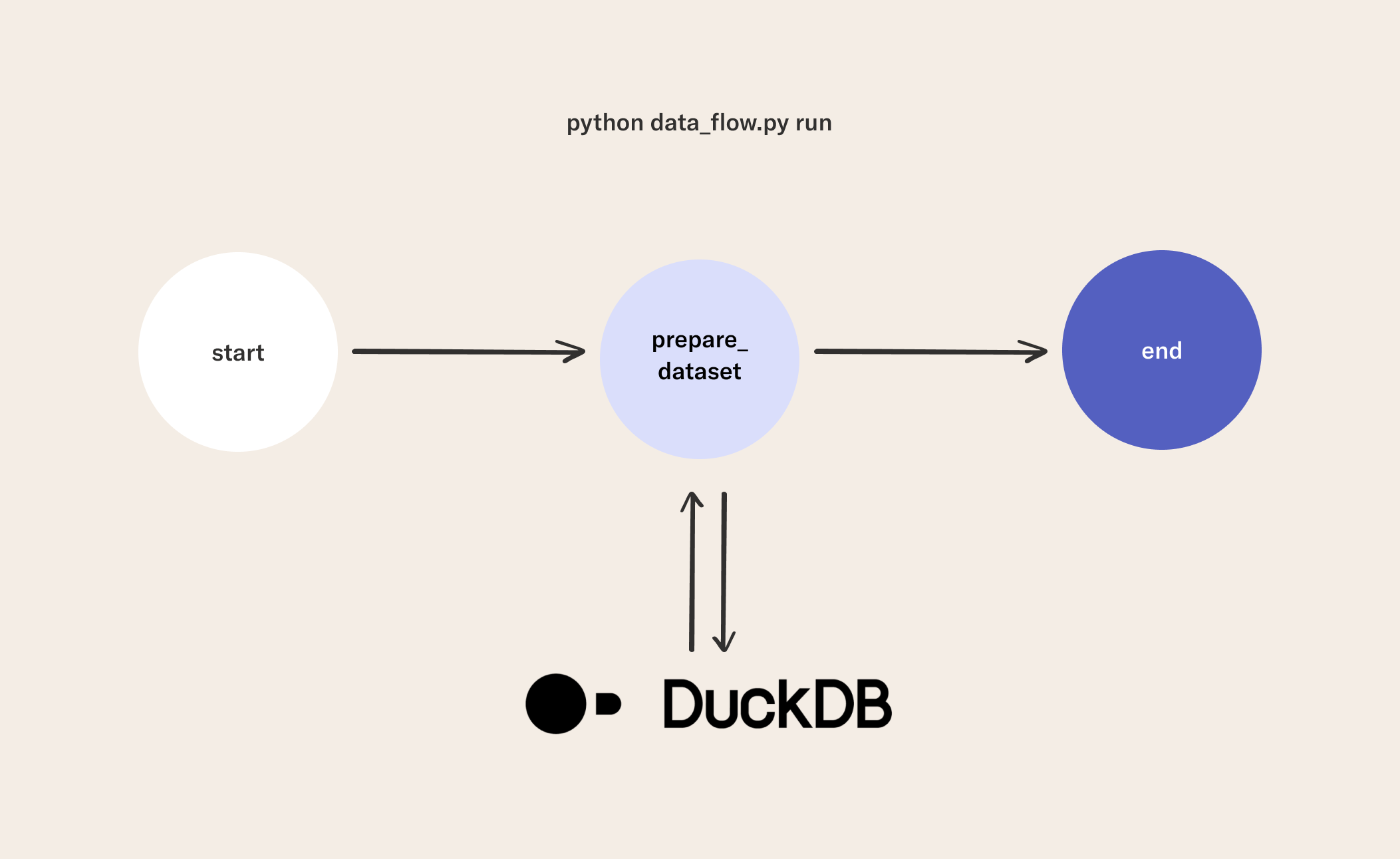

2Ingest and split data in a flow

In this flow, you will see how to prepare the dataset using DuckDB queries. The data is then split into train, validation, and test splits. In general, it is good practice to have a validation set for choosing the best hyperparameters and a held out test set to give an estimate of performance on unseen data. Later, you will extend this flow to evaluate, tune, and deploy a model to make real-time predictions.

You can also observe two more tricks in the PlaylistRecsFlow:

- we declare and use an

IS_DEVparameter to sample down the dataset in case we are iterating quickly in "Developer Mode". You can imagine running the flow on a few thousand lines initially just to get the logic correct, and then running it in "Evaluation Mode" without any sampling on the full dataset; - we make use of Metaflow's built-in versioning capability to version precisely the datasets we just created - this will be very important later on for debugging and inspection.

from metaflow import FlowSpec, step, S3, Parameter, current

class DataFlow(FlowSpec):

IS_DEV = Parameter(

name='is_dev',

help='Flag for dev development, with a smaller dataset',

default='1'

)

@step

def start(self):

self.next(self.prepare_dataset)

@step

def prepare_dataset(self):

"""

Get the data in the right shape by reading the parquet dataset

and using DuckDB SQL-based wrangling to quickly prepare the datasets for

training our Recommender System.

"""

import duckdb

import numpy as np

con = duckdb.connect(database=':memory:')

con.execute("""

CREATE TABLE playlists AS

SELECT *,

CONCAT (user_id, '-', playlist) as playlist_id,

CONCAT (artist, '|||', track) as track_id,

FROM 'cleaned_spotify_dataset.parquet'

;

""")

con.execute("SELECT * FROM playlists LIMIT 1;")

print(con.fetchone())

tables = ['row_id', 'user_id', 'track_id', 'playlist_id', 'artist']

for t in tables:

con.execute("SELECT COUNT(DISTINCT({})) FROM playlists;".format(t))

print("# of {}".format(t), con.fetchone()[0])

sampling_cmd = ''

if self.IS_DEV == '1':

print("Subsampling data, since this is DEV")

sampling_cmd = ' USING SAMPLE 10 PERCENT (bernoulli)'

dataset_query = """

SELECT * FROM

(

SELECT

playlist_id,

LIST(artist ORDER BY row_id ASC) as artist_sequence,

LIST(track_id ORDER BY row_id ASC) as track_sequence,

array_pop_back(LIST(track_id ORDER BY row_id ASC)) as track_test_x,

LIST(track_id ORDER BY row_id ASC)[-1] as track_test_y

FROM

playlists

GROUP BY playlist_id

HAVING len(track_sequence) > 2

)

{}

;

""".format(sampling_cmd)

con.execute(dataset_query)

df = con.fetch_df()

print("# rows: {}".format(len(df)))

print(df.iloc[0].tolist())

con.close()

train, validate, test = np.split(

df.sample(frac=1, random_state=42),

[int(.7 * len(df)), int(.9 * len(df))])

self.df_dataset = df

self.df_train = train

self.df_validate = validate

self.df_test = test

print("# testing rows: {}".format(len(self.df_test)))

self.next(self.end)

@step

def end(self):

pass

if __name__ == '__main__':

DataFlow()

3Run your flow

python data_flow.py run

In this lesson, you structured a data ingestion workflow using DuckDB and Metaflow. This pattern can be used on a wide variety of ML tasks to help you efficiently move between fast, local data storage and cloud resources. In the next lesson, you will build on this flow by building a predictive model to predict the next track to suggest.