If you are already using one of the major clouds, we have good news for you: Outerbounds is now available on the AWS, Azure, and GCP marketplaces. You can deploy it in your existing cloud account with just a few clicks, avoiding extra paperwork and vendor management. Just sign up to get started for free.

Thanks, but we are already running ML/AI on our cloud account

If you take a look at the companies using the open-source Metaflow, you'll recognize many names known for their long-term ML efforts, with Netflix being a prime example. These companies have been utilizing ML/AI in the cloud for years, some for more than a decade.

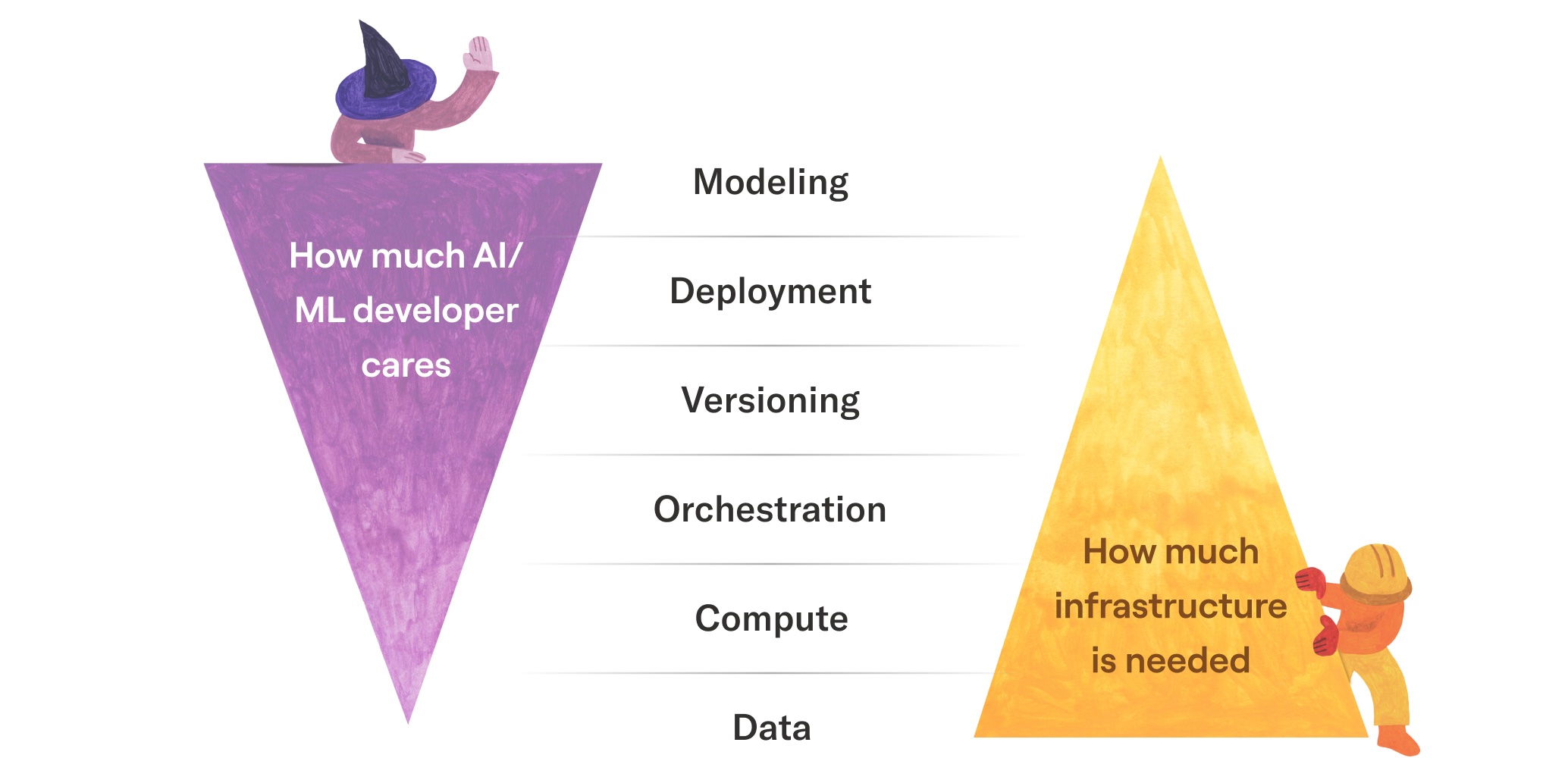

Metaflow was specifically designed to empower developers working on ML/AI projects to take advantage of cloud resources seamlessly. It is based on the understanding that cloud providers excel at offering basic utilities such as scalable storage, compute, and robust security primitives. However, a dedicated ML/AI platform is necessary atop this foundation for ML engineers and data scientists to develop systems quickly and independently.

Hacking together a few ML/AI prototypes in the cloud or getting started with the default services that cloud providers offer is not particularly challenging. However, as your efforts evolve, it's worth considering how easy it would be to build ten production-ready systems, or even a hundred, with your existing solution.

Eventually, organizations realize the need to transition to a more robust and productive environment that enables them to address a broader range of ML/AI use cases more efficiently. This situation is akin to how many DevOps and SRE teams eventually adopt Datadog for advanced observability, or how data teams adopt Snowflake for data warehousing. Both leverage foundational cloud resources to offer a maintenance-free, developer-friendly service.

What is a proper ML/AI platform?

Much has been written about MLOps and specific features related to ML/AI development, such as experiment tracking, model monitoring, feature stores, and, more recently, the rapidly evolving tools around Generative AI. Many of these features eventually prove useful to projects, but in our experience, it is beneficial to start by setting up a robust foundation.

Let's consider the foundational layers that all ML/AI projects end up including, sometimes by design and sometimes in a more ad-hoc manner:

Development environment - All ML/AI code needs to be developed somewhere. Your development environment can be a self-service laptop, a cloud-based notebook, or a modern IDE backed by cloud instances, such as Outerbounds Workstations.

Access data - All ML/AI projects need data. Starting with ad-hoc data access, such as reading CSV files, is not difficult, but eventually you will need to consider data governance, reproducibility, and the speed and ease of access.

Development APIs - There are many ways to create workflows, handle dependencies, and configure integrations, but constantly reinventing the basic scaffolding that all projects need and spending energy on complex abstractions is not productive. Addressing these concerns is the main reason why organizations choose Metaflow, which provides a consistent, developer-friendly set of APIs commonly needed by ML/AI projects.

Compute - Similar to data, all ML/AI projects need compute. Some need massive amounts of it, e.g., to train an LLM or to build models for thousands of customers. Leveraging inexpensive compute to make expensive human resources more productive is one of the best investments you can make.

Deploy - ML/AI projects are just cost centers if they are not connected to surrounding systems, products, and business processes. There are many ways to deploy models, but no matter what path you choose, deploying a model should be a routine matter that anyone on the team can perform confidently.

Operate - We define production as systems that run automatically and correctly with minimal human intervention, connected to their surroundings. While this is easy to state, making it happen requires robust, highly available infrastructure and proper tooling for developers to observe and fix issues quickly.

Great, but we can’t adopt systems outside our cloud account

Most of our customers cannot send their data or compute resources outside their cloud premises. Acknowledging this, Outerbounds addresses the six layers described above by leveraging cloud services in your account for compute, storage, and security.

Consider the benefits of this setup:

Since storage remains in your cloud account, no data ever leaves your premises. This applies to object stores like S3, which we use to persist system state, as well as databases holding metadata.

Because compute is executed in your cloud account, no processing leaves your premises. Importantly, Outerbounds helps you leverage scalable cloud compute cost-effectively, without adding extra margin to the compute running on your account, which is governed by your existing savings plans and negotiated pricing.

By working with your existing security setup, such as your IAM policies and SSO providers, Outerbounds fits into your existing infrastructure and processes in a minimally invasive manner.

In other words, you can benefit from a first-class ML/AI platform within your existing cloud account, without having to move data or compute resources outside and without having to change your existing security policies.

Why not cloud-native ML/AI services?

Cloud-native ML/AI platforms, such as AWS SageMaker, Azure ML services, and Google’s Vertex AI, rely on foundational cloud services in a similar manner. When you deploy Outerbounds through cloud marketplaces, it is included as a line item in your existing cloud invoice, similar to the cloud-native services, and is governed by the same terms and conditions set by the cloud provider (which makes the legal department happy too).

What, then, is the difference? Consider the following three points:

Developer experience - Both the open-source Metaflow and Outerbounds, which provides the infrastructure for it, have been designed with ML developers and data scientists in mind. Outerbounds requires no knowledge of cloud infrastructure and no configuration. As a cohesively designed platform, it allows users to avoid navigating between tens of different, overlapping features and services. As a result, projects can be developed and shipped faster.

Cost of compute - Cloud-native services are typically priced by adding a margin over the cost of low-level compute resources. Especially with compute-heavy ML/AI work, these costs can quickly add up. Worse yet, the pricing models are often opaque and unpredictable, making it difficult to forecast how costs will trend over time. In contrast, Outerbounds is offered for a transparent platform fee, designed to help lower your cloud compute costs as your usage increases.

Support - ML, and especially AI, are fast-moving fields that can be challenging to keep up with. Outerbounds provides dedicated and knowledgeable support, leveraging our experience from supporting Netflix and hundreds of other leading ML and AI organizations over the years.
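To make the cost-of-compute point concrete, here is a minimal sketch of the arithmetic. It assumes, for illustration only, that a cloud-native service charges a fixed percentage margin on top of raw compute and that the platform fee is a flat monthly amount. The 25% margin, the $5,000 fee, and the function names are all hypothetical and do not come from any actual price list.

```python
def margin_priced_cost(raw_compute: float, margin: float) -> float:
    """Monthly cost when a service adds a percentage margin to raw compute."""
    return raw_compute * (1.0 + margin)


def platform_fee_cost(raw_compute: float, platform_fee: float) -> float:
    """Monthly cost when raw compute is billed at cost plus a flat fee."""
    return raw_compute + platform_fee


def break_even_compute(platform_fee: float, margin: float) -> float:
    """Raw compute spend at which both pricing models cost the same.

    Solves raw * (1 + margin) == raw + fee, which gives raw == fee / margin.
    """
    if margin <= 0:
        raise ValueError("margin must be positive")
    return platform_fee / margin


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to illustrate the shape of the curves.
    ASSUMED_MARGIN = 0.25
    ASSUMED_PLATFORM_FEE = 5_000.0

    for spend in (10_000.0, 20_000.0, 100_000.0):
        with_margin = margin_priced_cost(spend, ASSUMED_MARGIN)
        with_fee = platform_fee_cost(spend, ASSUMED_PLATFORM_FEE)
        print(f"raw compute ${spend:>9,.0f}: "
              f"margin-priced ${with_margin:>9,.0f}, "
              f"flat fee ${with_fee:>9,.0f}")

    print("break-even raw compute:",
          break_even_compute(ASSUMED_PLATFORM_FEE, ASSUMED_MARGIN))
```

Under these assumed numbers, the two models cost the same at $20,000 of monthly raw compute. Above that point the margin-priced bill grows in proportion to usage, while the flat fee stays constant.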

It is also worth noting that by building your systems on cloud-native APIs, you make moving to another cloud, should the need arise, very difficult. This lock-in is desirable for the cloud provider but means fewer options for you, which can be especially problematic in the rapidly evolving fields of ML and AI.

Cloud lock-in

In 2024, all the major cloud providers (AWS, Azure, and GCP) provide a roughly equal level of service at the foundational layer. They all provide robust, scalable services for storing data, running compute, and defining security policies.

The higher-level services differ more drastically. While it is easy to move from, say, EC2 to Google Compute Engine, moving from SageMaker to Vertex AI would mean nearly a total rewrite of code. Sadly, the higher-level cloud services are not open-source, so by building your systems with them, you are engaged in a tight, long-term relationship with the provider.

Consider an alternative:

By building your systems with open-source Metaflow running on Outerbounds, you gain two critical advantages:

Since Outerbounds supports all the major clouds, you can move your deployment between clouds as needed. No need to learn a new cloud environment and no need to rewrite code - the developer-facing interfaces and the UI stay the same across clouds, giving the infrastructure team full freedom to choose their cloud environment and architecture based on other business requirements, including pricing.

Since open-source Metaflow is used to develop systems on Outerbounds, you are not locked in to Outerbounds either. If our quality of service doesn’t satisfy you, you can always fall back to hosting Metaflow by yourself.

Leveraging resources across clouds without migrations

Clouds don't have to be mutually exclusive. Increasingly, we see teams maintaining a footprint across all the clouds, leveraging the particular strengths of each. Outerbounds supports such multi-cloud setups out of the box:

For instance, as depicted above, you can host your systems on AWS but leverage, say, Google Cloud TPUs for model training. This way, you can take advantage of the best parts of each cloud without having to pay the architectural cost of learning and supporting three different cloud environments in full. Crucially, this allows you to leverage cloud credits and other discounted pricing from the fiercely competitive clouds.

Outerbounds provides a unified UI and APIs for developing ML/AI systems, so from the developer's point of view, the existence of multiple clouds is almost immaterial. You can also use specialized cloud providers like CoreWeave, a major GPU cloud provider and one of our partners, as well as on-premises GPU resources. And more integrations are coming, so stay tuned!

Get started today

If building cloud-agnostic ML/AI systems using a developer-friendly platform, securely running in your cloud account, and leveraging the lowest-cost compute across the clouds sounds relevant to your interests, take Outerbounds for a test drive!

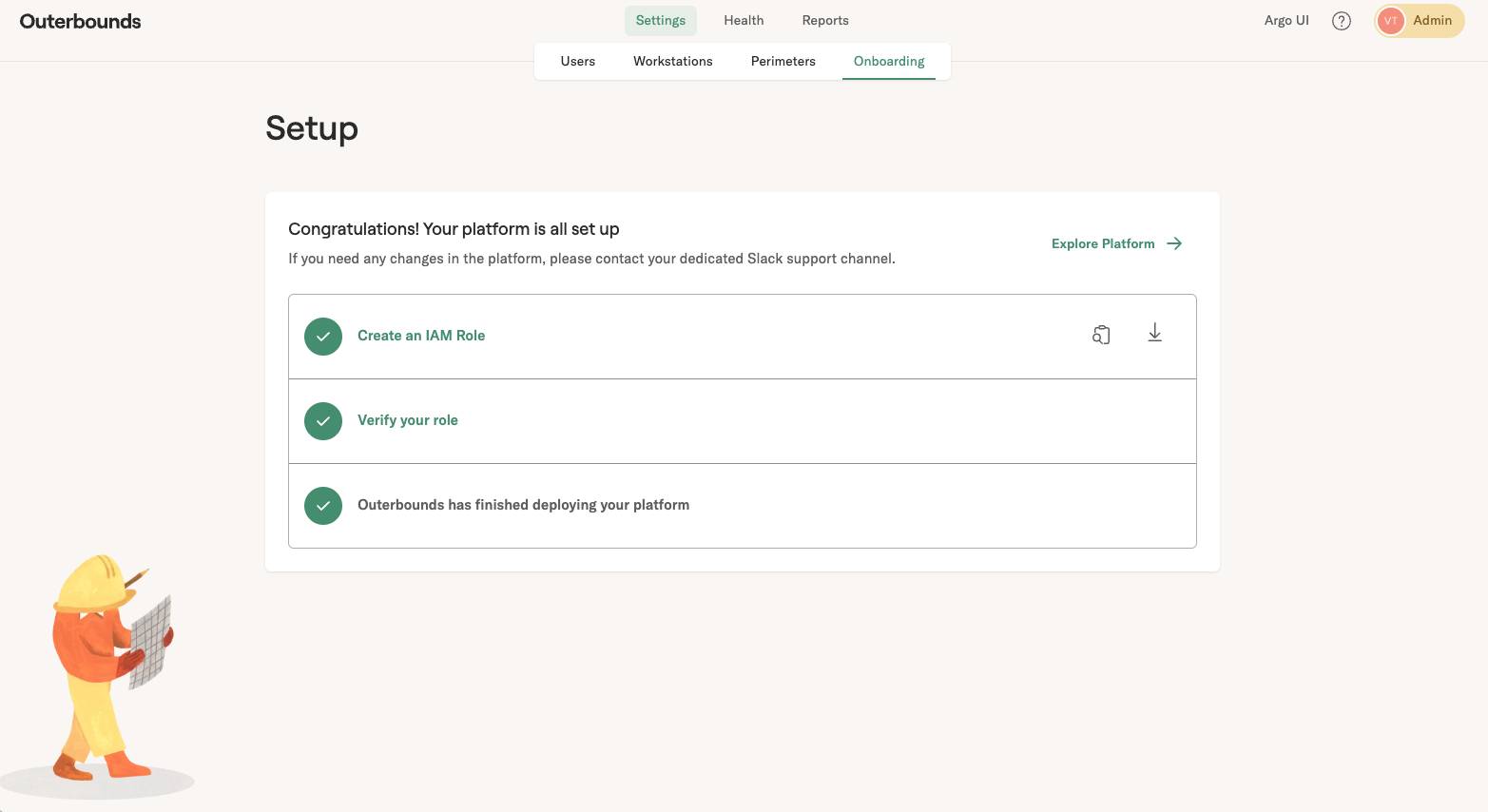

Deploying the platform doesn't require any cloud expertise, but if your engineers want to go over the technical details (including our SOC2 compliance), we are happy to do so.

Once the platform has been deployed, we provide hands-on onboarding sessions, ensuring that all ML developers and data scientists will become productive on the platform quickly. Or, in the words of Thanasis Noulas from The Trade Republic, an Outerbounds customer:

"A big advantage of Metaflow and Outerbounds is that we started rolling out much more complex models. We used to have simple manually-built heuristics. In six weeks, a team that hadn’t used Metaflow before was able to build an ML-based model, A/B test its performance, which handily beat the old simple approach, and roll it out to production."