If you answer yes to the following three questions, this article is for you:

- Do you run ML, AI, or data workloads in the cloud?

- Are you concerned about the total cost of doing it?

- Are you uncertain about whether you are doing it cost-effectively?

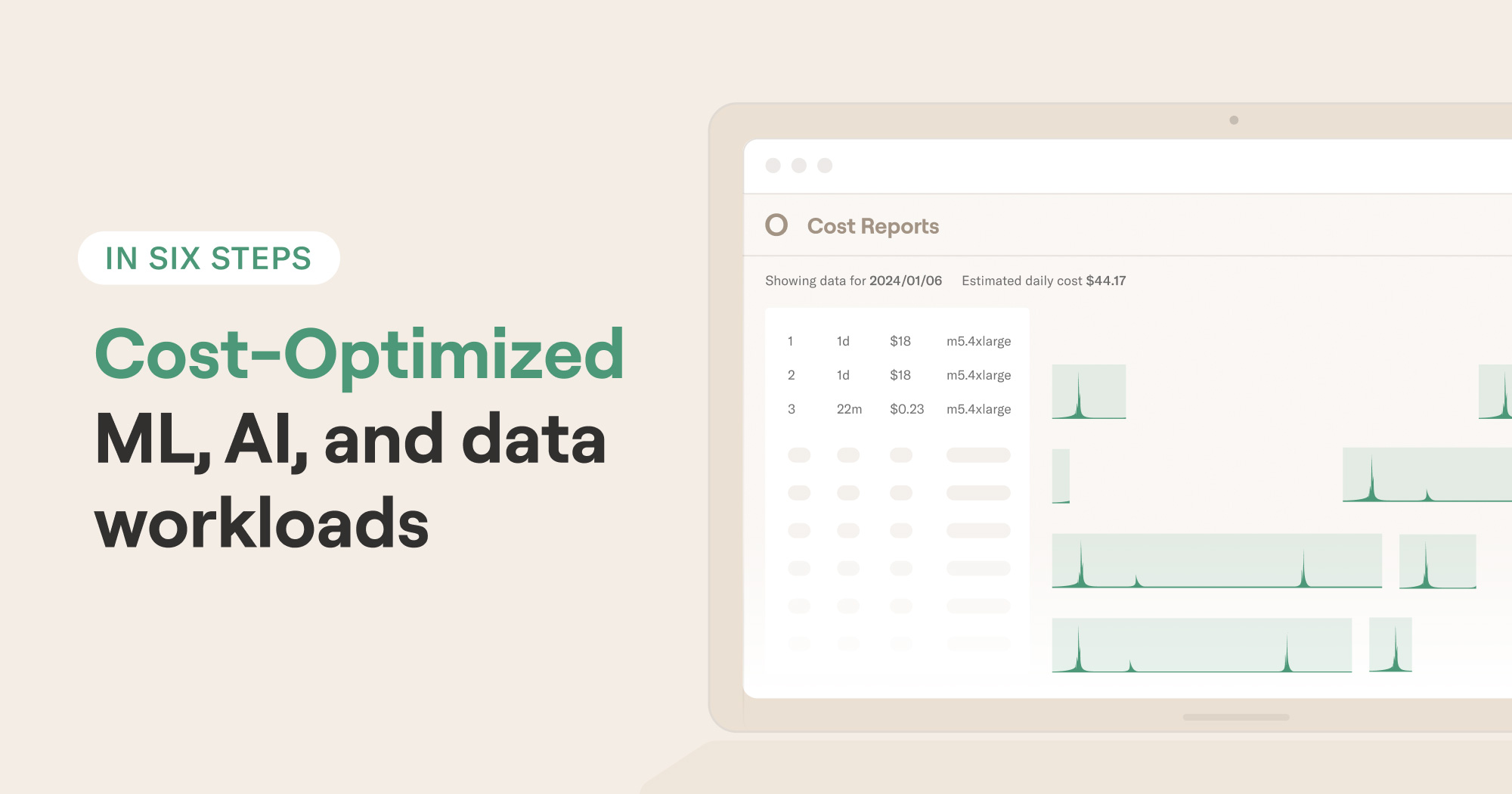

We share a six-step process for understanding and optimizing costs. You can follow it with tools you have today, or you can do it easily on the Outerbounds platform, which includes convenient UIs for observing and reducing costs, as illustrated below.

Motivation

A key upside of cloud computing is that it lets you exchange large upfront capital expenses, like buying a rack of expensive GPUs, for granular operational expenses, such as renting GPUs by the second. A key downside is that these small costs add up, often uncontrollably. You may end up with an eye-watering monthly invoice without a clear understanding of how the cost was incurred.

Even more frustratingly, as projects of various kinds get lumped into one invoice, it is hard to know whether the cost is commensurate with the value produced. Spending, say, $10k a month on an ad-targeting model that returns $1M is well justified; spending the same on an experimental model that someone forgot to shut down is not.

The two schools of cost control

Having worked with hundreds of ML, AI, and data teams, we have seen two major approaches to controlling cloud costs:

- Tight controls - set blanket limits for the resources that can be used, combined with strict budgets and heavy guardrails to limit spend.

- Transparent costs - invest in tooling that makes costs visible, attribute them to individual projects, and place them in the context of value produced, combined with more granular resource management to limit spend.

While the first approach is easy to implement, it may have the paradoxical effect of increasing costs indirectly, as you may end up trading cheap compute hours for expensive human hours. The pace of development slows down as users have to wait for scarce resources, hindering valuable experimentation and innovation.

Netflix, where we started Metaflow, relies on the second approach: instead of setting blanket limits upfront, it provides a high degree of cost visibility. In this setup, you can let developers experiment relatively freely and ship quickly to production to prove the value, periodically checking that the ROI stays on track. When this is combined with tooling that encourages and enforces cost-efficient operations, you can sleep well knowing that costs can’t spike unexpectedly.

Many organizations start with the first approach due to a lack of tooling and clear processes. Having seen the benefits of the second approach, we wanted to make it easily achievable for any organization, without a Netflix-level engineering investment.

Let’s take a look at how it works in practice.

The six steps to transparent, cost-optimized workloads

Since this article is concerned with cost optimization, we skip the details of how workflows are developed - more about that in the future! Let’s just assume that you have equipped your developers with a tool like Metaflow, which allows them to execute workloads in the cloud easily. As a result, a mix of experimental and production workloads runs in your cloud account.

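At the end of the month, you receive a cloud invoice, and you want to understand whether the money was spent wisely. Here, we suggest a straightforward six-step process that you can follow to examine, attribute, and minimize costs:

- What does the top-line cost look like?

- What instances drive the cost?

- What workloads drive the instances?

- What resources do the workloads use?

- What workloads can be optimized?

- Where should the workloads execute?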

Let’s go through the steps one by one.

1. What does the top-line cost look like?

The cloud bill may show a big number, but do you know how much of it is attributable to ML, AI, and data efforts specifically? Before jumping into premature optimization, it is good to sanity-check how much money is spent on these workloads overall:

Looking at this view, our customers often notice that the costs attributed to ML, AI, and data are low enough - about $20/day in the screenshot above - hinting that cost-optimization efforts are better spent elsewhere.
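If your billing data carries a tag or label that marks ML, AI, and data resources, this sanity check is essentially a group-by over the bill's line items. A minimal sketch in Python, assuming hypothetical line items given as `(category, usd)` pairs; the category names and amounts are made up for illustration, and `cost_by_category` is a hypothetical helper, not part of any platform API:

```python
from collections import defaultdict


def cost_by_category(line_items):
    """Sum (category, usd) line items; return {category: (total_usd, share_of_bill)}."""
    totals = defaultdict(float)
    for category, usd in line_items:
        totals[category] += usd
    bill = sum(totals.values())
    # Guard against an empty or zero bill to avoid division by zero.
    return {cat: (usd, usd / bill if bill else 0.0) for cat, usd in totals.items()}


if __name__ == "__main__":
    # Hypothetical line items for one day; real ones would come from a billing export.
    items = [("ml-ai-data", 12.5), ("ml-ai-data", 7.5), ("web-backend", 180.0)]
    for cat, (usd, share) in sorted(cost_by_category(items).items()):
        print(f"{cat}: ${usd:.2f} ({share:.0%} of the bill)")
```

If the ML, AI, and data share comes out small, the effort is usually better spent elsewhere, as noted above.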

It is surprising how elastic cloud costs are: you can pay 10-30x more to execute the same workloads on a system that adds its own margin on compute, plus other opaque service costs, than on basic low-cost instances that do the job equally well.

2. What instances drive the cost?

Let’s assume that the top-line cost is high enough to warrant further investigation. Besides fixed networking costs and other minor line items, the cost is mostly driven by on-demand compute resources.

As an example, let’s dig deeper into February 23rd, which shows a suspiciously high cost of $141.29, and see what instances were running that day:

On the right, you can see a 24-hour timeline showing all instances that ran that day. Outerbounds auto-scales instances based on demand; in this case, four instances were active during the day. Inside each instance span, a utilization chart indicates how actively the instance was being used.

We notice that a single instance accounts for 50% of the daily spend. Unsurprisingly, it is a p3.8xlarge GPU instance that ran for five hours hosting a workstation session.
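The underlying arithmetic is simple: an instance's cost is its hourly price times the hours it ran, and its share is that cost over the day's total. A sketch with hypothetical instance names and placeholder hourly prices (not real quotes; check your provider's pricing for actual numbers):

```python
def rank_instances_by_cost(instances):
    """instances: (name, hourly_usd, hours) tuples.

    Returns (name, cost_usd, share_of_total) tuples, most expensive first.
    """
    costs = [(name, hourly_usd * hours) for name, hourly_usd, hours in instances]
    total = sum(cost for _, cost in costs)
    ranked = sorted(costs, key=lambda item: item[1], reverse=True)
    return [(name, cost, cost / total if total else 0.0) for name, cost in ranked]


if __name__ == "__main__":
    # Hypothetical instances with placeholder prices, not real quotes.
    day = [("gpu-workstation", 12.0, 5), ("cpu-node-1", 0.8, 24), ("cpu-node-2", 0.8, 10)]
    for name, cost, share in rank_instances_by_cost(day):
        print(f"{name}: ${cost:.2f} ({share:.0%})")
```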

Seeing results like this, a common knee-jerk reaction is to switch to cheaper instance types. While that may be the right action, it is better to first understand the workloads in detail, as we do below, so that we don’t optimize the instance mix blindly.

3. What workloads drive the instances?

Let’s say we notice that the daily cost is persistently too high. In a real-world production environment, the baseline cost is typically dominated by recurring, scheduled workloads. Since workloads are co-located on instances, it can be devilishly hard to understand why the instances are up just by looking at the list of instances. To find out, we must dig deeper, which luckily takes just a few clicks in the UI.

Consider a case with a scheduled workload that runs after midnight and spans 16 parallel nodes. There are also smaller workloads later in the day that we want to identify.

We can click an instance to see all workloads that contributed to the instance being active. In this case, we see that DBTFlow and MonitorBench caused 48 cents of extra spend in addition to the scheduled workload.

Note that the workloads correspond to Metaflow tasks, so we can attribute costs down to the individual functions that were executed. A typical ML workflow could, for example, include a very expensive training step while the other parts of the workflow are cheap to execute. Or, you may be surprised to notice that feature transformation steps cost more than the training itself.
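To make the idea of attribution concrete, here is one plausible scheme: split an instance's cost across the tasks that ran on it in proportion to each task's requested CPU-hours. This is a hypothetical sketch for illustration, not necessarily how Outerbounds computes attribution; a fuller scheme would also weigh memory and GPUs, and this one spreads idle time across tasks by the same proportions.

```python
from collections import defaultdict


def attribute_instance_cost(instance_cost_usd, tasks):
    """Split one instance's cost across tasks in proportion to requested CPU-hours.

    tasks: iterable of (flow_step, requested_cpus, hours). Repeated runs of the same
    step are summed. Idle time on the instance is spread by the same proportions.
    """
    weights = defaultdict(float)
    for flow_step, cpus, hours in tasks:
        weights[flow_step] += cpus * hours
    total = sum(weights.values())
    if total == 0:
        return {}
    return {step: instance_cost_usd * w / total for step, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical tasks sharing a $10 instance.
    shares = attribute_instance_cost(10.0, [("TrainFlow.train", 3, 1.0), ("TrainFlow.start", 1, 1.0)])
    print(shares)
```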

4. What resources do the workloads use?

If you identify redundant workloads, you can simply terminate them to reduce costs. However, you may notice that costs are driven by workloads that are necessary but simply too expensive. This raises the question of whether the workload could be cost-optimized.

When it comes to cloud cost-optimization, a key realization is that you pay for the amount of resources you request, not for the amount you use. For instance, a task could request 5 GPUs but only use one of them, or request 10GB of memory but only use 2GB - 80% of the spend is wasted! It is like having a gym membership that you rarely use - good for the gym’s business, bad for your finances.
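The waste arithmetic above fits in a few lines. A minimal sketch, assuming (as a simplification) that the cost of a request scales linearly with the amount requested; `wasted_fraction` and `wasted_cost` are hypothetical helper names:

```python
def wasted_fraction(requested: float, used: float) -> float:
    """Fraction of a resource request that goes unused: you pay for the request, not the usage."""
    if requested <= 0:
        raise ValueError("requested must be positive")
    return max(requested - used, 0.0) / requested


def wasted_cost(cost_of_request_usd: float, requested: float, used: float) -> float:
    """Portion of the spend attributable to the unused part of the request."""
    return cost_of_request_usd * wasted_fraction(requested, used)


if __name__ == "__main__":
    print(wasted_fraction(10, 2))  # 10GB requested, 2GB used: 0.8
    print(wasted_fraction(5, 1))   # 5 GPUs requested, 1 used: 0.8
```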

Hence, to drive costs down, you shouldn’t request more resources than you actually need. The challenge is to identify the workloads that waste the most resources. To make matters harder, workloads are not static, so you need to understand their behavior over a longer period of time.

Outerbounds includes a view just for this:

Here, we can dig deeper into the workloads we identified in the previous step. In general, it is not necessary to optimize every single task; you can focus on the biggest offenders: tasks that are executed frequently and consume a lot of resources.

For instance, in the above screencast, the step ScalableFlow.start has been executed 69 times over the last 30 days. It uses its one requested CPU core quite efficiently, but it uses only 10% of the requested memory, which is an opportunity for optimization. In another example, JaffleFlow.jaffle_seed bursts above its requested number of CPUs, but it uses only about 50% of its requested memory.
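A rough way to find the biggest offenders yourself is to aggregate per-run records for each step and rank steps by total wasted memory-hours, which naturally favors steps that run often and over-request a lot. A sketch assuming a hypothetical `Run` record format; using the observed peak rather than average usage is a deliberately conservative choice:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Run:
    step: str            # e.g. "ScalableFlow.start"
    requested_gb: float  # memory requested for the task
    peak_gb: float       # peak memory actually observed
    hours: float         # task runtime


def rank_memory_offenders(runs):
    """Return (step, n_runs, wasted_gb_hours, avg_peak_utilization), largest waste first."""
    waste = defaultdict(float)
    utilization = defaultdict(list)
    for r in runs:
        waste[r.step] += max(r.requested_gb - r.peak_gb, 0.0) * r.hours
        utilization[r.step].append(r.peak_gb / r.requested_gb)
    ranked = [
        (step, len(utilization[step]), waste[step], sum(utilization[step]) / len(utilization[step]))
        for step in waste
    ]
    return sorted(ranked, key=lambda row: row[2], reverse=True)
```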

By right-sizing the resource requests, you can reduce wastage and pack more workloads on the same instance that you are already paying for, resulting in instance cost savings. Once you understand what resources your workloads actually need, you can also optimize your instance mix, getting rid of overly expensive instances if they are not needed.
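To see why right-sizing can translate into instance savings, here is a first-fit-decreasing bin-packing sketch that counts how many fixed-size instances are needed to hold a set of memory requests. It is a simplification: it considers only one dimension (memory) and a hypothetical instance size, whereas real schedulers consider CPU, memory, and GPUs together.

```python
def instances_needed(requests_gb, instance_gb):
    """First-fit decreasing: put each request into the first instance with room, else open a new one."""
    free = []  # remaining memory on each open instance
    for req in sorted(requests_gb, reverse=True):
        if req > instance_gb:
            raise ValueError(f"request {req}GB exceeds instance size {instance_gb}GB")
        for i, capacity in enumerate(free):
            if req <= capacity:
                free[i] = capacity - req
                break
        else:
            free.append(instance_gb - req)
    return len(free)


if __name__ == "__main__":
    # Eight tasks on hypothetical 32GB instances, before and after right-sizing.
    print(instances_needed([10] * 8, 32))  # 3 instances
    print(instances_needed([4] * 8, 32))   # 1 instance
```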

5. What workloads can be optimized?

To optimize resource requests, you should contact the owner of the workflow in question, as reported in the UI. They can simply adjust the @resources decorator in the offending step.
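The change itself is small. In Metaflow, `@resources` accepts `cpu`, `gpu`, and `memory` (in megabytes) and is placed above `@step`. Below is a sketch of a hypothetical helper that turns observed peak usage into a suggested `@resources` line; the 20% headroom and the rounding to whole cores and 1024MB steps are assumptions, not a Metaflow or Outerbounds rule:

```python
import math


def suggest_resources(peak_cpus: float, peak_memory_mb: float,
                      headroom: float = 1.2, memory_step_mb: int = 1024) -> str:
    """Suggest a Metaflow @resources line from observed peaks plus headroom (hypothetical helper)."""
    cpu = max(1, math.ceil(peak_cpus * headroom))
    # Round memory up to the next multiple of memory_step_mb, with at least one step.
    steps = math.ceil(peak_memory_mb * headroom / memory_step_mb)
    memory = max(memory_step_mb, steps * memory_step_mb)
    return f"@resources(cpu={cpu}, memory={memory})"


if __name__ == "__main__":
    # A step that peaks at ~0.8 cores and 1,600MB while requesting far more.
    print(suggest_resources(0.8, 1600))
```

The owner would then paste the suggested line above the step's `@step` decorator and re-check utilization after the next few runs.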

You may wonder why the platform couldn’t do this right-sizing automatically. A challenge is that resource consumption can change over time as the amount of data grows, among other factors, so as of today it is safer to keep a human in the loop who has domain knowledge about the workload in question.

Luckily, Outerbounds makes it easy for developers to eyeball the resource consumption of their tasks in real time in the UI:

Here, the red line shows the amount of resources requested - 2 CPU cores and 8GB of memory - and the blue line shows the actual resources used, which grows to about one fully utilized CPU core during the task execution.
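While authoring a flow, you can get a rough local analogue of this requested-vs-used comparison with the Python standard library. A sketch, assuming a Unix system: CPU time from `time.process_time()` divided by wall time approximates the average number of cores used, and `resource.getrusage(...).ru_maxrss` reports the process's peak memory. It does not see child processes and is no substitute for the platform's per-task monitoring; `measure` is a hypothetical helper name.

```python
import resource
import sys
import time


def measure(fn, *args, **kwargs):
    """Run fn; return (result, average CPU cores used, peak RSS of this process in MB). Unix only."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # user + system CPU time of this process, all threads
    result = fn(*args, **kwargs)
    cpu_used = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    # ru_maxrss is the peak resident set size of the whole process so far,
    # reported in kilobytes on Linux and in bytes on macOS.
    maxrss = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    peak_mb = maxrss / (1024 * 1024) if sys.platform == "darwin" else maxrss / 1024
    avg_cores = cpu_used / wall if wall > 0 else 0.0
    return result, avg_cores, peak_mb


if __name__ == "__main__":
    _, cores, peak_mb = measure(sum, range(10_000_000))
    # Compare against a request of, say, 2 cores and 8192MB.
    print(f"avg cores: {cores:.2f}, peak memory: {peak_mb:.0f}MB")
```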

This information is aggregated in the cost optimization view shown in the previous section to give you a macro-level view of resource consumption. As a developer, it is a good idea to check this micro-level view as you author flows, to make sure your resource requests are at the right scale.

6. Where should the workloads execute?

After you have gone through steps 1-5, your workloads should be lean and mean, running efficiently on right-sized instances. Congratulations! At this point you are more sophisticated than 90% of companies using the cloud.

By going through these steps you can benefit from extremely cost-efficient compute resources with minimal wastage and no extra margin. As a result, you can start running more experiments, more production workloads, and produce more value with data, ML, and AI.

As your appetite to do more grows, you may wonder if there are even more opportunities to reduce costs. Outerbounds provides one more major lever for cost-optimization - you can move workloads between clouds easily:

Without changing anything in your code, you can move workloads between AWS, Google Cloud, and Azure. This allows you to take advantage of various discounts and credits that the fiercely competitive clouds offer. Also, you gain leverage for negotiating spend commitments with the clouds, as you have a realistic and effortless option to move workloads if necessary.

In addition, you may be able to leverage on-prem compute resources, choosing the most cost-optimal instance mix for your workloads.
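Choosing where to run then comes down to comparing effective prices for offers that fit the workload. A sketch with a hypothetical offer catalog: the provider labels, instance names, hourly prices, and discount rates are placeholders, not real quotes, and the effective price simply applies a flat discount (for example, from credits or a spend commitment) to the list price.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    provider: str          # e.g. "aws", "gcp", "azure", "on-prem" (illustrative labels)
    instance: str
    cpus: int
    memory_gb: int
    gpus: int
    hourly_usd: float      # list price
    discount: float = 0.0  # fraction off list price from credits or commitments

    @property
    def effective_hourly(self) -> float:
        return self.hourly_usd * (1.0 - self.discount)


def cheapest_offer(offers, cpus, memory_gb, gpus=0):
    """Return the lowest effective-price offer that satisfies the requirements, or None."""
    fits = [o for o in offers if o.cpus >= cpus and o.memory_gb >= memory_gb and o.gpus >= gpus]
    return min(fits, key=lambda o: o.effective_hourly, default=None)
```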

Start saving money today

If your ML, AI, and data teams could benefit from transparent costs, reduced cloud bills, easy portability between clouds, and higher developer productivity, take Outerbounds for a spin! You can start a 30-day free trial today - the platform deploys securely in your cloud account in a few minutes.