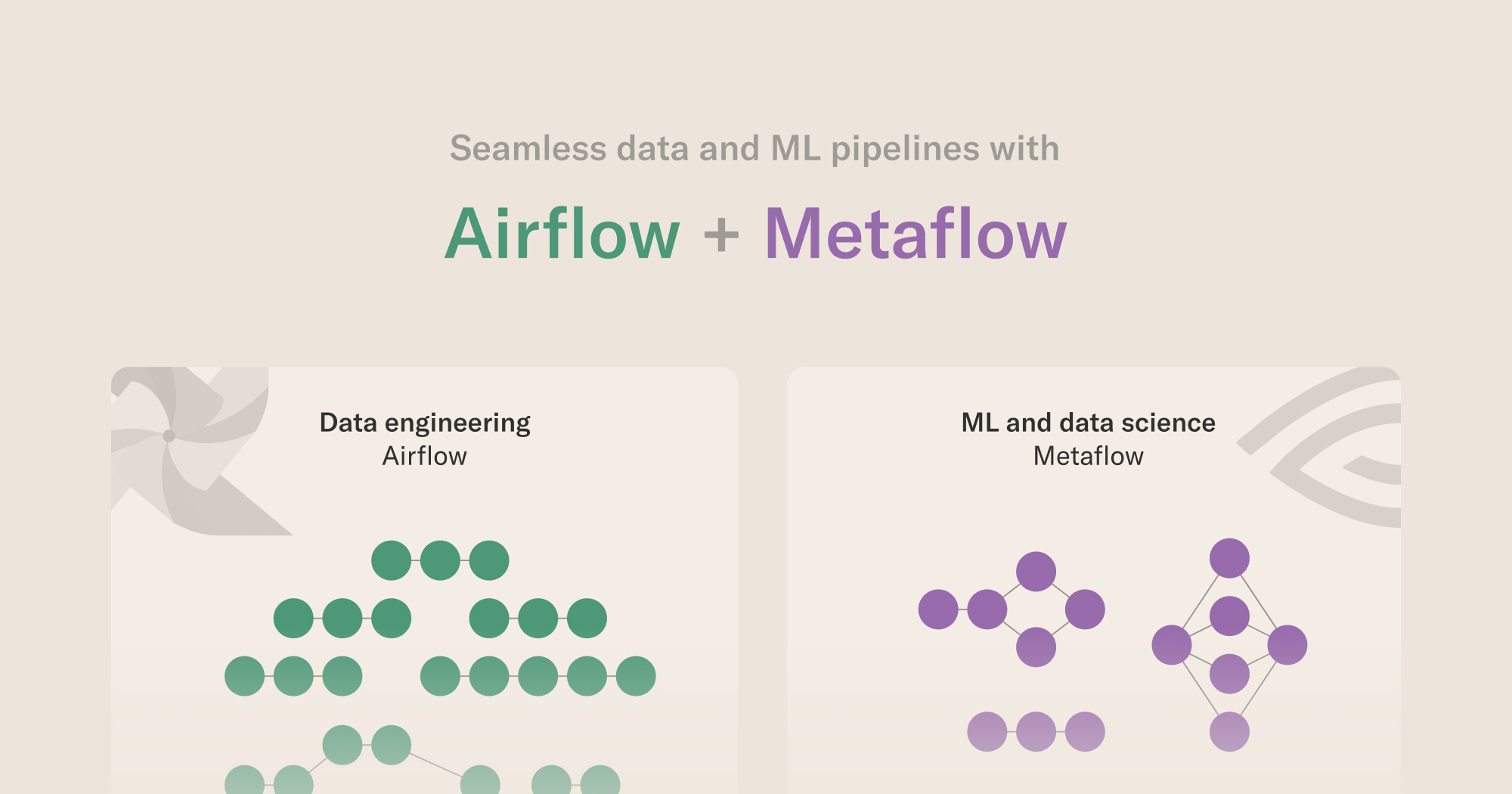

This post, originally published in the Airflow blog, is written in collaboration with Michael Gregory from Astronomer. We show how ML and data science teams can develop with Metaflow and deploy flows on Airflow, avoiding the need to migrate existing Airflow workflows or to navigate between multiple systems.

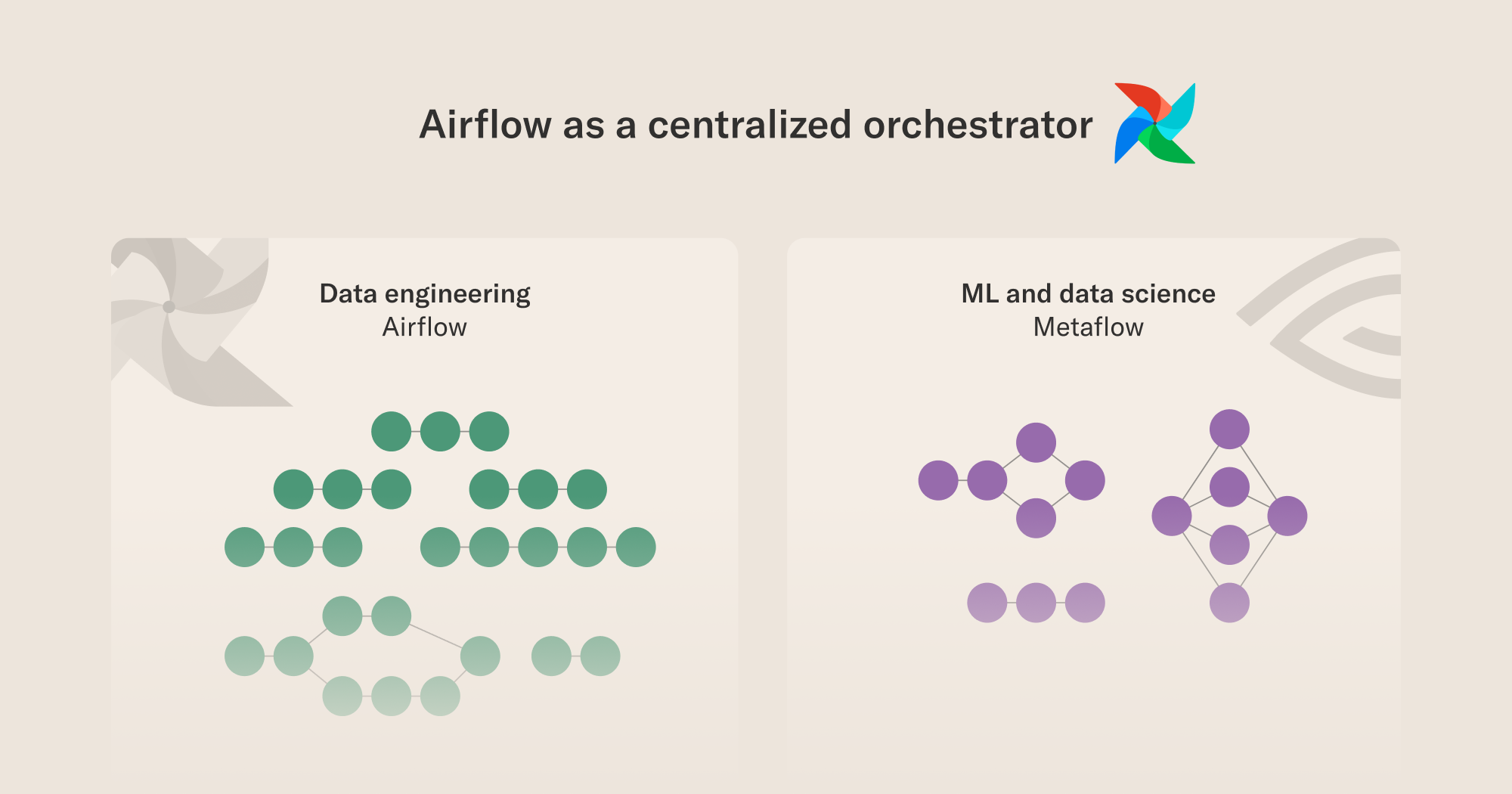

Consolidated workflow orchestration for all personas

Workflow orchestration sits at the nexus of all of an organization’s efforts to create business value from data. Many organizations have disparate, and often disconnected, teams working across the data value chain. There may be teams maintaining and governing data warehouses, separate teams building security ops, teams building ETL and data engineering workflows, and teams building ML models and ML operations.

Each of these areas is vital on its own and, because each is extremely difficult to do well, much of an organization’s mental energy is spent on just getting them right individually. Pulling them all together into a cohesive whole is yet another monumental task.

And yet business users and leaders are increasingly interested in tying it all together so that business value and return on investment can be realized. Each team often has platforms and infrastructure specific to its needs, and while each platform may have workflow orchestration components, they are usually specific to that platform and lack the holistic, single view that is needed when the rubber meets the road to get products out the door.

This is where enterprise workflow orchestration comes in. To be effective, the workflow orchestrator must be future-proof to protect investment while being secure, open, extensible, able to connect to anything, and able to support any language for any user or skill set. This is a tall order for any piece of technology, and many organizations must stitch together more than one tool. This isn’t easy and, at the very least, the number of tools should be reduced to the absolute minimum.

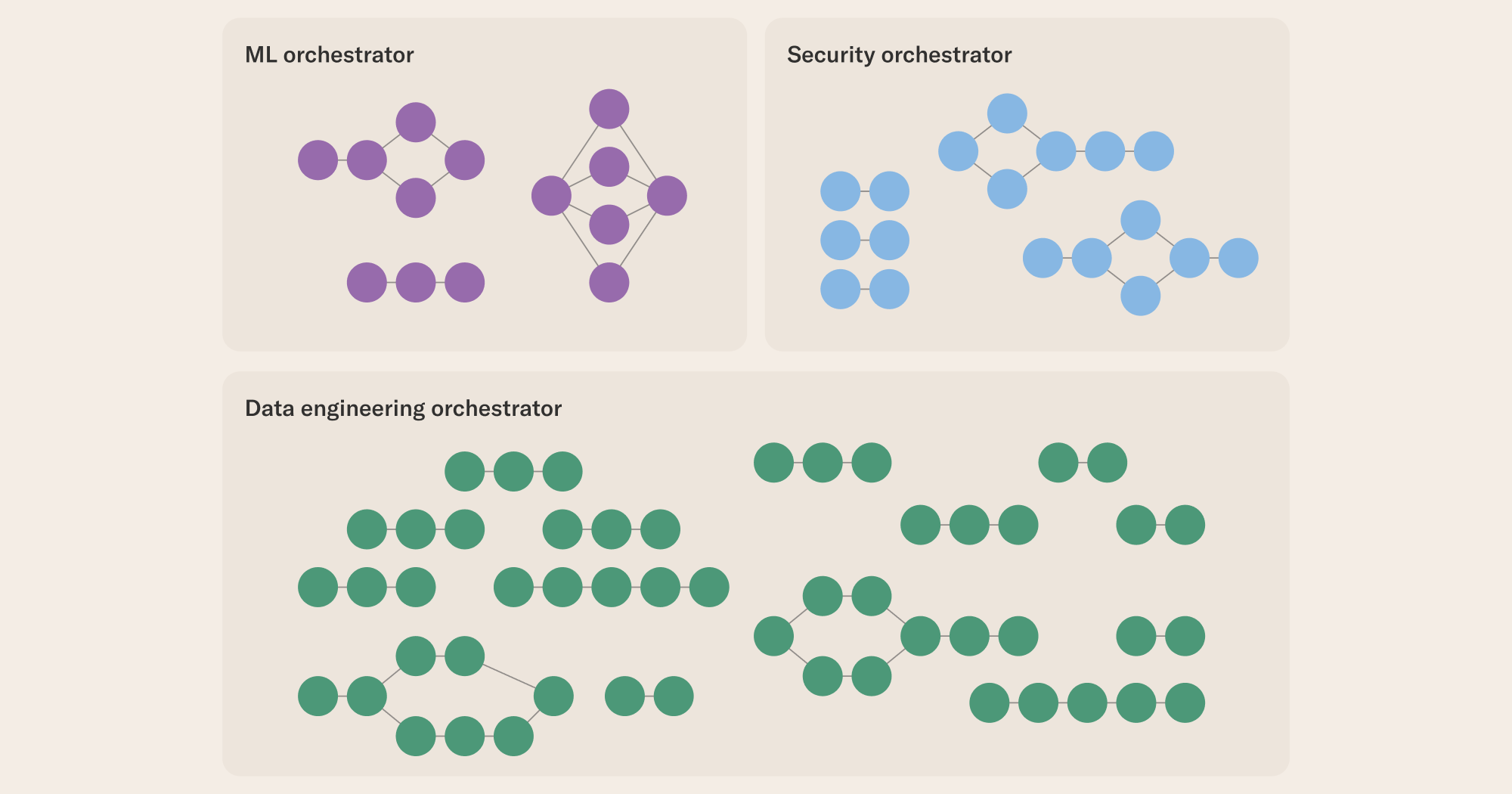

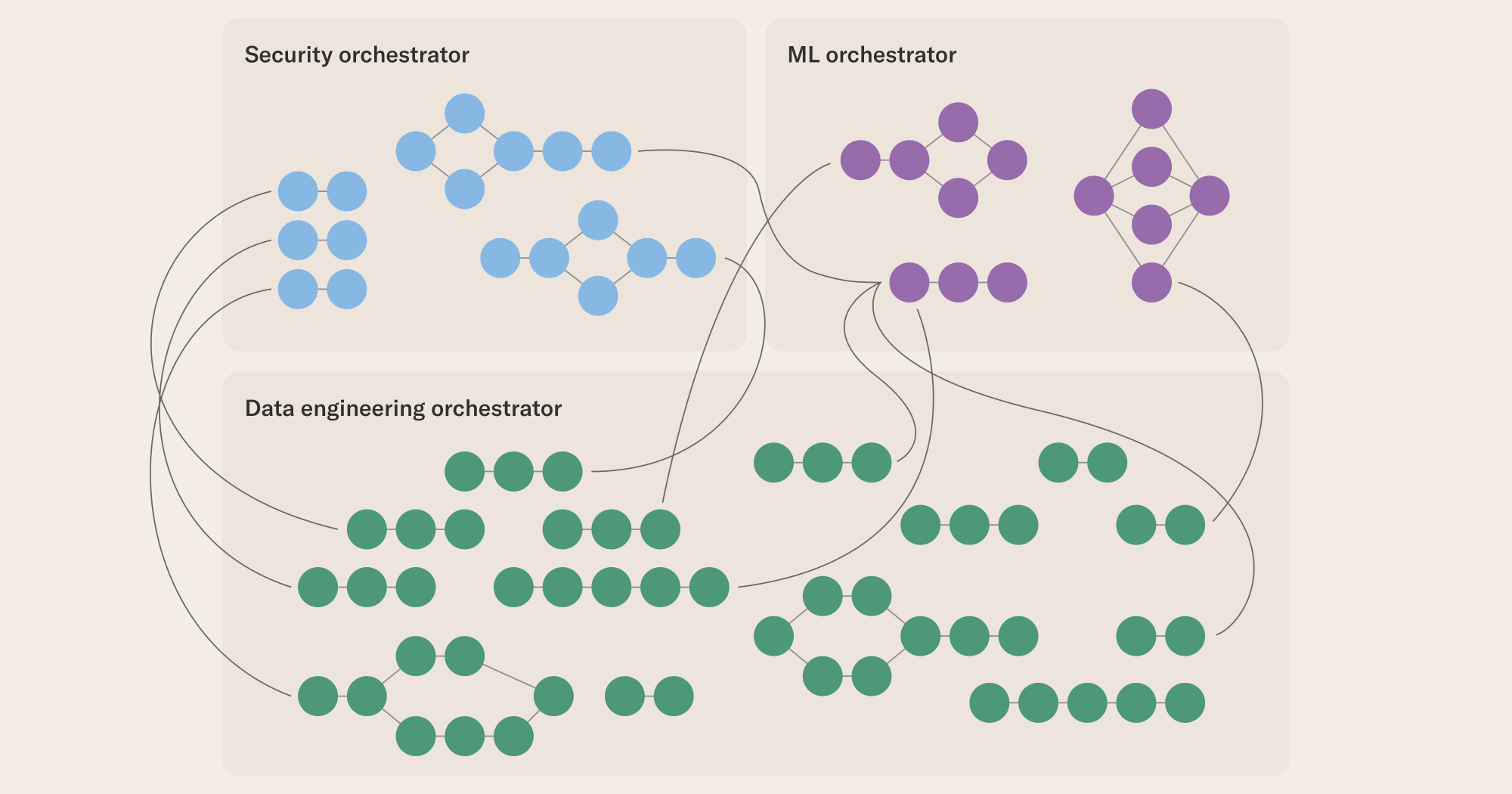

At the 10,000-foot view, there are two main approaches to dealing with multiple orchestrators and bridging the gaps between teams and their tools.

Hierarchical Orchestration

In the hierarchical approach, orchestrators trigger workflows in other orchestrators. While this allows, for instance, different tools to support domain-specific requirements, it relies heavily on the tools’ openness and extensibility.

Structurally, this could look like a hierarchy with an “uber-orchestrator” or a mesh. Challenges arise, however, with regard to visibility and resource utilization. Visibility becomes an issue whenever more than one orchestrator is used and the state and metadata are spread across multiple “split-brains”.

In this case, troubleshooting, reproducibility, and governance become difficult. Likewise, resource utilization and efficiency suffer because infrastructure management is duplicated for each tool. Often the same bits of code generating the same data are run on multiple platforms. In practice, the hierarchical approach often defeats the purpose of holistic orchestration.

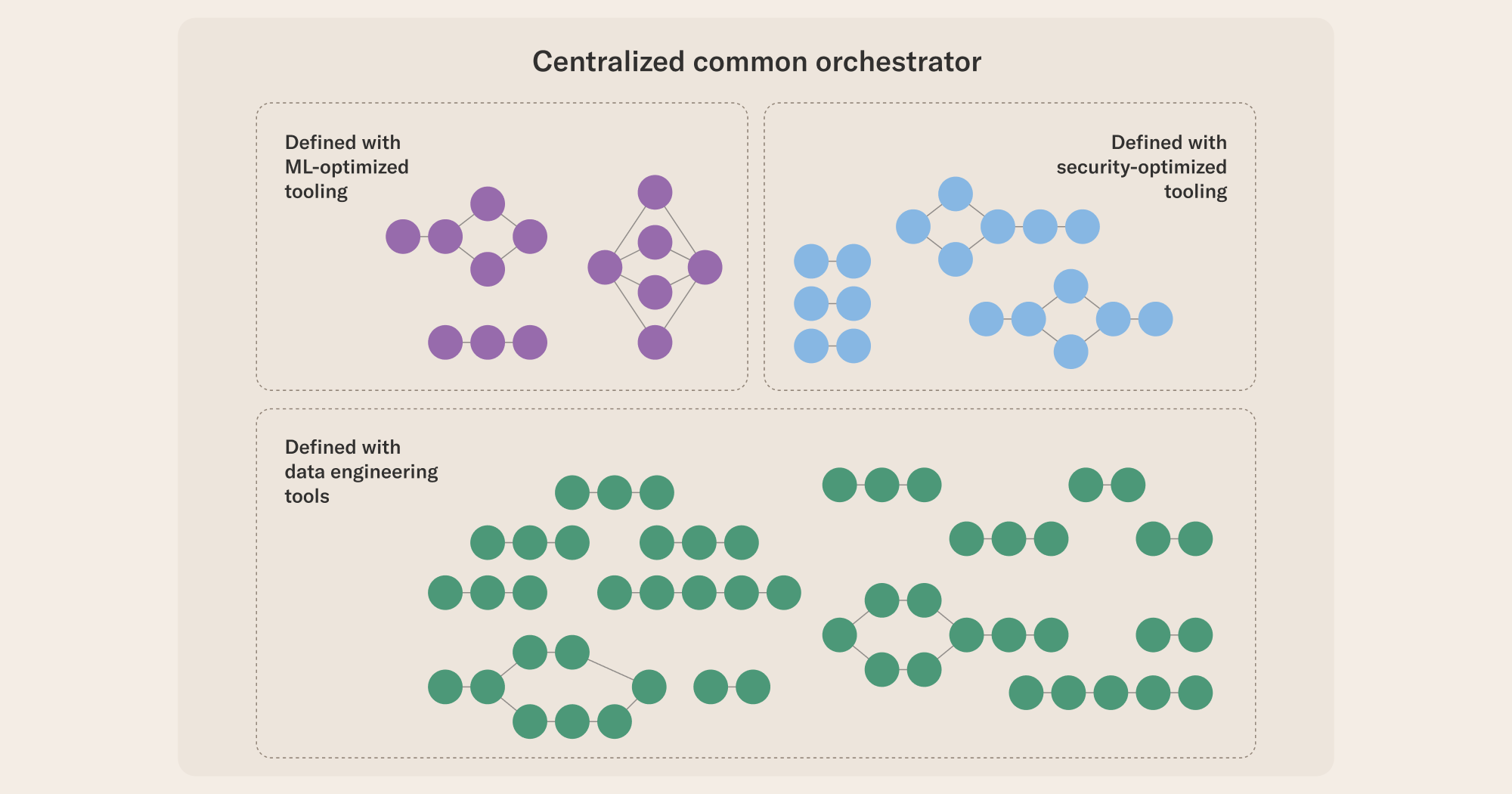

Domain-Specific Orchestration

In this approach, each team can use the tools, sometimes homegrown, that best meet their needs with a domain-specific language (DSL). The DSL allows users to build the way they want and export their workflows to a centralized orchestrator.

With a single orchestrator, visibility, fault analysis, and resource management are all simplified. Furthermore, this approach lends itself well to things like dev and prod separation. For instance, teams can use their tool of choice for development and leverage a single orchestrator for production.

A large-scale example of this approach is Netflix’s internal workflow orchestrator, Maestro, which supports a number of DSLs optimized for different use cases, including Metaflow for ML and data science use cases.

To provide a concrete example of how you can replicate the same successful pattern in your environment, this blog demonstrates the DSL approach using Apache Airflow and Metaflow.

Apache Airflow

Born of necessity as Airbnb’s workflow orchestration and data pipeline tool, Airflow has grown to become the largest and most active open source project in the history of the Apache Software Foundation. Airflow brings a huge toolbox of integrations to nearly every data platform and tool as well as a vast community of contributors.

With more than 16 million monthly downloads, Airflow is the glue that pulls the modern data stack together. It is heavily used in data engineering and MLOps use cases and it is exactly Airflow’s ability to connect from anything and to anything that makes it a perfect “uber orchestrator”.

Metaflow

Forged in the real-life, complex landscape at Netflix, Metaflow was designed to meet the specific needs of high-powered users with simplicity and scalability. Expanding beyond Netflix, it has also found an enthusiastic audience across thousands of organizations.

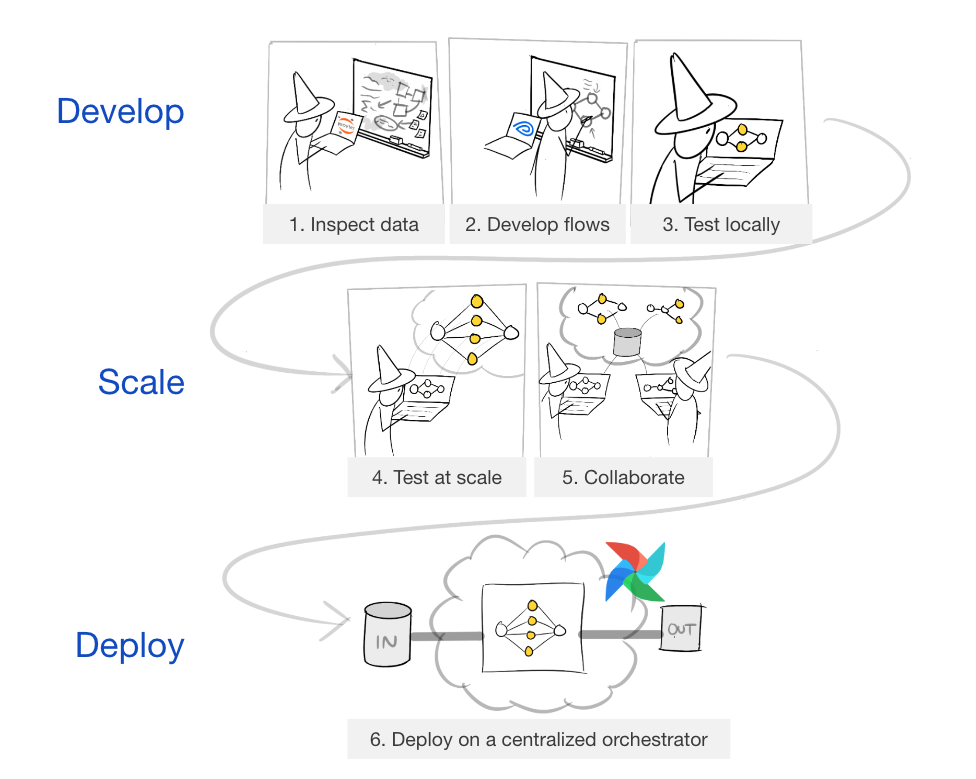

Metaflow strives to provide the best possible user experience for data scientists and ML engineers, allowing them to focus on the parts they like. Similar to Airflow, Metaflow treats workflows as the key abstraction and simplifies passing data between steps. Additionally, Metaflow enables simple use of cloud compute resources like GPUs and helps with inspecting runs in notebooks as well as tracking and versioning code, data, and models.

This figure, adapted from the Metaflow documentation, illustrates the idea:

Metaflow makes it easy to develop data- and compute-intensive applications, experiment with them locally, and leverage cloud compute for scalability. Once everything works, the data scientist can deploy their Metaflow flows in Airflow for production orchestration without having to write configuration files or change anything in the code.

As a result, data scientists and ML engineers can develop solutions quickly and deploy them as first-class workflows on the company’s centralized workflow orchestrator, seamlessly connecting to other data pipelines.

Example code walkthrough

Let’s take a look at how this works in practice! In our code example, we try to simulate two teams:

- Data Engineering Team: Responsible for processing and refining data, this team transforms raw data into actionable insights, readying it for subsequent analysis.

- Machine Learning Team: They create effective ML models, heavily relying on the datasets curated by the Data Engineering team.

Tooling and Orchestration

Both teams use specialized tools for their tasks. The real strength, however, is in their integration. The orchestrator ensures seamless coordination between these teams.

The Astro CLI is the fastest way to develop Airflow DAGs and leverages Docker Desktop (with Kubernetes) integration for a simple but powerful local development environment. Once DAGs are developed, the Astro CLI offers a containerized deployment option to deploy workflows to hosted, managed, and secure Astro Hosted instances for production.

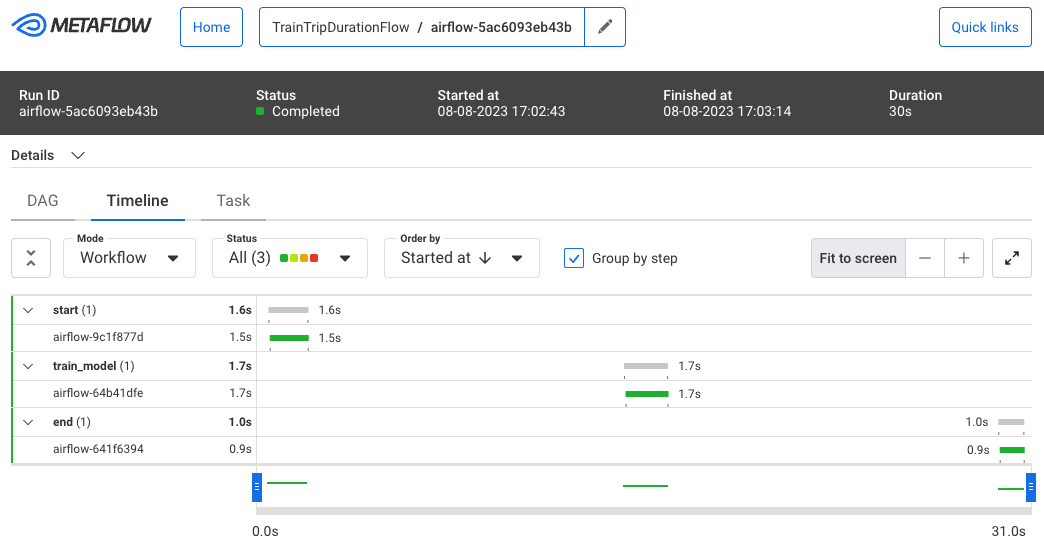

This demonstration uses local containerized instances of Metaflow running alongside Airflow containers created by the Astro CLI. This enables the creation of Metaflow DAGs and conversion to Airflow-compatible DAGs. By using the KubernetesPodOperator, the Metaflow conversion enables consistent task execution in both local development and production environments.

Airflow orchestrates the workflows, fostering collaboration and a single system of record for monitoring and troubleshooting, while Metaflow offers tools apt for data-heavy ML tasks and facilitates integration into an Airflow DAG without code alterations.

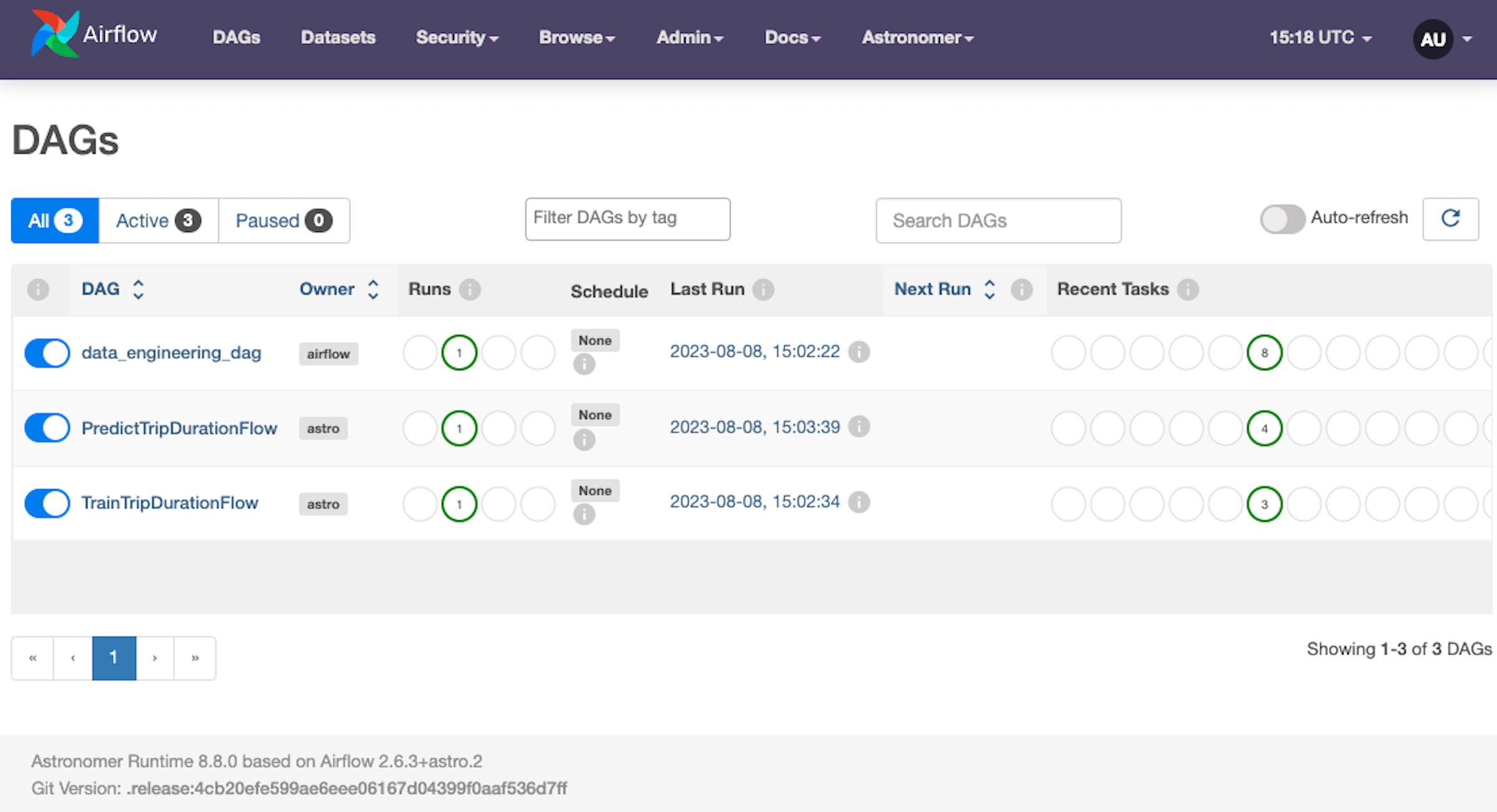

Workflows

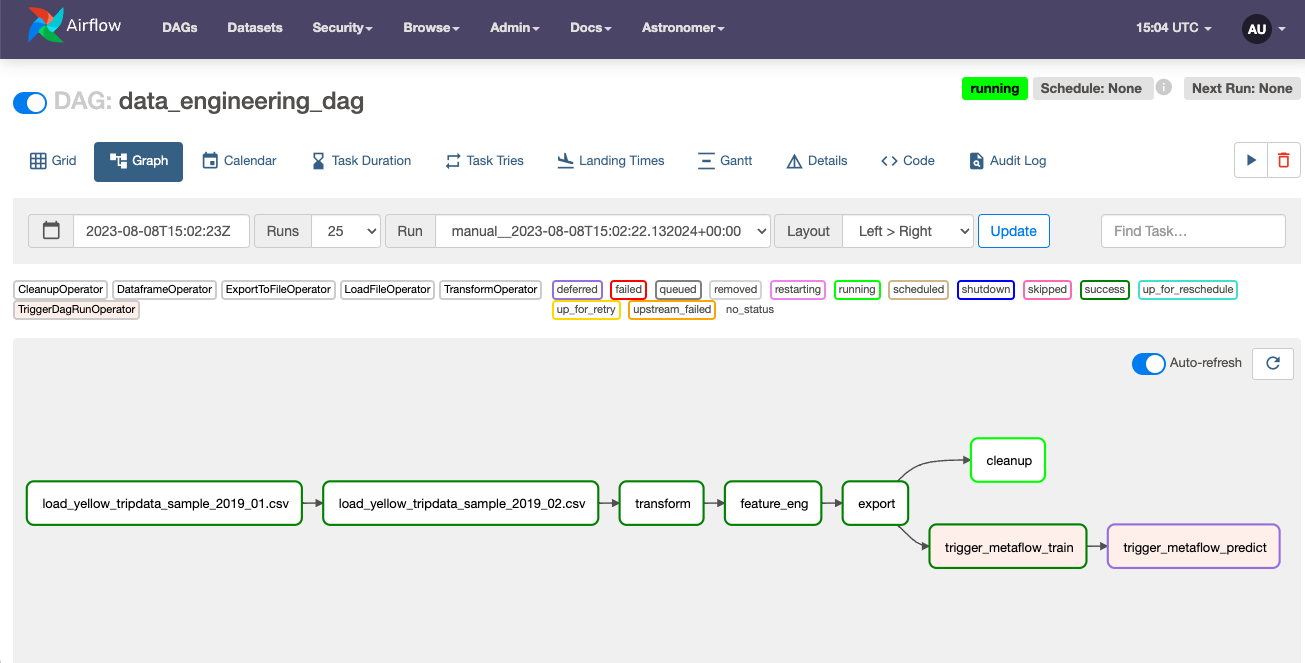

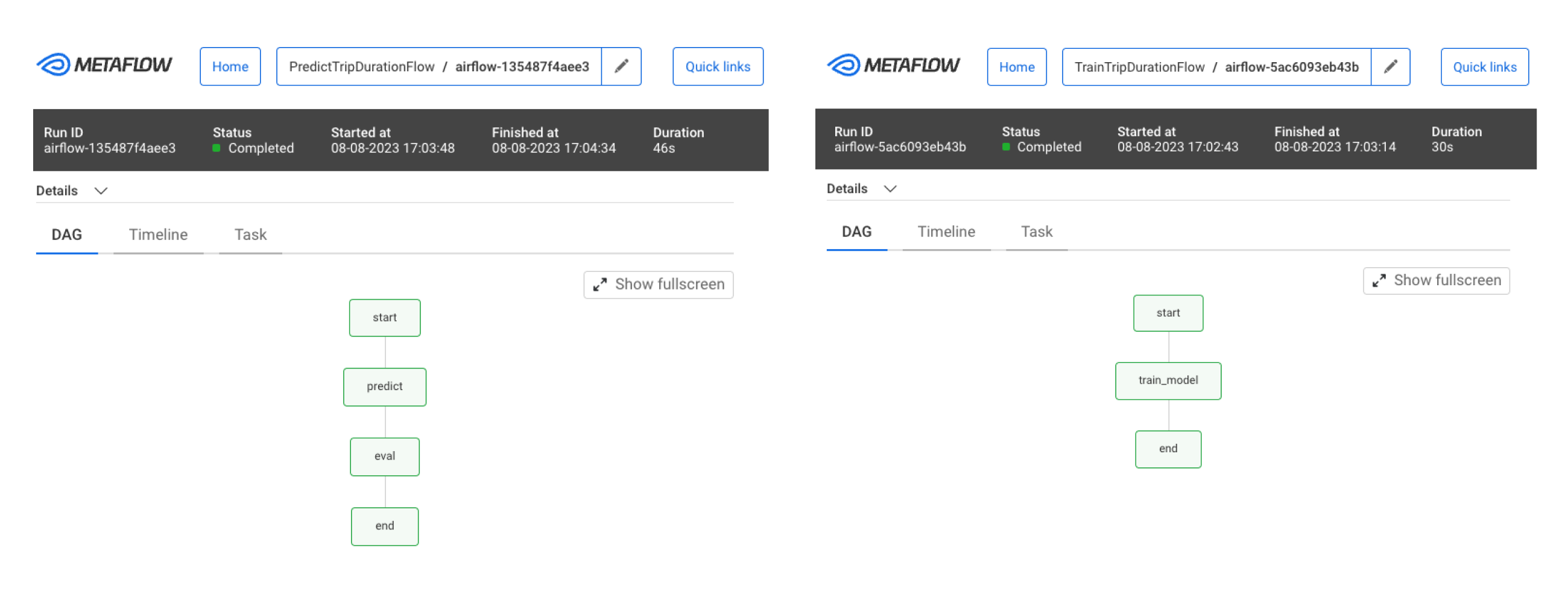

In our sample repository, there is one data engineering DAG and two Metaflows (train and predict) built by the ML team.

The data engineering DAG manages the dataset: it loads, refines, and then saves the transformed data as a CSV file compatible with pandas DataFrames.

The ML team’s DAGs use this file to execute two workflows in sequence. The first workflow trains a model, and the second uses the trained model to predict results. All artifacts created during the Metaflow DAG run are automatically stored by Metaflow and accessible through the Metaflow client API. This feature is consistent across orchestrators and even in local runs. This ability to checkpoint and version artifacts created during a workflow execution helps build and maintain complex pipelines with data dependencies.

Below is a Metaflow code snippet from the example prediction flow, which looks up the latest successful training run so that its model can be reused for prediction in a later step.

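    @step
    def start(self):
        from metaflow import Flow, S3
        import pandas as pd

        # Download the feature table from S3 and load it while the
        # temporary local copy still exists (inside the `with` block).
        with S3() as s3:
            data = s3.get('s3://taxi-data/taxi_features.parquet')
            self.taxi_data = pd.read_parquet(data.path)

        # Drop the listed columns; the remaining columns are stored as self.X.
        self.X = self.taxi_data.drop(columns=['pickup_location_id', 'dropoff_location_id', 'hour_of_day', 'trip_duration_seconds', 'trip_distance'])

        # Look up the latest successful run of the training flow.
        flow = Flow('TrainTripDurationFlow')
        self.train_run = flow.latest_successful_run

        self.next(self.predict)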

The Flow object in Metaflow provides access to artifacts created by previous executions of a flow (here, TrainTripDurationFlow). In this snippet, it retrieves the latest successful run of TrainTripDurationFlow, and the model produced by that run is then used to create predictions.
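As a minimal sketch of how a later step, or a notebook, might read an artifact from that run through the client API: the helper below is hypothetical, and the artifact name `model` used in the usage note is an assumption, since the training flow’s artifact names are not shown in this post. The `flow_factory` parameter exists only so that the lookup can be exercised without a Metaflow backend; by default it uses `metaflow.Flow`.

```python
from typing import Any, Callable, Optional


def latest_artifact(
    flow_name: str,
    artifact_name: str,
    flow_factory: Optional[Callable[[str], Any]] = None,
) -> Any:
    """Return one artifact from the latest successful run of a flow."""
    if flow_factory is None:
        # Imported lazily so this module loads even where Metaflow is not installed.
        from metaflow import Flow
        flow_factory = Flow

    run = flow_factory(flow_name).latest_successful_run
    if run is None:
        raise LookupError(f"No successful run found for flow {flow_name!r}")

    # run.data exposes the artifacts of the run's final step as attributes.
    return getattr(run.data, artifact_name)
```

For example, `latest_artifact('TrainTripDurationFlow', 'model')` would return the object the training flow stored as `self.model`, provided the flow stores one under that name.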

When the ML team is ready to deploy the workflows on Airflow, they simply export the Metaflow DAGs into Airflow-compatible DAGs using the following commands.

    cd /usr/local/airflow/dags
    python ../include/train_taxi_flow.py airflow create train_taxi_dag.py
    python ../include/predict_taxi_flow.py airflow create predict_taxi_dag.py
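The same export can be scripted when there are several flows to convert. The sketch below only rebuilds the `airflow create` invocations from the commands above; the helper names and the `FLOWS` mapping are illustrative, and it assumes it is run from the `dags` directory with Metaflow installed for the current interpreter.

```python
import subprocess
import sys
from typing import Dict, List

# Flow source file -> Airflow DAG file to generate (paths taken from the commands above).
FLOWS: Dict[str, str] = {
    "../include/train_taxi_flow.py": "train_taxi_dag.py",
    "../include/predict_taxi_flow.py": "predict_taxi_dag.py",
}


def export_command(flow_file: str, dag_file: str) -> List[str]:
    """Build the `python <flow> airflow create <dag>` command as an argument list."""
    return [sys.executable, flow_file, "airflow", "create", dag_file]


def export_all(flows: Dict[str, str]) -> None:
    """Run the export for every flow, stopping at the first failure."""
    for flow_file, dag_file in flows.items():
        subprocess.run(export_command(flow_file, dag_file), check=True)
```

Calling `export_all(FLOWS)` regenerates both DAG files in one go, which can be convenient in a build or CI step whenever a flow changes.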

Airflow uses the TriggerDagRunOperator to automatically trigger the Train and Predict DAGs when new data arrives.
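A minimal sketch of what such trigger tasks could look like, assuming Airflow 2.4 or later (for the `schedule` argument and the `airflow.operators.trigger_dagrun` import path). The DAG ids passed in are assumptions: they must match whatever ids the exported DAGs actually have. `trigger_kwargs`, `build_trigger_dag`, and the `trigger_ml_flows` id are illustrative names, not code from the sample repository.

```python
from datetime import datetime
from typing import Any, Dict, List


def trigger_kwargs(dag_ids: List[str]) -> List[Dict[str, Any]]:
    """Build TriggerDagRunOperator arguments, one set per downstream DAG."""
    return [
        {
            "task_id": f"trigger_{dag_id}",
            "trigger_dag_id": dag_id,
            # Block until the triggered DAG finishes so the next trigger runs after it.
            "wait_for_completion": True,
        }
        for dag_id in dag_ids
    ]


def build_trigger_dag(dag_ids: List[str]):
    """Create a DAG that triggers the given DAGs one after another."""
    # Imported lazily so this module can be loaded without Airflow installed.
    from airflow import DAG
    from airflow.operators.trigger_dagrun import TriggerDagRunOperator

    with DAG(
        dag_id="trigger_ml_flows",  # hypothetical id
        start_date=datetime(2023, 1, 1),
        schedule=None,  # no schedule: runs only when triggered
        catchup=False,
    ) as dag:
        previous = None
        for kwargs in trigger_kwargs(dag_ids):
            task = TriggerDagRunOperator(**kwargs)
            if previous is not None:
                previous >> task  # train first, then predict
            previous = task
    return dag
```

In a DAG file, assigning the result of `build_trigger_dag([...])` to a module-level variable lets the scheduler discover it. The same operators could instead be appended after the task that writes the dataset in the data engineering DAG.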

Since workflows share a universal syntax, the Data Engineering and ML teams can collaboratively orchestrate tasks by merging these workflows using Airflow’s syntax, as demonstrated here.

Summary

In this post, we emphasized the importance of centralized orchestration systems to enable cross-team collaboration. Following the patterns outlined here, you can use Metaflow to develop and test ML and data science applications quickly — including LLMs and other GenAI models — and orchestrate them on the same Airflow orchestrator that is used by all other data pipelines.

Thanks to using a centralized orchestrator, all operational concerns can be monitored and addressed through a single system, without having to triage across siloed environments.

As next steps...

- Run the Metaflow+Airflow pattern demonstration by following along in the repo.

- Find more information about open-source Metaflow at docs.metaflow.org

- Try Astro Hosted Apache Airflow.