We recently sat down with Yudhiesh Ravindranath, an MLOps Engineer at MoneyLion, to discuss how his team uses Metaflow, among many other great tools, and how it has impacted machine learning more generally at MoneyLion.

What type of ML questions do you work on at MoneyLion and what type of business questions do they solve?

At MoneyLion our mission is to rewire the financial system to positively change the path of every hard-working American. We offer an all-in-one mobile banking experience, covering banking, zero-interest cash advances, and much more. We are a data-driven organization that uses data science to empower our customers, and we use machine learning heavily in our day-to-day operations, including but not limited to: credit scoring, fraud detection, bank data enrichment, and personalized recommendations.

What did your ML stack look like before you adopted Metaflow and what pain points did you encounter?

Prior to adopting Metaflow, our data science team built machine learning pipelines mostly in a single-node HPC environment (an AWS EC2 instance with 500 GB of RAM and 64 CPU cores), as well as with Apache Airflow running on Kubernetes, which our data engineering team uses heavily.

We were hitting the limits of vertically scaling our HPC environment as we grew to 40+ data scientists this year. All of them had access to it, and we were running workflows with differing compute needs on it. This resulted in constant memory alerts and crashes of the entire environment, which took down every workflow running on it. While it was easy to run workflows this way, it had become difficult to scale them any further or to keep workflow executions independent of one another.

In the MLOps team, I was tasked with building a continuous training pipeline for one of our credit scoring models and, for the reasons above, decided to use our Apache Airflow setup instead. It let me run a complex pipeline with high compute requirements, but at the cost of being much more complex to set up. The team's goal was for any data scientist to be able to set up these continuous training pipelines on their own, but Apache Airflow was too complex for that, because data scientists would need to know how to:

- Use Docker,

- Build a CI/CD pipeline to push the Docker images to AWS ECR, and

- Build a CLI application.

These things can be learned over time, but I felt there had to be an easier way.

What questions do you think about answering when adopting new ML technologies and how do you make decisions about what to adopt?

The MLOps space is getting overcrowded with tools that promise heaven and earth, so I really do suffer from decision paralysis when picking a tool to adopt. I split tools into two categories (note that mutability here refers to whether or not a tool replaces preexisting tools in an organization):

- Mutable tools:

    - Tools that are meant to replace preexisting tools in your organization;

    - Must meet a very strict set of requirements on which the new tool outperforms the current one;

    - Take more time to roll out;

    - Example: migrating from AWS Redshift to Snowflake.

- Immutable tools:

    - Tools that solve a problem the organization does not currently handle;

    - Requirements are less strict, since the problem area the tool addresses is still filled with uncertainty;

    - Take less time to roll out;

    - Example: using Great Expectations for data quality checks when such checks were not previously incorporated into ETL pipelines.

Regardless of the type of tool, the evaluation framework I employ is the same.

One of the very first things to do is to ask yourself whether the tool you are considering actually solves a big enough problem for the organization. For example, suppose you are considering tool X, which supposedly makes model serving Y% faster: is model serving such a bottleneck for the organization that it is worth a complete re-architecture to improve it? If not, your time is better spent on another task or on evaluating a more pressing business problem.

Every day I get blasted with new tools that promise to improve our machine learning development process, and it's easy to suffer from FOMO, but it's very important not to lose sight of the bigger picture: the organization's needs.

Once you have determined that tool X is actually worth trying out, I apply a very simple litmus test: the Time To Complete A Basic Example. If completing a basic example takes more than a couple of hours, the tool is usually not worth my time.

If the tool passes, I spend more time on more complex examples related to our work at MoneyLion, and if I am still happy with it at that point, I start drafting a POC proposal document.

This document highlights the following for important stakeholders:

- What is the problem, and how does this tool solve it?

- What metrics could this tool improve?

- A basic example of the tool, with some code;

- A comprehensive overview of the POC:

    - Concrete use cases to test the tool on;

    - The time needed for the POC;

    - The manpower needed for the POC.

Once the stakeholders give their feedback, and if the POC is approved, we fit it into our sprint planning goals. I would like to highlight how blessed I am to work in a culture at MoneyLion that prioritizes continuous innovation; without it, working in MLOps would be much more difficult.
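The screening funnel described above (problem size, then the basic-example litmus test, then in-house examples, then a POC proposal) can be sketched as a small decision function. This is only an illustration of the order of the checks, not a tool MoneyLion uses; the class, its field names, and the 2-hour threshold (one reading of "a couple of hours") are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold: one reading of the "couple of hours" litmus test.
MAX_HOURS_FOR_BASIC_EXAMPLE = 2.0


@dataclass
class CandidateTool:
    """A tool under evaluation. All fields are illustrative assumptions."""
    name: str
    solves_pressing_problem: bool   # Is the problem a real bottleneck for the org?
    hours_to_basic_example: float   # Time To Complete A Basic Example
    fits_internal_use_cases: bool   # Outcome of trying more complex, in-house examples


def next_action(tool: CandidateTool) -> str:
    """Apply the screening checks in order and return the next step."""
    # Check 1: is the problem big enough to justify any effort at all?
    if not tool.solves_pressing_problem:
        return "skip: focus on a more pressing business problem"
    # Check 2: the basic-example litmus test.
    if tool.hours_to_basic_example > MAX_HOURS_FOR_BASIC_EXAMPLE:
        return "skip: basic example took too long"
    # Check 3: more complex examples related to our own work.
    if not tool.fits_internal_use_cases:
        return "skip: does not fit internal use cases"
    # Only a tool that passes every check earns a POC proposal.
    return "draft POC proposal"


if __name__ == "__main__":
    tool = CandidateTool("tool X", True, 1.5, True)
    print(tool.name, "->", next_action(tool))
```

Ordering the checks this way means the cheapest question (is this problem worth solving?) is answered before any hands-on time is spent.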

Why did you choose Metaflow?

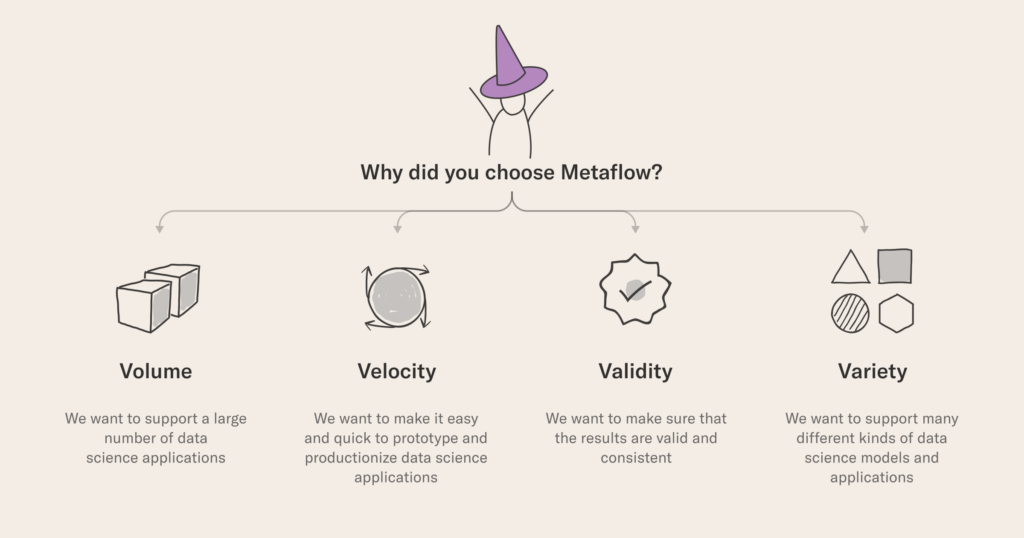

Metaflow helps us achieve the following four V’s:

| Feature | Motivation |
|---|---|
| Volume | We want to support a large number of data science applications |
| Velocity | We want to make it easy and quick to prototype and productionize data science applications |
| Validity | We want to make sure that the results are valid and consistent |
| Variety | We want to support many different kinds of data science models and applications |

What does your stack look like now, including Metaflow?

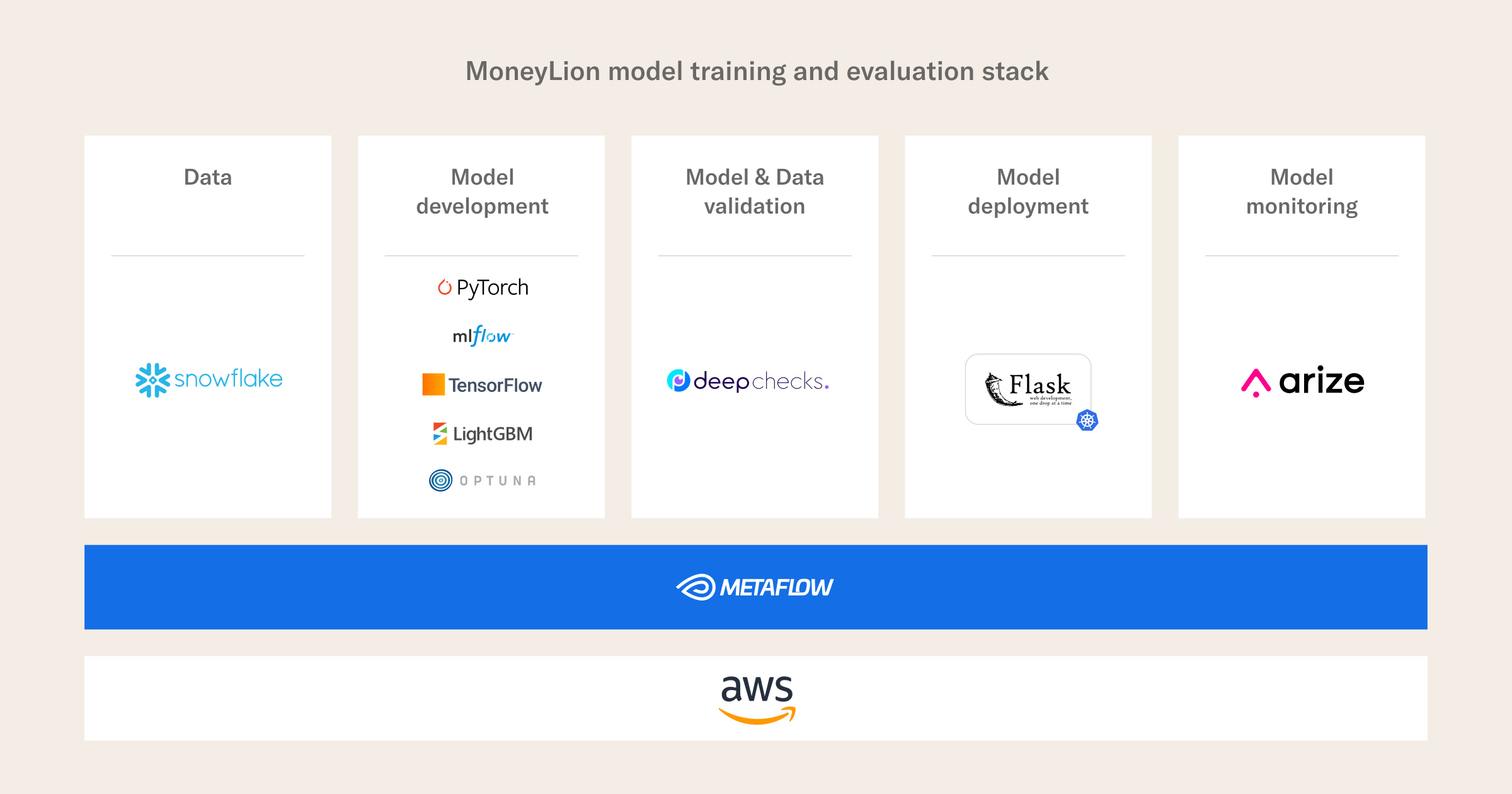

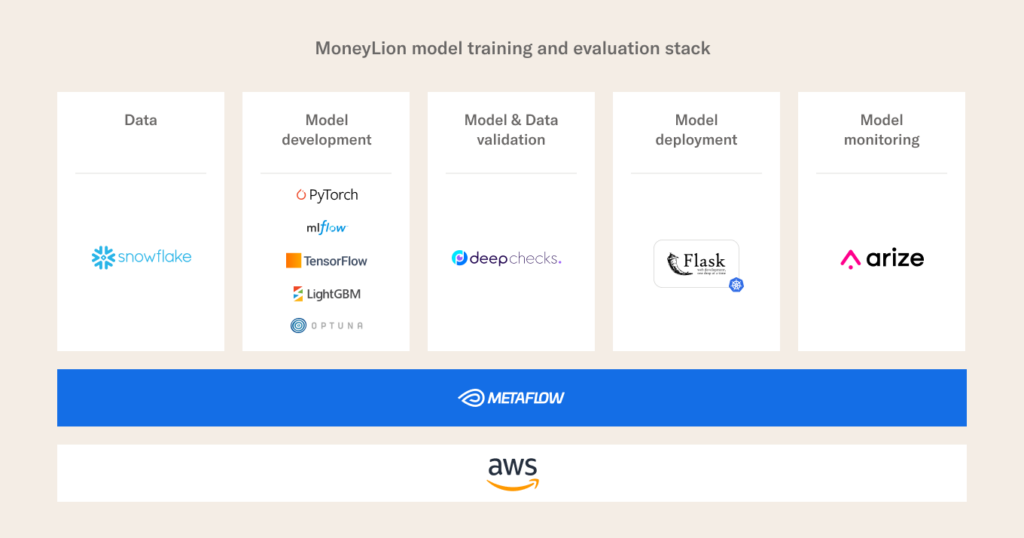

There are many ways to slice the stack, but a useful approximation divides it into data, model development, model and data validation, deployment, and monitoring:

- For the data layer, we use Snowflake;

- For model development, we use a number of tools;

- For model and data validation, we use deepchecks;

- For model deployment, we use Flask running on Kubernetes;

- For model monitoring, we use Arize.ai.

What did you discover about Metaflow after using it? What Metaflow features do you use and what do you find useful about them?

Easily one of my favorite features of Metaflow is that, of all the tools I have tried, it offers the easiest way to store, share, and version data artifacts between steps in a distributed workflow. Coming from Apache Airflow, I had to use XComs to share data between steps in a workflow, but XComs are only suited to small data artifacts, such as a very simple JSON object. Anything bigger required explicitly storing and managing the data in an object store such as AWS S3.

Building on this point, I love the Metaflow Client API and how easy it makes sharing data between users. A very common case is that data scientist A needs data that data scientist B computed some time ago. Previously, we could only pray that data scientist B had saved the data and remembered where. If not, we had to recompute it (if we even still had the code to do so!), which slowed data scientist A down significantly and increased our costs. Now, as long as the workflow runs through Metaflow, we never have to worry about this again: the Client API and the artifact store make it super easy to fetch the data.

How has using Metaflow changed ML at MoneyLion?

We are still in the early stages of using Metaflow at MoneyLion, but based on feedback from the data scientists who use it, the main thing it has enabled is an increase in the velocity at which we operate as an organization. Less time is spent building and scaling pipelines, figuring out how to share data between users and workflows, and provisioning resources for workflows, and, importantly, more time is spent doing data science.

Join our community

If these topics are of interest, you can find more interviews in our Fireside Chats, and come chat with us on our community Slack.