We recently celebrated two book launches with a panel and release party during a live event on YouTube. You can watch it here:

During and after the event, the book authors, Chip Huyen and Ville Tuulos, answered questions from the audience on many topics in the #ama-guests channel on the Outerbounds Slack (you should join too!). The remainder of this post contains a collection of these questions and answers organized by the following themes:

- MLOps Landscape

- Building and Investing in an MLOps Organization

- Deploying ML Systems

- Metaflow Questions

If you’re interested in these types of conversations, make sure to join our next fireside chat, “Operationalizing ML: Patterns and Pain Points from MLOps Practitioners” with Shreya Shankar, and the subsequent AMA!

MLOps Landscape

From a DevOps perspective, what would be the new techniques/frameworks/programming languages we have to use/learn to switch to MLOps?

When it comes to languages, Python is pretty unavoidable with today’s ML frameworks. It’s pretty helpful with data processing too. From the DevOps perspective, the three biggest systems you have to consider are:

- A data platform/warehouse such as Snowflake or Databricks.

- A compute layer for processing data and training models such as AWS Batch or Kubernetes.

- A workflow orchestrator for running workflows reliably such as Argo Workflows.

There are other components too, like experiment tracking, but from the DevOps perspective, their footprint is smaller. If you need real-time data, you need to choose your data platform and compute layer accordingly (e.g. Flink).

Ten years from now, what might the landscape of MLOps or Infra look like?

You can listen to Ville’s in-depth take in this video.

Big players like Databricks and Amazon are using Jupyter-like interfaces as code editors. How can this affect (positively /negatively) the CI/CD of the ML pipeline?

For starters, SageMaker uses a customized version of JupyterLab as its IDE. Technically you can use JupyterLab to create Python files as with any other IDE, so it has no special impact. I presume you are hinting at actually using .ipynb files as production-ready code containers. As far as I know, Databricks isn’t Jupyter-native anyway, so that’s a separate case. Using .ipynb has a number of implications. In particular, as of today, creating importable modules and packages as notebooks isn’t well supported, which makes it hard to create more complex apps as notebooks. At least with Metaflow, we recommend that you write your ML workflows as Python files as usual (you can certainly use JupyterLab for that!) and use notebooks alongside for analysis (where they really shine). If you do this, all existing GitOps and CI/CD tooling works as before.

With big tools like Snowflake, Databricks, and Firebolt, do you see all of these platforms trying to scoop up all of the different “data” needs? For example, Snowflake acquired Streamlit. Or will more “specialized niche” tools dominate, like dbt, Airbyte, Astronomer, Prefect, etc.? Or will we see more of a “single” space winning, like Snowflake’s Streamlit acquisition, Snowpark, and the coming of Snowflake Materialized Tables (for Kafka topics)?

Both Snowflake and Databricks are increasingly portraying themselves as “complete data platforms”, so they are definitely trying to bundle everything. Interestingly there’s a counterforce of unbundling the data platform through engine-agnostic open-source tools like Iceberg and Arrow, and all kinds of specific tools for data catalogs, data quality, etc. There are many benefits to bundling (potentially better user experience, less complexity, fewer vendors) and maybe equally many to unbundling (more flexibility, less vendor lock-in, maybe more cost-effective, use the best tool for the job). Hence my crystal ball says that we will stay forever floating somewhere between these two gravitational forces – some things will get bundled, some don’t.

How do you standardize something, say ML as a subject, that is evolving so rapidly? In ML, things come and go pretty fast. So, with the overflowing research and introduction of new topics in ML, do you think this subject will ever reach that kind of standardization or will remain research-heavy for quite some more time? Or do you think there’s a standardization that’s already taking place?

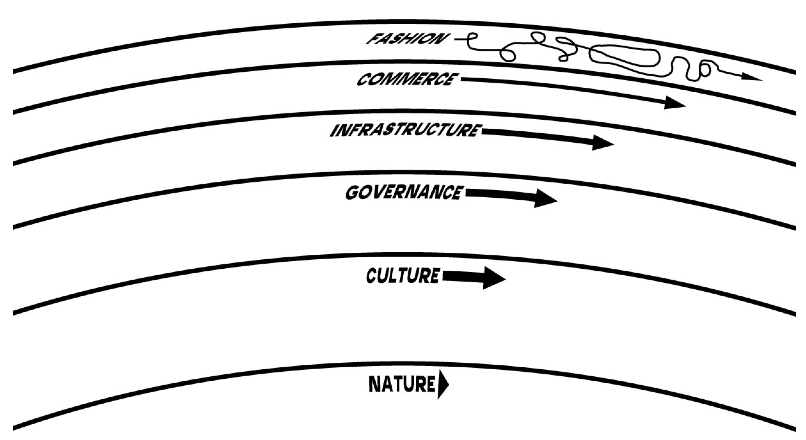

I think this concept of pace layering is relevant (read more about it here):

Some things in ML move fast, like “the fashion” and “the commerce” layers in the figure, but many things move much more slowly (including infrastructure like what’s handled by Metaflow). Logistic regression is still the most widely used method in businesses and it hasn’t changed in decades. NumPy is still the best library for representing numerical data in Python and it is 15 years old.

A big goal of education is to help people distinguish between the fast and slow layers. Once you know the fundamentals, you start seeing that things that matter don’t actually move that fast. There’s just a lot of noise which you can mostly ignore.

What practices or tooling in the MLOps space could help personal ML projects/research that isn’t too overkill on the infrastructure side? Say for an individual with just a single GPU on a desktop.

I guess it depends on what you want to do. If you focus on model development/research, just a notebook probably goes a long way, maybe combined with a tool like Weights & Biases or TensorBoard for basic monitoring. On the other hand, if you want to prototype end-to-end ML-powered applications, then you probably want to consider a tool like Metaflow, which gets you on the right track with minimal overhead. Just pip install metaflow to get going!

Building and Investing in an MLOps Organization

For a startup company wanting to tap into the ML domain, to build out an in-house ML team, is it necessary to have ML engineers at the beginning, or can it be covered by another engineering team? If not, at which stage, do you think it makes sense to have a dedicated ML engineer team? And what are the common mistakes you’ve seen other companies make in this journey?

We see many companies being successful with two roles: engineers who can help with infrastructure and data scientists who can focus on ML. With a tool like Metaflow, data scientists don’t have to worry too much about infrastructure, and engineers don’t have to worry too much about ML. Hence you don’t necessarily need to have unicorn-like ML engineers who can do everything by themselves.

How can you tell if investment in MLOps has made a difference in your organization? And distinguish it from ML research breakthroughs/the skill of the data scientist to make a good model?

There are many differences but I think it mostly boils down to the model not being an island. When doing ML research, you can consider models to be islands: you have a static input data set, and you evaluate the model in a lab setting, maybe just by yourself. In contrast, in a real-world business MLOps environment, the model is connected to:

- constantly changing input data, which introduces many new sources of failure.

- outside systems that interact with the model, which introduces many new requirements for the model (offline-online consistency, stability, etc.).

- a team that probably wants to change the model and the systems around it frequently.

These points make the whole thing 100x more complicated.

How do we convince our leaders to invest in MLOps?

Three steps:

1. First, do you want to invest in ML/data science in the first place? You need to find and define a business scenario where ML/DS actually produces value (in the simplest case, money) for the business.

2. Often it is possible to create a POC that proves the value before deploying a full-scale ML platform / MLOps solution. This is to justify any future investment.

3. Once it is clear that the business really needs an ML/DS solution, MLOps is just a necessary requirement to make it work in a sustainable manner. In the same way, if the business decides to set up an e-commerce store, you need to set up web servers or sign up with a platform like Shopify. MLOps (or ML/DS infra in general) is the same deal.

I’ve seen that companies have a hard time justifying the investment in (3) when they haven’t thought through (1) and (2) properly. A major motivating use case for why we created Metaflow at Netflix was to make it easy and quick (i.e. cheap) to do (2). Once the value is proven, Metaflow makes it possible to gradually harden the solution towards (3) as necessary.

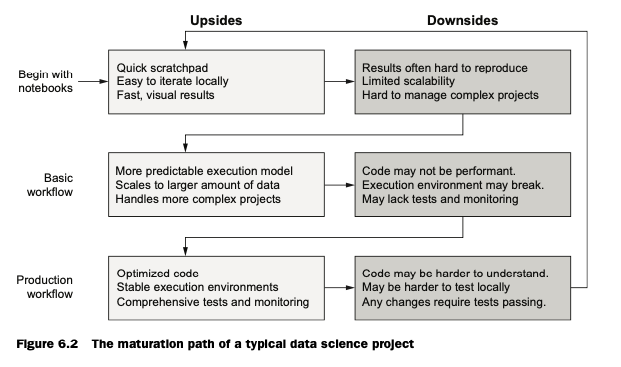

As for an ML project early in development (be it personal or business-related), is it necessary/recommended to invest time and resources into MLOps and all its nuances right from the get-go? Or only when there is a stronger foundation to work on?

There is absolutely no need to think about all production-level concerns from the get-go. In fact, doing so might be counterproductive, since everything comes with a cost. Metaflow very much advocates the idea that you can start simple and harden the project gradually as it becomes more critical. Some projects never need all the belts and suspenders.

Deploying ML Systems

Once an ML Pipeline is defined, what steps should be taken to constantly improve and what metrics should be tracked to see if the pipeline is itself good?

As a new grad, how can I get started with MLOps?

For pipeline health, these basic metrics go a long way:

- Have alerting in place that sends alerts if the pipeline fails or is delayed.

- Have some basic insights/alerts for the final output of the pipeline (Metaflow cards can help here).
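As a rough illustration of the first point, here is a minimal sketch of a failure/delay check. It is not tied to Metaflow or any particular orchestrator: `RunStatus`, `check_run`, and the `max_duration` budget are hypothetical names made up for this example, and in practice you would feed it from whatever run metadata your orchestrator exposes and route the messages to your alerting system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class RunStatus:
    """Hypothetical record of one pipeline run (not a Metaflow type)."""
    started_at: datetime
    finished_at: Optional[datetime]  # None while the run is still in progress
    succeeded: Optional[bool]        # None while the run is still in progress


def check_run(run: RunStatus, now: datetime, max_duration: timedelta) -> List[str]:
    """Return alert messages for a single run; an empty list means healthy."""
    alerts = []
    if run.succeeded is False:
        alerts.append("pipeline failed")
    # For an unfinished run, measure how long it has been running so far.
    end = run.finished_at if run.finished_at is not None else now
    if end - run.started_at > max_duration:
        alerts.append("pipeline delayed")
    return alerts


# Example: a run that started two hours ago and has not finished yet,
# checked against a one-hour budget.
start = datetime(2024, 1, 1, 0, 0)
stuck_run = RunStatus(started_at=start, finished_at=None, succeeded=None)
print(check_run(stuck_run, now=start + timedelta(hours=2), max_duration=timedelta(hours=1)))
```

The check is deliberately a plain function over plain data, so it can run on a schedule outside the pipeline itself. That matters because a pipeline that never starts cannot report its own delay.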

After these two, the next step is to have a dashboard that compares the pipeline outputs/metrics over multiple runs. Catching big diffs between runs is an effective way to catch failures (in data/models).
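To make the idea of catching big diffs concrete, here is a small sketch that compares summary metrics from two runs and flags the ones that moved more than a relative threshold. The function name `find_big_diffs`, the metric names, and the 20% default threshold are all assumptions chosen for illustration; the right metrics and thresholds depend on your pipeline.

```python
from typing import Dict, Tuple


def find_big_diffs(
    previous: Dict[str, float],
    current: Dict[str, float],
    rel_threshold: float = 0.2,
) -> Dict[str, Tuple[float, float]]:
    """Return {metric: (old, new)} for metrics whose relative change
    versus the previous run exceeds rel_threshold."""
    flagged = {}
    for name, new_value in current.items():
        if name not in previous:
            continue  # a brand-new metric has no baseline to compare against
        old_value = previous[name]
        if old_value == 0:
            # Relative change is undefined at zero; flag any move away from it.
            if new_value != 0:
                flagged[name] = (old_value, new_value)
            continue
        rel_change = abs(new_value - old_value) / abs(old_value)
        if rel_change > rel_threshold:
            flagged[name] = (old_value, new_value)
    return flagged


# Hypothetical metrics from two consecutive runs.
last_run = {"row_count": 1000, "auc": 0.90, "null_rate": 0.0}
this_run = {"row_count": 400, "auc": 0.89, "null_rate": 0.0}
print(find_big_diffs(last_run, this_run))
```

Here only `row_count` is flagged, since it dropped by 60% while `auc` barely moved. A sudden drop in input rows like this is a typical sign of an upstream data failure rather than a modeling problem.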

How do you see MLOps fitting into cloud native environments? How different is MLOps from understanding cloud orchestration and general RESTful software development best practices?

You can read this post to understand our view on what makes MLOps different.

How do you make ML Infra resilient?

You need stable foundational infrastructure: stable data platforms (batch or real-time), compute layers, and workflow orchestrators. Luckily, high-quality implementations of such infra are available as open source and/or as managed cloud services. None of these tools are ML-specific, so it’s useful to add a layer like Metaflow on top of them to make them more usable for ML use cases.

As ML becomes more prevalent, it’ll present another attack vector for malicious actors.

- What steps do you think could be taken to increase ML security?

- Do you think increasing security would impact model performance? (latency, accuracy, etc.)

- Do you think AI security also goes hand in hand with AI ethics?

Security, as always, is highly contextual. Some applications need to be much more careful than others – increased security comes with a cost. Consider for instance Netflix recommendations that get their data from a highly controlled environment (Netflix apps) and even if someone manages to spoof the input data for a particular user, there’s a minimal downside. Compare that to, say, facial recognition for passport control at the border where the environment is much more chaotic, there are clear incentives for malicious behavior, and the impact of security breaches is much more drastic. You would approach ML security in fundamentally different ways in the two cases.

Where do you see real-time model deployment going? What seems to be the bottleneck: is it the lack of tooling, model capabilities, or difficulty of filtering noise in real-time?

See Chip’s post on using real-time data to generate more accurate predictions and adapt models to changing environments here.

Between the Reliability, Scalability, Maintainability, and Adaptability features of an ML system, which one will be the very first to pay attention to?

The answers depend on the use case, but I’d say Maintainability. Imagine having an ML system that’s, say, scalable and reliable but not maintainable – it doesn’t sound fun! It sounds like a legacy Fortran codebase. Usually, the best way to achieve Maintainability is to keep the code simple, which is especially important with ML systems that have all kinds of obscure failure modes. As Hoare said:

There are two ways to write code: write code so simple there are obviously no bugs in it, or write code so complex that there are no obvious bugs in it.

Metaflow Questions

Can you version control models/experiments/data in Metaflow?

Indeed you can! Metaflow tracks all runs (experiments) and artifacts (including models and data) automatically and exposes various ways to access and analyze the data afterward, like the Client API.

Is it possible to integrate/introduce Metaflow in a more mature project on other cloud providers, like Azure, IBM, or some other?

Yep! Azure support is already in Metaflow – stay tuned for an official announcement in a few weeks. GCP will soon follow.

What next?

Read the following books:

- Designing Machine Learning Systems: An Iterative Process for Production-Ready Applications

- Effective Data Science Infrastructure

Finally, we have many more upcoming events! Next we will be speaking with Shreya Shankar about operationalizing machine learning. Register today so you won’t miss it!