As the industry works to develop shared foundations, standards, and a software stack for building and deploying production-grade machine learning applications, we are witnessing a growing gap between the data scientists who create machine learning models and the DevOps tools and processes needed to put those models into production. This often results in data scientists building machine learning pipelines that aren’t ready to be deployed or that are difficult to experiment and iterate with. It can also result in models that are neither properly validated nor isolated from relevant business logic.

To reduce this gap between prototype and production, this post shows how you can build a simple production-ready MLOps pipeline using Metaflow, an open source framework that lets data scientists build production-ready machine learning workflows using a simple Python API, and Seldon, which provides a range of products that help companies put their machine learning models into production. In particular, we show you how you can:

- Train and deploy your models locally and then

- Scale out to production with the “flick of a switch” to train on the cloud and deploy on a cluster.

This is a version of a post that first appeared on Seldon’s blog.

ML pipelines and the MLOps workflow

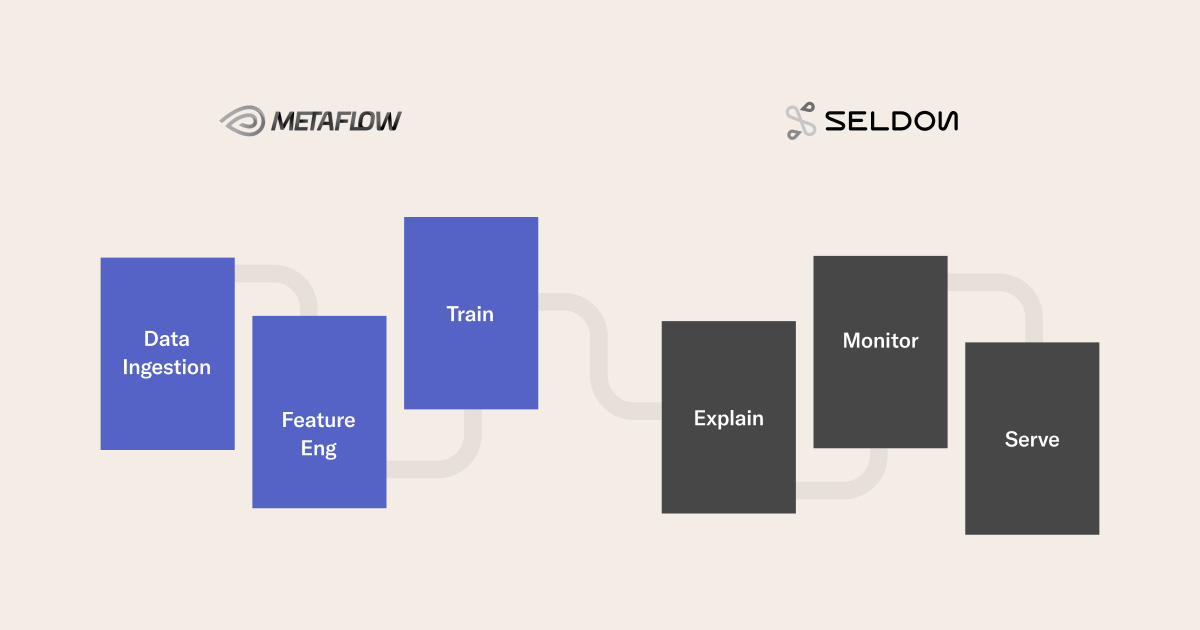

A common pattern in ML is

In this post, we’ll show you how to build out the full ML pipeline using Metaflow, a project that focuses on the first three steps, and Seldon, which concerns itself with the deployment stages of the last three.

Local deployment vs cloud deployment

Data scientists, for the most part and for good reason, prefer to explore, iterate, and prototype their data, models, and pipelines locally. But at some point, they’ll need to move to a cloud-based development and testing environment. This could be for any number of reasons, such as the requirement to use large data lakes for training data or the need to train models at scale (with many GPUs and/or a massively parallel hyperparameter sweep, for example).

They will also need to deploy their model remotely. This could also be for any number of reasons, including the need for a remote, scalable endpoint with no downtime for end users or the need to test their models at scale.

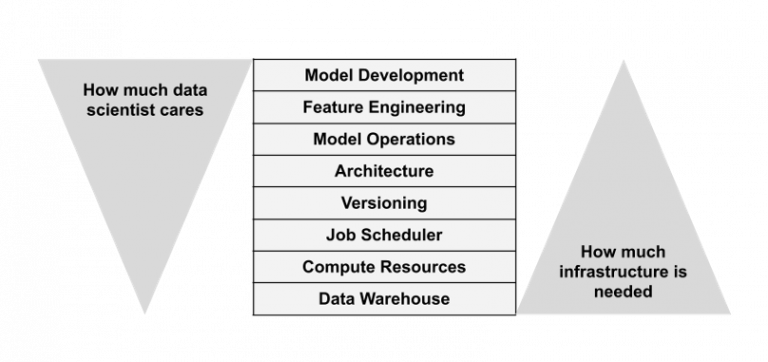

So how do we meet this challenge of allowing both modes of work, local prototyping and remote deployment, with seamless transitions between the two, while having the most ergonomic abstraction layers so that data scientists do not need to get involved in the lower levels of infrastructure?

Reproducible ML pipelines with Metaflow

Data scientists want to focus on the parts of the stack they really care about, such as feature engineering and model development, and to abstract over the aspects they care about less, such as organizing, orchestrating, and scheduling jobs or interacting with data warehouses. To help with this, we’re excited to show you Metaflow, an open source framework, originally developed at Netflix for this specific challenge, that allows data scientists to build production-ready machine learning workflows using a simple Python API. As you’ll see, Metaflow not only provides an out-of-the-box way to build ML pipelines and to track and version the results, ensuring every run is reproducible, but it also allows you to move seamlessly between your local prototyping environment and cloud-based deployments.

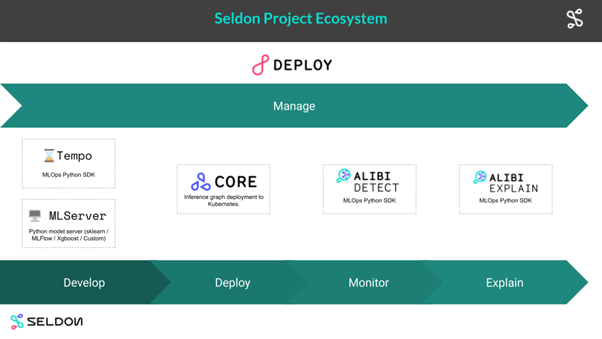

Open source and ergonomic model deployment with Seldon

The second moving part that we need is a framework that allows any set of machine learning models to be deployed, scaled, updated, and monitored. The OSS Seldon ecosystem of tools provides flexible functionality for these tasks (moreover, it can be included in a Metaflow flow!):

    sklearn_model = Model(
        name="test-iris-sklearn",
        platform=ModelFramework.SKLearn,
        local_folder=sklearn_local_path,
        uri=sklearn_url,
        description="An SKLearn Iris classification model",
    )

Data scientists can define simple Model classes like this or via decorators and can also combine components with any custom Python code and library, allowing custom business logic for inference. Given such models or pipelines, Tempo, the Python SDK in the Seldon ecosystem, can deploy your models locally or on the cloud, and you can then run predictions against them.
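To make the idea of custom business logic more concrete, here is a minimal plain-Python sketch of an inference function that combines two models. It deliberately does not use the Tempo API: `combine_predictions`, `sklearn_stub`, and `xgboost_stub` are hypothetical names, the stubs are toy threshold rules rather than trained models, and the routing rule (trust the first model when it predicts class 1, otherwise fall back to the second) is an assumption chosen purely for illustration.

```python
from typing import Callable, Sequence, Tuple

# A "model" here is any callable that maps a feature vector to a class label.
Predictor = Callable[[Sequence[float]], int]


def combine_predictions(
    primary: Predictor, fallback: Predictor, features: Sequence[float]
) -> Tuple[int, str]:
    """Return (label, source), where source names the model that answered."""
    label = primary(features)
    # Assumed business rule: accept the primary model only for class 1.
    if label == 1:
        return label, "primary"
    return fallback(features), "fallback"


# Hypothetical stand-ins for trained scikit-learn and xgboost models.
# Features follow the Iris order:
# [sepal length, sepal width, petal length, petal width].
def sklearn_stub(features: Sequence[float]) -> int:
    return 1 if 2.5 < features[2] < 4.9 else 0


def xgboost_stub(features: Sequence[float]) -> int:
    return 2 if features[2] >= 4.9 else 0


print(combine_predictions(sklearn_stub, xgboost_stub, [6.0, 2.9, 4.5, 1.5]))
print(combine_predictions(sklearn_stub, xgboost_stub, [6.3, 3.3, 6.0, 2.5]))
```

Because the rule lives in ordinary Python, it can be unit tested on its own before it is wrapped in a deployable artifact.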

Production ML made easier

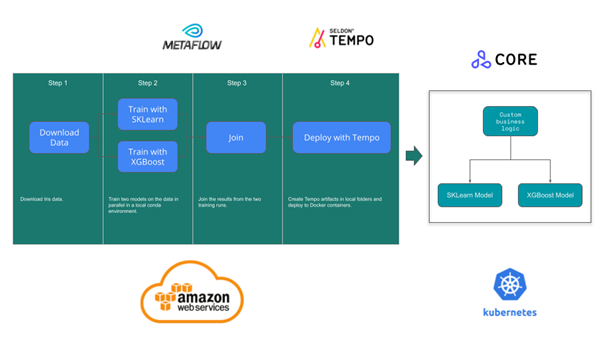

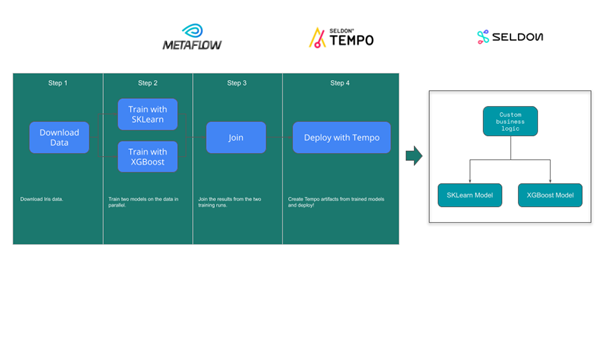

Let’s now consider a typical example of an ML pipeline and show how we can use Metaflow and Seldon together to make production ML easier for working data scientists. We’ll be using the Iris dataset and building two ML models, one from scikit-learn, the other from xgboost. Our steps will be to:

- Define Tempo inference artifacts that call these two models with custom business logic,

- Deploy the models, and

- Use Metaflow to run these steps as shown below.

python src/irisflow.py --environment=conda run

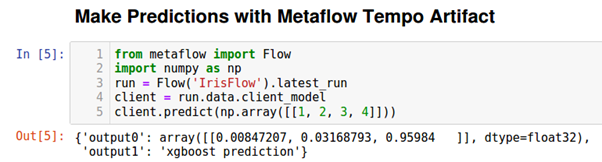

Once the flow has completed, you’ll find three Docker containers. This is where Metaflow comes into its own, allowing us to retrieve model artifacts and use them to make predictions.

Moving to cloud deployment

What we now want is to move between our local workstation and the cloud with as little friction as possible. Once you have the correct infrastructure set up (see details below), the same ML pipeline can be deployed on AWS with the following:

python src/irisflow.py --environment=conda --with batch:image=seldonio/seldon-core-s2i-python37-ubi8:1.10.0-dev run

Note that this is pretty close to the command you used to run your pipeline locally:

python src/irisflow.py --environment=conda run
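The difference between the two commands above can be sketched as a small Python helper that builds either command line. `build_run_command` is a hypothetical helper, not part of Metaflow or Seldon, and it only emits the flags that appear in the commands above.

```python
from typing import List, Optional


def build_run_command(script: str, batch_image: Optional[str] = None) -> List[str]:
    """Build the flow command; pass batch_image to target AWS Batch."""
    cmd = ["python", script, "--environment=conda"]
    if batch_image is not None:
        # The single "switch" that separates the local and cloud invocations.
        cmd += ["--with", f"batch:image={batch_image}"]
    cmd.append("run")
    return cmd


local_cmd = build_run_command("src/irisflow.py")
cloud_cmd = build_run_command(
    "src/irisflow.py",
    batch_image="seldonio/seldon-core-s2i-python37-ubi8:1.10.0-dev",
)

# Arguments present only in the cloud command.
extra_args = [arg for arg in cloud_cmd if arg not in local_cmd]
print(" ".join(local_cmd))
print(" ".join(cloud_cmd))
print(extra_args)
```

Either list could then be handed to `subprocess.run(cmd, check=True)` to launch the flow, assuming the environment is set up as described in this post.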

Once the run on AWS has completed, you’ll find Seldon Deployments running in your cluster:

    kubectl get sdep -n production
    NAME                AGE
    classifier          2m58s
    test-iris-sklearn   2m58s
    test-iris-xgboost   2m58s

You can also use your artifact to make predictions, exactly as before!

Wrapping up

A big challenge in the MLOps and machine learning deployment space is figuring out the tools, abstraction layers, and workflows that allow data scientists to move from prototype to production. Together, Metaflow and Tempo give ML practitioners a powerful way to develop end-to-end machine learning pipelines, with the ability to switch between local and remote development and deployment as needed. On top of this, both projects provide auditing and reproducibility. If you’d like to learn more, check out this fully worked example here.

You can also learn more by joining Metaflow on Slack here and Seldon on Slack here.