Hands-on experience with the full stack of ML infrastructure - no installation required

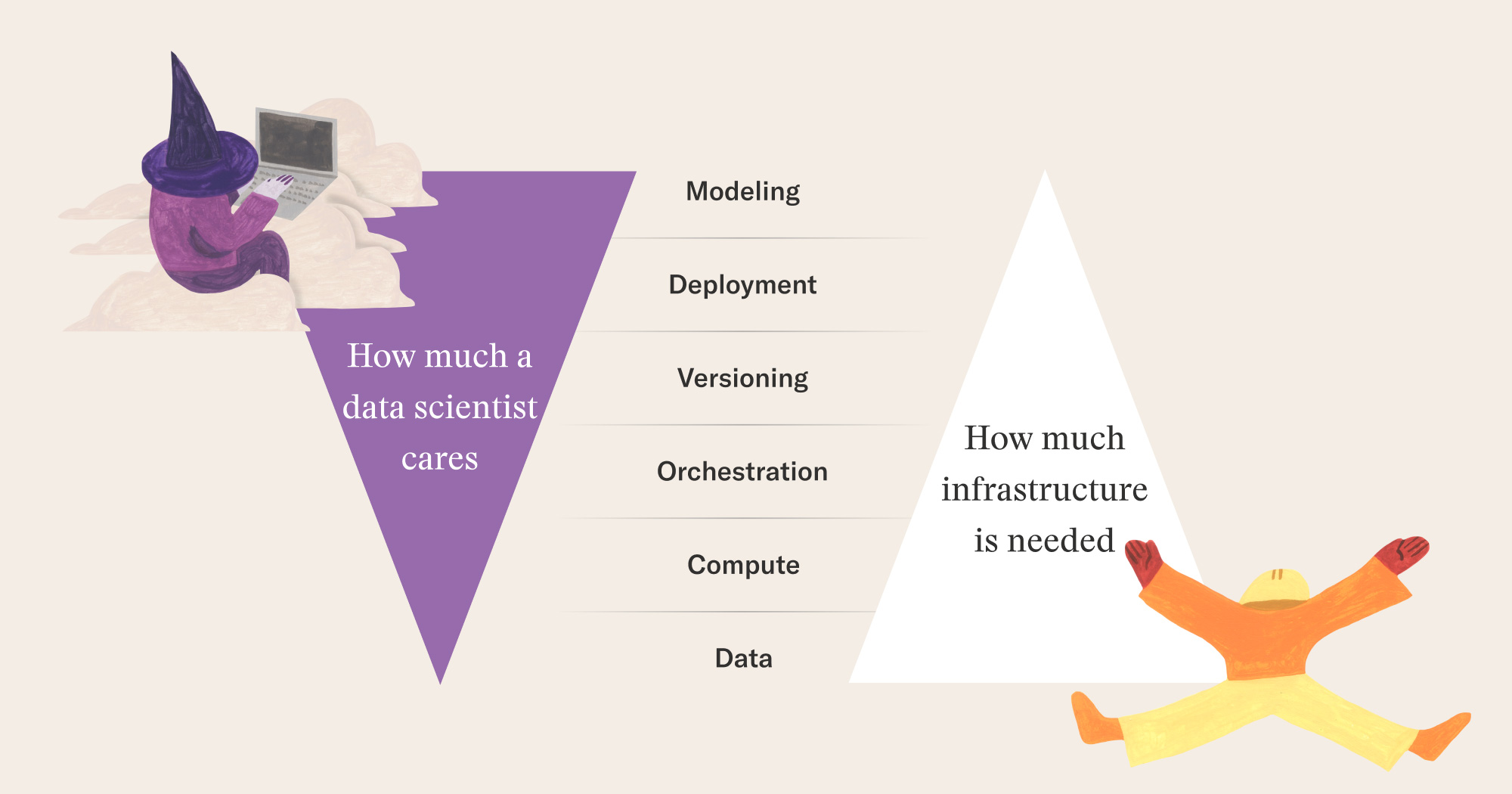

Through our work with open-source Metaflow, which was started at Netflix in 2017, we have had an opportunity to work and talk with hundreds of companies regarding machine learning infrastructure. A common theme across these organizations is that they want to make it quick and easy for their data scientists and ML engineers to promote their work from notebook-based prototypes to production-grade ML systems.

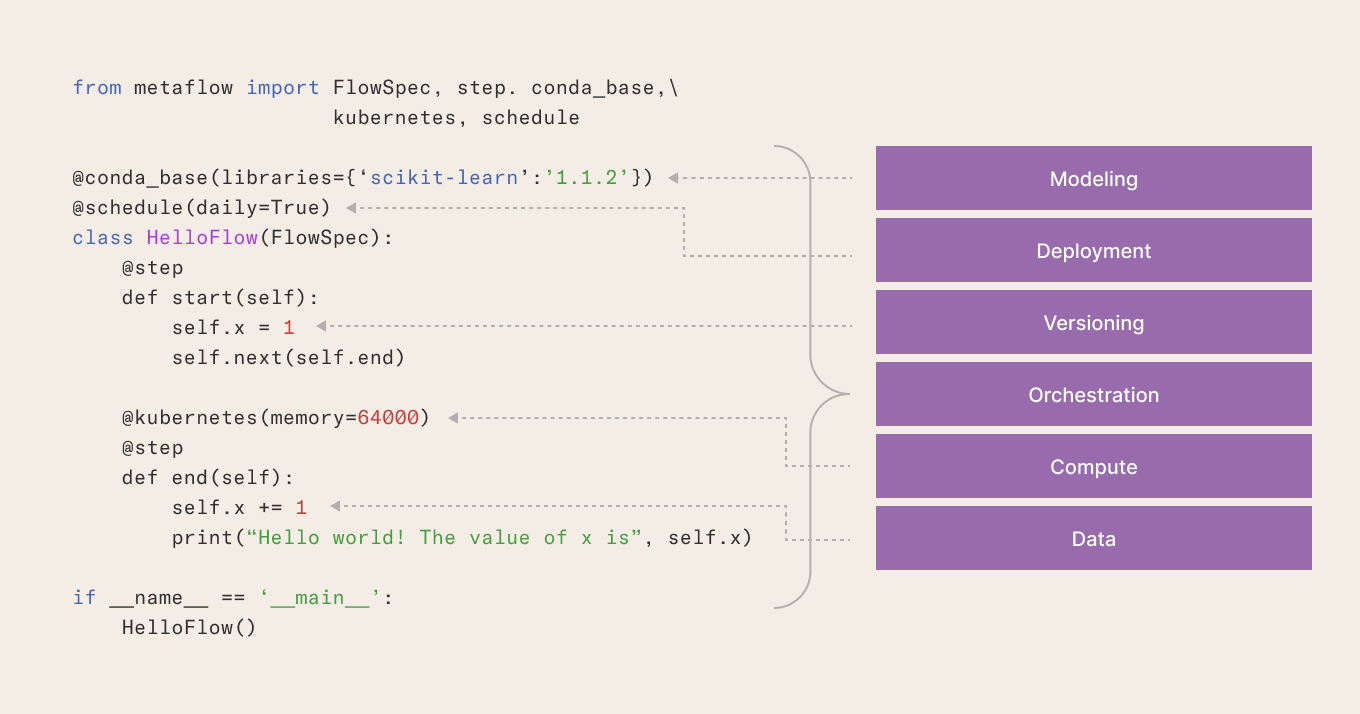

Doing this is not trivial. Comprehensive tooling like that provided by Metaflow helps, but as with any sophisticated real-world activity, there’s no substitute for hands-on practice. However, practicing the whole infrastructure stack, from scalable compute and event-based orchestration to versioning and A/B testing, is not easy for individuals, who rarely have unhindered access to production-grade infrastructure.

To address this gap, we have partnered with CoRise, an e-learning platform targeting professionals. Our new course, which we taught for the first time in April 2023, covers the full stack of ML infrastructure. It lets you experience and experiment with modern ML and the infrastructure behind it directly from your browser, powered by open-source Metaflow, without having to install anything locally. The next course will start in August, so read on to learn more!

“One standout feature of the class was the efficient use of Metaflow sandboxes to streamline the environment setup. This approach allowed participants to focus on learning rather than getting bogged down in complex configuration processes.” –Emily Ekdahl, Senior ML Engineer at Vouchsafe Insurance

“The Metaflow sandbox made it very easy for students to review content, run their projects, and take what they learned back to their colleagues. We had so many learners ask about how they could introduce Metaflow at work!” –Shreya Vora, course coordinator at CoRise

Attending this course were data scientists, machine learning engineers, software engineers, data science managers, solutions architects, engineering managers, heads of data science, directors of ML, and ML consultants from Amazon, Heineken, YNAB, Sequoia, Fidelity Investments, Wadhwani AI, and more. Given such an array of participants and verticals, we thought it important to teach both the technical and business sides of things through real-world, hands-on projects. So how did we do this?

Get your hands dirty with real-world ML projects

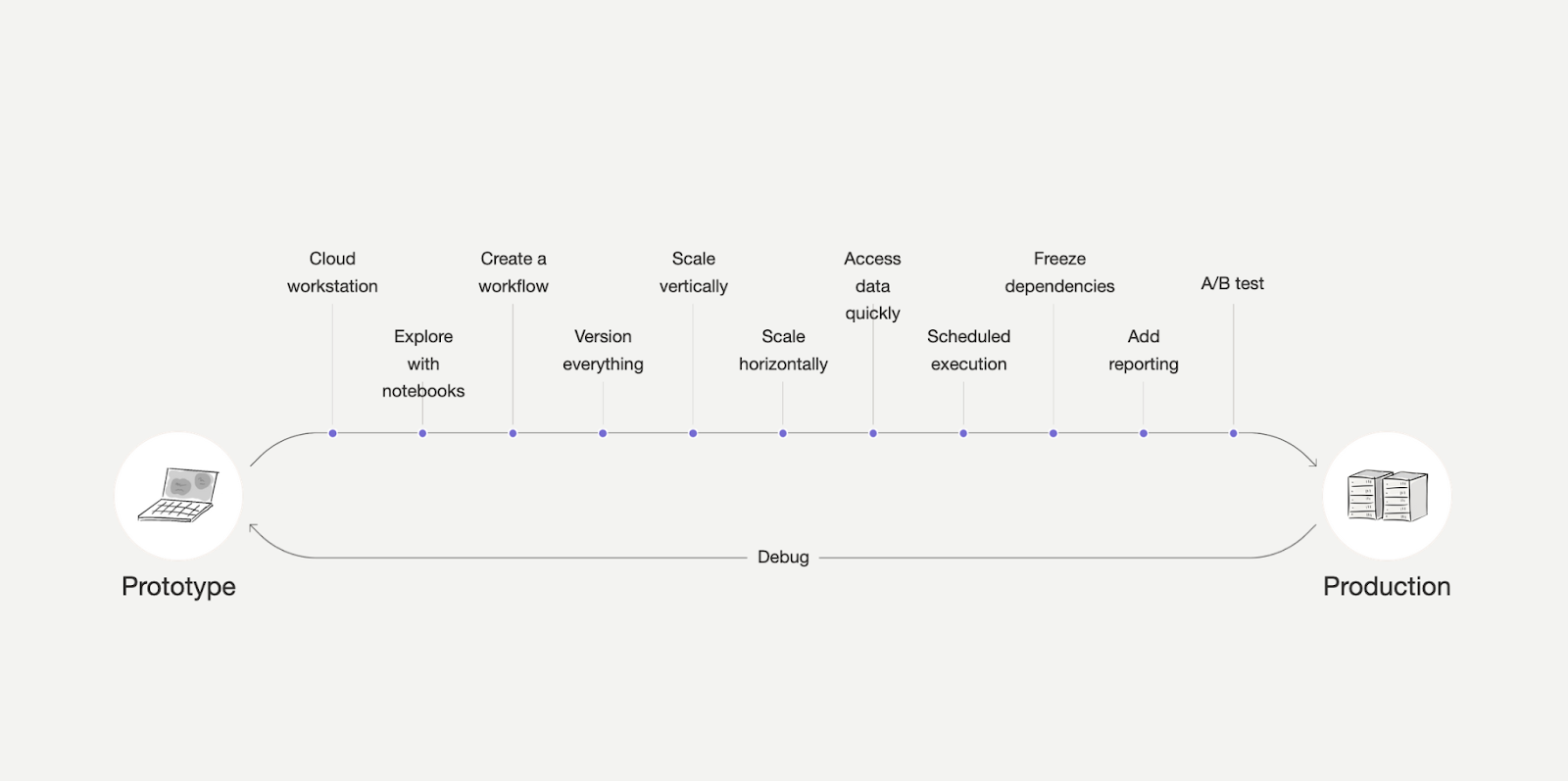

Moving from ML prototype to production is a spectrum, not an on-off switch. In the real-world projects you work on in this course, you get to experience many different elements of production ML, from using cloud workstations, interacting with updating data streams, and versioning your code, models, and data to scheduling execution, freezing dependencies, and A/B testing champion and challenger ML models.

We made sure to focus on real-life scenarios and common failure patterns, drawn from our experience with hundreds of real-life data science projects. These projects involved a real production system with updating data and experiment tracking, leveraging state-of-the-art cloud infrastructure and schedulers, along with building custom, shareable dashboards to document results.

As we are so excited about the projects, we wanted to share with you some of the first cohort’s submissions, along with additional context.

ML workflows and human-readable reports

In week 1, participants build several machine learning workflows and create a custom report using Metaflow cards, with a view to thinking about how essential human-digestible reports are for production ML. Here’s an example of such a report, which includes exploratory data analysis and some model diagnostics:

A report of model prediction metrics produced by a student in the Full Stack Machine Learning CoRise course.

Sign up to join the next cohort to make and share your own ML reports here!

Production deep learning, versioning, parallel training, and the cloud

In week 2, participants dive deeper into modeling techniques in workflows, leveraging state-of-the-art deep learning, versioning with Metaflow, parallel training, and sending specific workflow steps to the cloud (those requiring significant compute, such as parallel training steps). These are all foundational aspects of moving ML workloads from prototype to production, and the ability to send particular steps to cloud compute is becoming more and more important (just look at large language models and generative AI!). Here’s an example of a custom report that a participant submitted:

Model deployment and handling failures

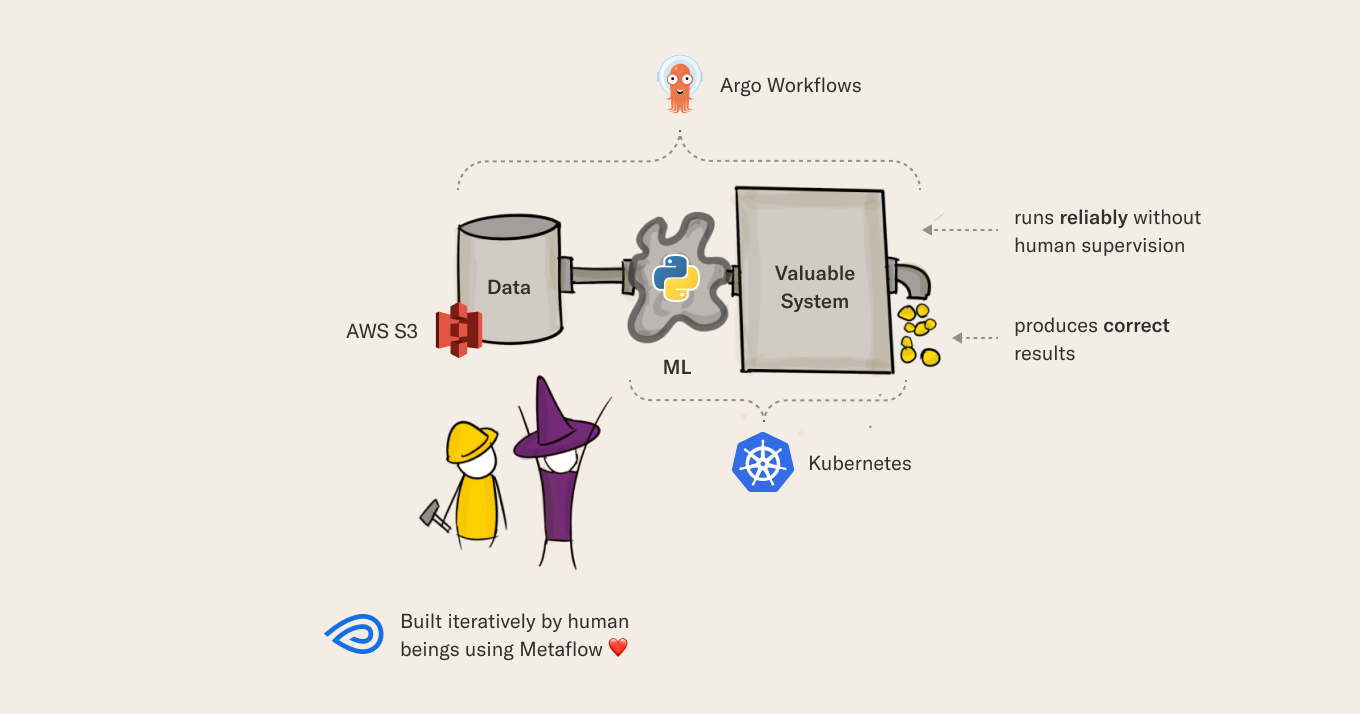

In week 3, things really heat up when we teach everyone how to deploy ML models to production using Metaflow, Argo, and Kubernetes. In their projects, participants deploy their ML models so that they update hourly as new data becomes available! On top of this, they need to deal with a set of commonly occurring failure modes when data is updated. Failure modes aren’t spoken about enough, but dealing with them takes up so much of the real work!

Eagle-eyed readers may notice that the model's performance on the R^2 metric got worse over time. Students are encouraged to think about this type of drift and how to use Metaflow and other tools from the ecosystem to identify and rectify it. If that sounds like a lot to cover in one week, well, it is, but it was aided by Metaflow’s @trigger decorator, which allows you to trigger flows on arbitrary events in your products and ML systems:
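To make the drift side of this concrete, here is a minimal sketch in plain Python (not Metaflow code) of a check that could decide when such an event should fire. It computes R^2 for successive batches of predictions and reports the first batch that falls below a threshold. The function names `r_squared` and `first_drifted_batch`, the sample data, and the 0.5 threshold are all assumptions made for illustration.

```python
from statistics import fmean


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all targets are equal")
    return 1.0 - ss_res / ss_tot


def first_drifted_batch(batches, threshold=0.5):
    """Return the index of the first batch whose R^2 is below threshold, else None."""
    for index, (y_true, y_pred) in enumerate(batches):
        if r_squared(y_true, y_pred) < threshold:
            return index
    return None


# Hypothetical hourly batches of (actual values, model predictions).
batches = [
    ([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8]),  # predictions track the targets
    ([1.0, 2.0, 3.0, 4.0], [2.5, 2.5, 2.5, 2.5]),  # model only predicts the mean
]

drifted = first_drifted_batch(batches)
print("first drifted batch:", drifted)
```

In a deployed system, a result other than `None` is the kind of signal that could publish the event a triggered flow listens for, for example to kick off retraining.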

A/B-testing machine learning models

In week 4, we teach participants how to run machine learning experiments while models are already in production. To do this, we demonstrate how to think about and deploy several types of predictions: batch, streaming, and real-time predictions. In their projects, participants deploy both champion and challenger models and A/B-test them with respect to performance! This is an essential part of ML model maintenance as the real world is in constant flux, resulting in model and data drift. You can see here two custom reports, one for the champion model, the other for the challenger:
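For a feel of the mechanics behind a champion/challenger test, here is a minimal sketch in plain Python. It is not the course's implementation. It assumes a deterministic hash-based traffic split and a comparison on mean absolute error; the names `assign_variant`, `pick_winner`, and `min_improvement`, and the logged outcomes, are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, challenger_share: float = 0.5) -> str:
    """Deterministically route a user to 'champion' or 'challenger'."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # value in [0, 1)
    return "challenger" if bucket < challenger_share else "champion"


def mean_absolute_error(pairs):
    """Average absolute gap between (actual, predicted) pairs."""
    return sum(abs(actual - predicted) for actual, predicted in pairs) / len(pairs)


def pick_winner(outcomes, min_improvement=0.0):
    """Promote the challenger only if it lowers the error by more than min_improvement."""
    champion_mae = mean_absolute_error(outcomes["champion"])
    challenger_mae = mean_absolute_error(outcomes["challenger"])
    if champion_mae - challenger_mae > min_improvement:
        return "challenger"
    return "champion"


# Hypothetical logged (actual, predicted) pairs for each variant.
outcomes = {
    "champion": [(10.0, 12.0), (20.0, 17.0), (30.0, 31.0)],
    "challenger": [(10.0, 11.0), (20.0, 19.0), (30.0, 30.0)],
}

print(assign_variant("user-42"), pick_winner(outcomes))
```

Hashing the user ID keeps each user on the same model across requests, which makes the two groups comparable. A real experiment would also need enough traffic and a significance test before promoting the challenger.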

This is a subset of all the things you need to build ML-powered software that both (1) runs reliably without human supervision and (2) produces correct results.

Although we clearly couldn’t be comprehensive in this post with respect to the contents of the course, we hope that it gave a sense of what we cover.

Take a course and get in touch!

We’re excited to be offering this course again in August and would love for you to join us for it. As a faithful reader of our blog, you can use the code HUGOB10 for a 10% discount!

As stated, we’ve already taught data scientists, machine learning engineers, and many others from companies such as Amazon, Heineken, YNAB, Sequoia, Fidelity Investments, and Wadhwani AI. Add to this the hundreds of companies we’ve worked with through open-source Metaflow, which was started at Netflix in 2017, plus the Metaflow sandboxes, and sometimes we wish we could take the course in addition to teaching it!

If you’d like to get in touch or chat about full-stack ML, come say hi to thousands of data scientists on our community Slack! 👋