Gradient Boosted Trees Flow

In this episode, you will reuse the general flow structure from Episode 1. Specifically, you will replace the random forest model in your flow with an XGBoost model.

1. Write a Gradient Boosted Trees Flow

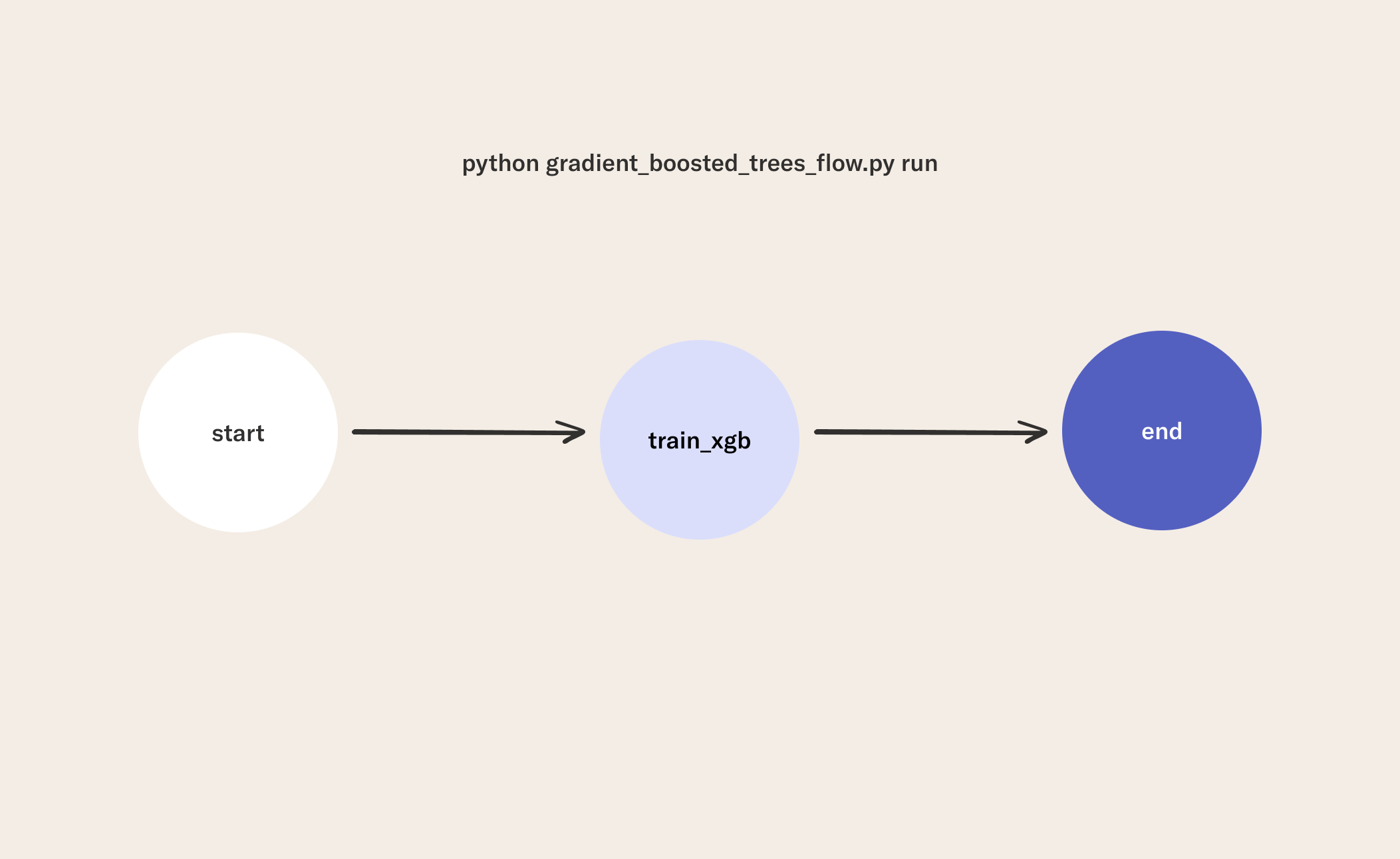

The flow has the following structure:

- Parameter values are defined at the beginning of the class.

- The `start` step loads and splits a dataset to be used in downstream tasks.

- The `train_xgb` step fits an `xgboost.XGBClassifier` for the classification task using cross-validation.

- The `end` step prints the accuracy scores for the classifier.

```python
from metaflow import FlowSpec, step, Parameter


class GradientBoostedTreesFlow(FlowSpec):

    # Flow parameters; each can be overridden on the command line.
    random_state = Parameter("seed", default=12)
    n_estimators = Parameter("n-est", default=10)
    eval_metric = Parameter("eval-metric", default='mlogloss')
    k_fold = Parameter("k", default=5)

    @step
    def start(self):
        # Load the iris dataset and separate features from labels.
        from sklearn import datasets
        self.iris = datasets.load_iris()
        self.X = self.iris['data']
        self.y = self.iris['target']
        self.next(self.train_xgb)

    @step
    def train_xgb(self):
        # Score an XGBoost classifier with k-fold cross-validation.
        from xgboost import XGBClassifier
        from sklearn.model_selection import cross_val_score
        self.clf = XGBClassifier(
            n_estimators=self.n_estimators,
            random_state=self.random_state,
            eval_metric=self.eval_metric,
            use_label_encoder=False)
        self.scores = cross_val_score(
            self.clf, self.X, self.y, cv=self.k_fold)
        self.next(self.end)

    @step
    def end(self):
        # Report mean accuracy and standard deviation across folds, in percent.
        import numpy as np
        msg = "Gradient Boosted Trees Model Accuracy: {} \u00B1 {}%"
        self.mean = round(100*np.mean(self.scores), 3)
        self.std = round(100*np.std(self.scores), 3)
        print(msg.format(self.mean, self.std))


if __name__ == "__main__":
    GradientBoostedTreesFlow()
```
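The summary computed in the `end` step can be checked by hand. The following is a minimal sketch using only the standard library, assuming a made-up list of five fold accuracies (these values are invented for illustration and are not results from this flow). The helper name `summarize` is hypothetical. It relies on the fact that `np.std` defaults to the population standard deviation, which `statistics.pstdev` also computes.

```python
from statistics import fmean, pstdev

# Hypothetical per-fold accuracies, invented for illustration only.
scores = [0.9, 1.0, 0.9, 1.0, 1.0]


def summarize(scores):
    """Return (mean, std) in percent, rounded to 3 decimals like the end step."""
    mean = round(100 * fmean(scores), 3)
    # pstdev is the population standard deviation, matching np.std's default (ddof=0).
    std = round(100 * pstdev(scores), 3)
    return mean, std


mean, std = summarize(scores)
print("Gradient Boosted Trees Model Accuracy: {} \u00B1 {}%".format(mean, std))
```

With only a handful of folds, the standard deviation is a rough indicator of variability rather than a precise estimate.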

2. Run the Flow

```bash
python gradient_boosted_trees_flow.py run
```
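Once a run has finished, the artifacts the flow assigned to `self` (`scores`, `mean`, and `std`) can be read back with Metaflow's Client API. The following is a minimal sketch, assuming at least one successful run of `GradientBoostedTreesFlow` exists in your Metaflow datastore. The helper name `load_latest_results` is hypothetical and not part of Metaflow.

```python
def load_latest_results(flow_name="GradientBoostedTreesFlow"):
    """Return the scores, mean, and std artifacts of the latest successful run."""
    # Imported inside the function so this module loads even without Metaflow installed.
    from metaflow import Flow

    run = Flow(flow_name).latest_successful_run
    if run is None:
        raise RuntimeError(f"No successful run found for {flow_name}")
    # run.data exposes the artifacts available at the end step of the run.
    data = run.data
    return {"scores": data.scores, "mean": data.mean, "std": data.std}
```

Calling `load_latest_results()` from a notebook or a Python shell in the same environment should return the values printed by the `end` step.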

Note that XGBoost has two ways to train a booster model. This example uses XGBoost's scikit-learn API. If you use the XGBoost learning API instead, you will have to use `xgboost.DMatrix` objects for data. These objects cannot be serialized by pickle, so they cannot be stored in `self` directly. See this example to learn how to deal with cases where objects you want to store in `self` cannot be pickled.
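One common way around an unpicklable object is to store only the picklable inputs and rebuild the object inside the step that needs it. The following is a minimal sketch of that pattern using only the standard library. `UnpicklableMatrix` is a hypothetical stand-in for an object such as `xgboost.DMatrix`, and `is_picklable` is an illustrative helper. Neither is part of Metaflow or XGBoost, and this is not necessarily the approach taken in the linked example.

```python
import pickle
import threading


class UnpicklableMatrix:
    """Hypothetical stand-in for an object that pickle rejects."""

    def __init__(self, rows):
        self.rows = rows
        # Lock objects cannot be pickled, so instances of this class cannot either.
        self._lock = threading.Lock()


def is_picklable(obj):
    """Return True if pickle can serialize obj, False otherwise."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, TypeError, AttributeError):
        return False


# Raw data is picklable, so it is safe to assign to self in a step.
rows = [[5.1, 3.5], [6.2, 2.9]]
print(is_picklable(rows))

# The wrapper is not picklable, so keep it in a local variable instead.
matrix = UnpicklableMatrix(rows)
print(is_picklable(matrix))

# A downstream step would rebuild the wrapper from the stored raw data.
restored_rows = pickle.loads(pickle.dumps(rows))
rebuilt = UnpicklableMatrix(restored_rows)
```

In a flow, this means assigning the raw arrays to `self` and constructing the unpicklable object as a local variable in each step that uses it.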

In the last two episodes, you wrote flows to train random forest and XGBoost models. In the next episode, you will start to see the power of Metaflow as you merge these two flows and train the models in parallel. Metaflow allows you to run as many parallel tasks as you want, and the next episode provides a template for doing so.