Flow Artifacts

1. Why Artifacts?

Machine learning centers on moving data. Machine learning workflows involve many forms of data, including:

- Raw data and feature data.

- Training and testing data.

- Model state and hyperparameters.

- Metadata and metrics.

In this episode, you will see how to track the state of data like this with Metaflow.

In Metaflow, you can store data in one step and access it in any later step of the flow, or after the run is complete. To store data with your flow run, assign it as an attribute of self (for example, self.dataset = ...). When you store data this way, Metaflow automatically makes it accessible in downstream tasks of your flow, no matter where those tasks run.

When using Metaflow, we refer to data stored on self as an artifact. Storing data as an artifact of a flow run is especially useful when you run different steps of the flow on different computers. In this case, Metaflow handles moving the data to where you need it!
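To make the idea concrete, here is a toy model of how artifacts can flow between steps. It is not Metaflow's implementation, and the names run_linear and TaskSelf are hypothetical. Each step gets a fresh object (as if it ran on a different machine), and the only thing passed between steps is a pickled snapshot of the attributes assigned to self.

```python
import pickle


class TaskSelf:
    """Hypothetical stand-in for `self` inside one task (not a Metaflow class)."""


def run_linear(steps):
    """Run step functions in order, passing artifacts only as pickled bytes."""
    blob = pickle.dumps({})  # plays the role of a shared datastore between tasks
    for step in steps:
        task_self = TaskSelf()  # fresh object per task, as if on a new machine
        task_self.__dict__.update(pickle.loads(blob))  # load upstream artifacts
        step(task_self)  # the step assigns artifacts to self.<name>
        blob = pickle.dumps(vars(task_self))  # persist every attribute set on self
    return pickle.loads(blob)


def start(self):
    self.count = 1


def increment(self):
    self.count += 1  # reads the value stored by the previous step
    self.note = "incremented"


artifacts = run_linear([start, increment])
print(artifacts)  # {'count': 2, 'note': 'incremented'}
```

Because the steps never share an in-memory object, the only way increment can see count is through the stored snapshot, which is the property Metaflow artifacts give you across machines.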

2. Write a Flow

This flow shows how to use self to track the state of two artifacts, dataset and metadata_description.

The objects in this example are a list and a string. You can store any Python object this way, so long as it can be serialized with pickle.

```python
# artifact_flow.py
from metaflow import FlowSpec, step


class ArtifactFlow(FlowSpec):

    @step
    def start(self):
        self.next(self.create_artifact)

    @step
    def create_artifact(self):
        # Assigning to self creates artifacts that later steps can read.
        self.dataset = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
        self.metadata_description = "created"
        self.next(self.transform_artifact)

    @step
    def transform_artifact(self):
        # Read the upstream artifact, then overwrite it with a new version.
        self.dataset = [
            [value * 10 for value in row]
            for row in self.dataset
        ]
        self.metadata_description = "transformed"
        self.next(self.end)

    @step
    def end(self):
        # Artifacts set in transform_artifact are available here.
        print("Artifact is in state `{}` with values {}".format(
            self.metadata_description, self.dataset))


if __name__ == "__main__":
    ArtifactFlow()
```

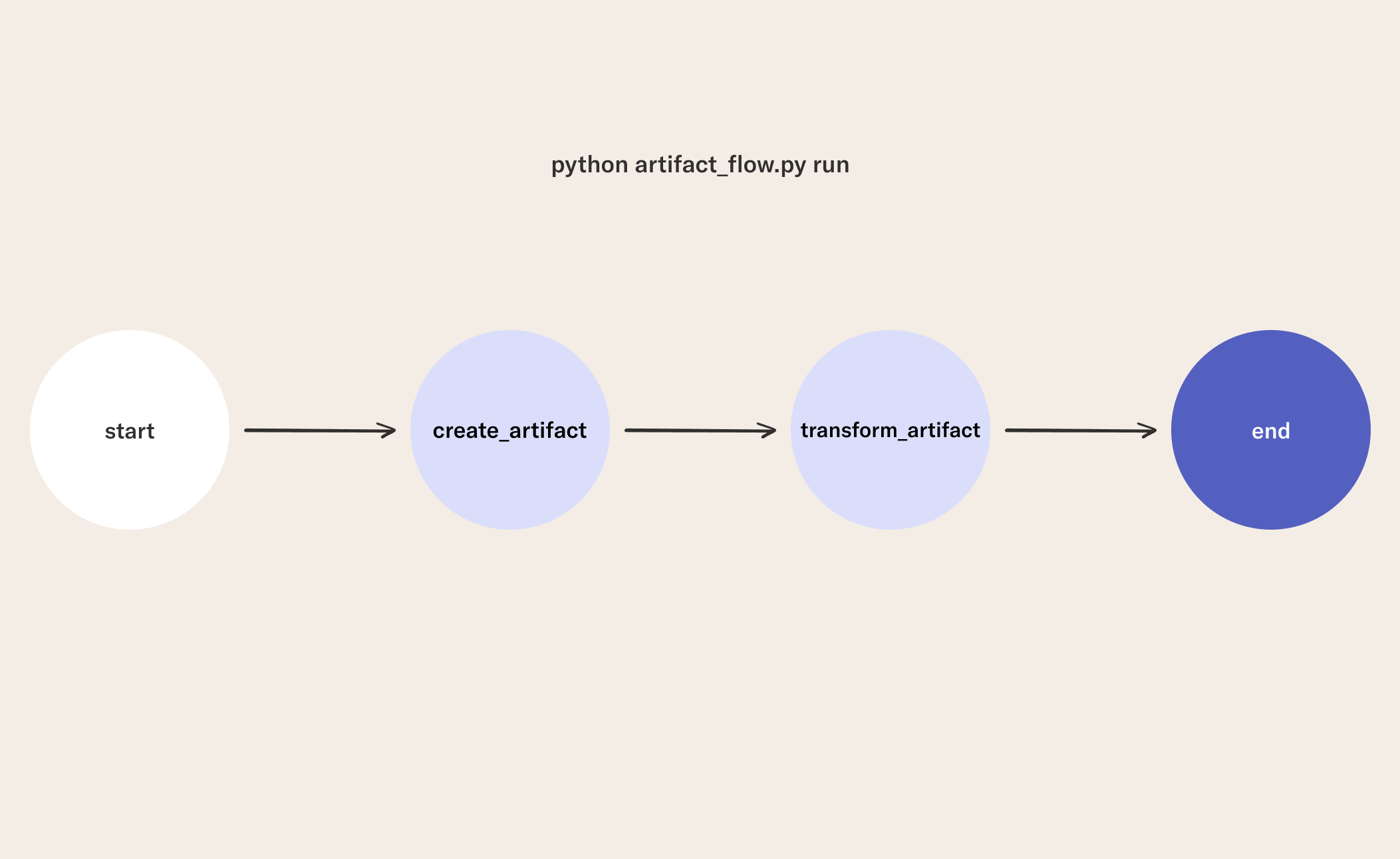
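Since any artifact has to be serializable with pickle, a quick way to check a candidate object before assigning it to self is a pickle round trip. The helper below, is_picklable, is hypothetical and not part of Metaflow; it only uses the standard library.

```python
import pickle


def is_picklable(obj):
    """Return True if `obj` survives a pickle round trip (hypothetical helper)."""
    try:
        pickle.loads(pickle.dumps(obj))
    except Exception:  # e.g. PicklingError, TypeError, AttributeError
        return False
    return True


print(is_picklable([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # True: a list of lists
print(is_picklable("created"))  # True: a string
print(is_picklable(x for x in range(3)))  # False: generators cannot be pickled
```

Objects such as generators or open file handles fail this check, so you would typically convert them to concrete data (for example, a list) before storing them as artifacts.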

3. Run the Flow

```bash
python artifact_flow.py run
```
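To know what the end step should print, you can replay the two data steps in plain Python without Metaflow. This sketch mirrors create_artifact and transform_artifact; in a real run, the printed line appears among Metaflow's own log output.

```python
# Replay of the flow's data steps, without Metaflow.
dataset = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # create_artifact
dataset = [[value * 10 for value in row] for row in dataset]  # transform_artifact

message = "Artifact is in state `{}` with values {}".format("transformed", dataset)
print(message)
# Artifact is in state `transformed` with values [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
```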

In machine learning workflows, artifacts are useful for tracking experiment pipeline properties such as metrics, model hyperparameters, and other metadata. This pattern handles many cases, but it is not ideal for storing big data. If you have a large dataset (e.g., a training dataset of many images), consider using Metaflow's S3 utilities instead.
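Because artifacts are pickled, one rough way to judge whether an object is "big" is to measure its uncompressed pickled size. This is a sketch under stated assumptions: the helpers pickled_size and looks_large are hypothetical, and the 100 MiB cutoff is an arbitrary illustrative value, not a Metaflow limit.

```python
import pickle

# Arbitrary, illustrative cutoff; not a Metaflow limit.
LARGE_ARTIFACT_BYTES = 100 * 1024 * 1024  # 100 MiB


def pickled_size(obj):
    """Number of bytes needed to pickle `obj`, before any compression."""
    return len(pickle.dumps(obj, protocol=pickle.HIGHEST_PROTOCOL))


def looks_large(obj, limit=LARGE_ARTIFACT_BYTES):
    """Hypothetical heuristic: flag objects whose pickle exceeds `limit` bytes."""
    return pickled_size(obj) > limit


small = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(pickled_size(small), looks_large(small))  # a few dozen bytes, False
```

Where the cutoff belongs depends on your infrastructure; the point is only that the cost of an artifact scales with its pickled size.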

4. Access the Flow Artifacts

In addition to observing and updating artifact state during the flow, you can access artifacts from any Python environment after a run is complete. For example, open the accompanying notebook in Jupyter from the same directory where you ran the flow (by default, Metaflow keeps local run data in a .metaflow directory there):

```bash
jupyter lab S1E3-analysis.ipynb
```

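```python
from metaflow import Flow

# Artifacts of the latest ArtifactFlow run, as seen at its end step
run_artifacts = Flow("ArtifactFlow").latest_run.data

# In a notebook cell, this displays the transformed values
run_artifacts.dataset
```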
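Run.data shows artifacts as they were at the end of the run, but Metaflow also keeps the value each step stored. The sketch below collects the state of both artifacts per step. It assumes the Metaflow client pattern run[step_name].task.data (a Step indexed from a Run, its single task, and that task's artifacts); the function name artifact_states is hypothetical. It takes the run as an argument so it can also be exercised with a stand-in object.

```python
def artifact_states(run, steps=("create_artifact", "transform_artifact")):
    """Map each step name to (metadata_description, dataset) as stored by that step.

    `run` is expected to behave like a Metaflow client Run: indexing it by step
    name returns a Step whose `.task.data` exposes that task's artifacts.
    """
    states = {}
    for step_name in steps:
        data = run[step_name].task.data  # artifacts of the step's only task
        states[step_name] = (data.metadata_description, data.dataset)
    return states


# Usage with Metaflow installed and at least one successful ArtifactFlow run:
#   from metaflow import Flow
#   run = Flow("ArtifactFlow").latest_successful_run
#   for step_name, (state, values) in artifact_states(run).items():
#       print(step_name, state, values)
```

For the flow above, you would expect create_artifact to report "created" with the original values and transform_artifact to report "transformed" with the values multiplied by 10.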

In this episode, you have seen how to store artifacts of your flow runs and access the data later. In the next episode, you will see how to add parameters to your flow so you can pass values to it from the command line.