Retrieval-Augmented Generation: How to Use Your Data to Guide LLMs

We continue our series of articles that demonstrate how you customize open-source large language models for your own use cases - see previous articles about fine-tuning and instruction-tuning. This time we focus on a popular technique called Retrieval Augmented Generation which augments the prompt with documents retrieved from a vector database.

An off-the-shelf large language model (LLM) formulates a syntactically correct and often a convincing-sounding answer to any prompt. Sadly, a generic LLM will happily hallucinate an answer even when it has no knowledge of the topic and no access to relevant data.

For example, consider asking an LLM for ideas for what to serve at a dinner party, taking into account the preferences of participants which the model has no knowledge of. Or, consider using an LLM to build a solution specific to your product or business.

In cases like this, we can't assume that a generic off-the-shelf LLM, like an open-source Llama2 model or an OpenAI API, knows much about data that's relevant for the problem. For instance, a public LLM knows nothing about your company's internal documents or discussions. In serious real-life use cases, where random hallunications are not tolerated, you need a way to expose the model to more relevant data.

Exposing LLMs to your data

Let's start by looking at typical approaches for exposing the model to your own data, with the goal of getting more relevant responses from the model.

In the illustration below, we start with a prompt (a query / a question) at the bottom. The large language model is the big blob in the middle, which formulates a response (at the top) to the prompt. The darker the shade, the more relevant the object.

We assume that the prompt is always relevant: The user is specifically interested in topics related to your domain. We’d like the response to be dark too, i.e. as relevant as possible.

This illustration shows four alternative ways to nudge an LLM to produce relevant responses:

- Generic LLM - Use an off-the-shelf model with a basic prompt. The results can be highly variable, as you can experience when e.g. asking ChatGPT about niche topics. This is not surprising, because the model hasn’t been exposed to relevant data besides the small prompt.

- Prompt engineering - Spend time structuring the prompt so that it packs more information about the desired topic, tone, and structure of the response. If you do this carefully, you can nudge the responses to be more relevant, but this can be quite tedious, and the amount of relevant data input to the model is limited.

- Instruction-tuned LLM - Continue training the model with your own data, as described in our previous article. You can expose the model to arbitrary amounts of query-response pairs that help steer the model to more relevant responses. A downside is that training requires a few hours of GPU computation, as well as a custom dataset.

- Fully custom LLM - train an LLM from scratch. In this case, the LLM can be exposed to only relevant data, so the responses can be arbitrarily relevant. However, training an LLM from scratch takes an enormous amount of compute power and a huge dataset, making this approach practically infeasible for most use cases today.

From RAGs to riches

Let's consider another popular technique, Retrieval Augmented Generation (RAG), which combines a prompt engineering approach with a custom dataset. In contrast to fine-tuning workflows, RAG workflows don’t require training of the model. Instead, the goal is to search for and merge relevant context from the dataset into the prompt fed to the model at generation time.

RAGs use vector embeddings to give a model more context; for example what documentation pages are relevant references in response to a user’s prompt in a chatbot session. This is significantly easier to engineer than building your own fine-tuning workflows, which is far easier than pre-training a fully custom LLM.

In terms of the above illustration, the RAG approach would look like this:

- RAG with a generic LLM - Insert your dataset in a (vector) database, possibly updating it in real time. At the query time, augment the prompt with additional relevant context from the database, which exposes the model to a much larger amount of relevant data, hopefully nudging the model to give a much more relevant response.

- RAG with an instruction-tuned LLM - Instead of using a generic LLM as in the previous case, you can combine RAG with your custom fine-tuned model for improved relevancy.

Compared to training the model, the relative engineering ease of RAG makes it an approachable option for reasonable scale organizations with small, high-quality datasets. This approach can help you quickly make leaps in reducing out-of-the-box LLM problems like hallucinations. Moreover, they work in tandem with prompt engineering and can be paired with fine-tuning strategies to improve the relevance of LLM generations.

Vectors: the fundamental unit of generative AI

RAGs extend prompt engineering by retrieving information relevant to a user’s prompt and augmenting the prompt that an LLM generates text to complete. The data being indexed, searched through, and retrieved as context is a collection of vectors, each representing a source object like a chunk of text, audio, or an image.

With an embedding function that maps objects into vector space, we can embed many source objects into the same vector space and use similarity search algorithms to compare the source objects in a quantitative way.

To do this in practice, we need to go through three steps:

- We need to chunk our unstructured data to parts that can be embedded as vectors, typically using (another) LLM. We want to store the vectors in a way that allows fast querying - vector DBs (see below) being a good option.

- When we receive a prompt, we need to embed the prompt in the same vector space for querying.

- Finally we find close matches to the prompt-vector in our vector space (quickly).

This diagram summarizes the process:

Vector database - new infrastructure on the block

The above vector-based approach for information retrieval has been around since the 1960s. Due to the recent wave of generative AI and LLMs, the old concept has been packaged into easy-to-use and highly optimized products, spawning a category called vector databases.

For a deep dive into the motivations behind a new kind of database, you can read this article from Pinecone, a vector DB vendor. There are many open-source vectorDB providers you can try, and vector libraries like Faiss that expose lower-level functionality and optimization opportunities. In a future post, we will dive deeper into the lower levels of vector DBs, to make sense of benchmarks, and to show when to use a vector DB (and when not).

RAG in practice

To make these ideas concrete, consider creating an application that prompts an off-the-shelf model like GPT-4, or an open-source model like Llama 2 behind an API. Our goal is to control hallucinations and provide relevant information to the LLM.

RAG is used widely to solve this challenge:

- Perplexity.ai uses advanced search engines to give GPT-4 models context to guide a Q&A interface.

- The Bing AI chat interface is similar in that it provides references to the information its generated response is conditioned on.

- This talk from Vipul Gagrani at CourseHero goes into detail about how they use RAGs to address hallucinations in their edtech platform.

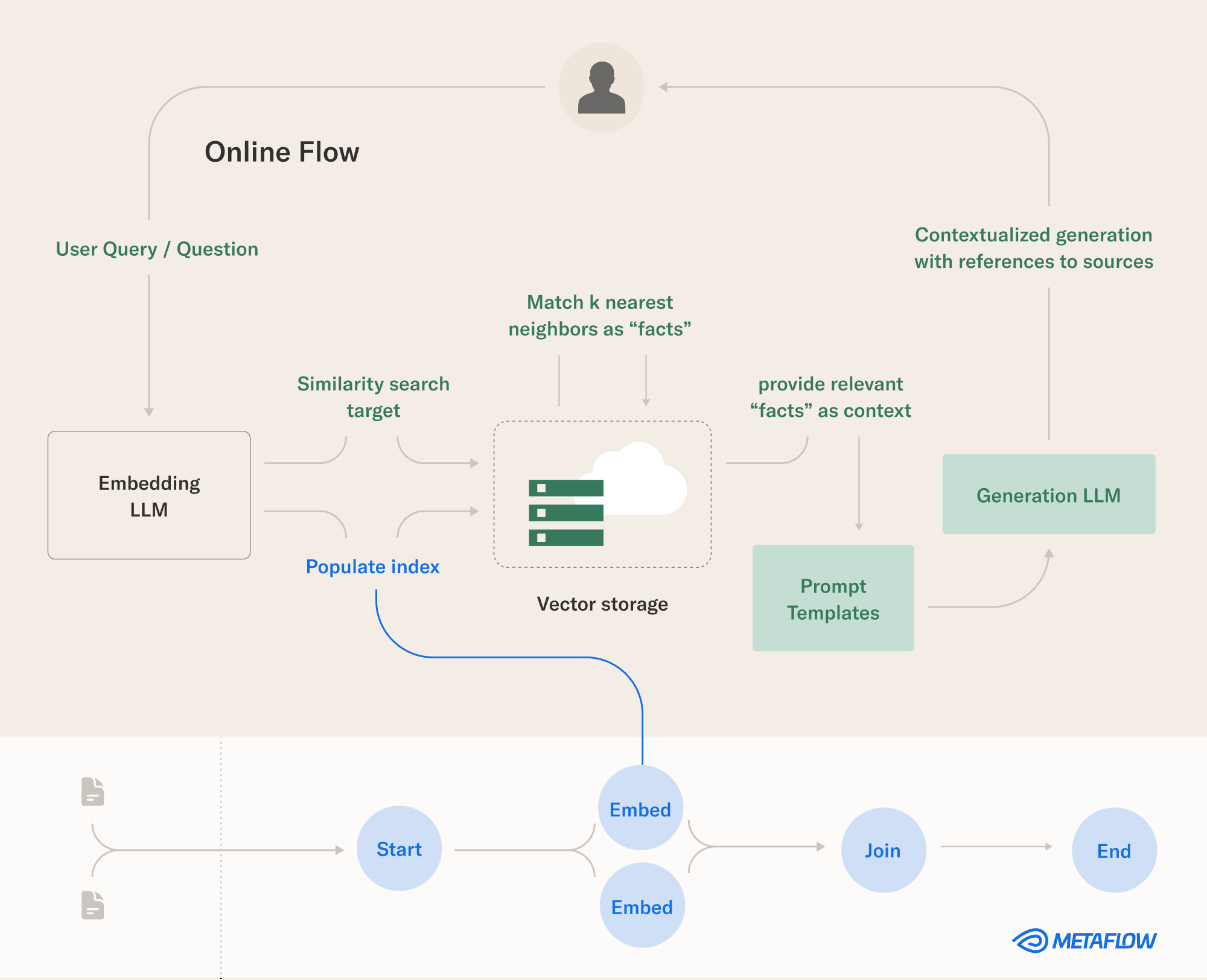

The following diagram illustrates all the components involved in RAG, from a prompt (user query) to the final response:

The lower part of the diagram depicts a crucial part of this process. We need to be able to create embeddings at scale, which requires batch-inferencing with an LLM, as well as the ability to embed any new data regularly. A data- and compute-intensive workflow like this, which needs to react to updating data, is a perfect use case for the open-source framework Metaflow.

Hands-on example: Using RAG to query Metaflow documentation

Building a RAG-based application is surprisingly easy! The rest of this article shows how - follow along with the code examples in this repository.

We structure the application as two Metaflow workflows. We benefit from a number of Metaflow features:

- We can structure the application cleanly as pure-Python workflows which we can easily deploy to production.

- Modularize the app as two separate parts which are connected them through event triggering.

- Keep data up to date using

@schedule. - Access GPU compute at scale through

@resources.

Data Workflow 1: The Markdown Chunker

The first flow processes Markdown files and writes a dataframe for each header, with the corresponding section contents, metadata, and URL. The workflow run looks through code repositories where we store the Markdown files that become web pages at https://outerbounds.com and https://docs.metaflow.org.

This workflow should be reusable for indexing most Markdown files. Get started in this notebook if you want to learn how to add a new repository. If the repositories you wish to index use a different file format, you will need to write your own parsing logic.

Data Workflow 2: The Table Processor

The first workflow completing triggers a second feature engineering workflow automatically.

@trigger_on_finish(flow='MarkdownChunker')

class DataTableProcessor(FlowSpec):The output dataframe from the first flow is filtered and used to engineer new features that can be used as input to a machine learning model.

parent_flow = Flow(self.parent_flow)

run = parent_flow.latest_run

unprocessed_df = run.data.df

...

# apply filters

# build new features

# store cleaned df for downstream useHere you can see summary statistics of the result of this workflow, visualized as a Metaflow card:

Testing the setup with LlamaIndex

We can use a notebook to quickly eyeball the data.

In this notebook, you can see an example of using outputs of the workflows in a RAG demo. We use an open-source tool called LlamaIndex to build the core components of a Bing Chat-like LLM-powered search experience in a notebook.

When fed an index of data gathered and processed in the above workflows:

- Hallucinations are being controlled as the model tends not to answer when it isn’t confident. This isn’t a complete solution, but can be paired with other post-processing techniques to greatly improve over the default LLM mode.

- Information is fresh since the workflows collectively scrape and clean the latest source of web pages we trust as factual.

- The model shows references by formatting citations that were injected into its prompt based on the relevant context found in the vector store.

The notebook can be used to iteratively debug a matching Streamlit application that serves as this repository's prototyping environment.

There are many ways that this notebook could be improved. For example, you might consider the following LlamaIndex extensions:

- Test more vector storage options

- Tune the query engine response mode

- Consider structured outputs

LlamaIndex provides convenient functionality like above but in an actual production application, you may want to remove unnecessary levels of abstraction.

More production-ready RAG workflow

When you are ready to move from a notebook to a more serious production setting, you need to choose how to implement the three core components:

- the embedding model,

- the vector DB,

- the LLM.

In our example, we use the sentence-transformers package as the embedding model. We will pick an off-the-shelf model available in the package and compute vectors with it. Alternatively, OpenAI has embedding APIs which you can use as well.

For the rest of the pipeline, we have a plethora of options to choose from. In the codebase, we demonstrate two different approaches:

- An open-source approach uses LanceDB as a vector DB and Llama2 as the LLM.

- A closed-source approach uses Pinecone as a vector DB and ChatGPT as the LLM.

If you want test your own solutions, take a look at these test scripts, test_simple_embedding.py and test_simple_query.py which demonstrate how the libraries work together.

Examples

Let's see how our RAG example works in practice.

First, as a baseline, let's use vanilla ChatGPT to ask a question about Metaflow:

The answer is nonsense. This is expected, as ChatGPT has limited knowledge of Metaflow. See the correct answer in the official documentation for @conda.

What's worse, ChatGPT shows confidence in this suggestion, as there is no “I don’t know about this” reply. A new user could waste a lot of time trying this code while learning incorrect habits and patterns.

A better response can be given by our RAG pipeline. The RAG steps provide ChatGPT what it needs to generate useful results grounded in factual content about Metaflow. In this case, we prompt ChatGPT with a bunch of documents retrieved from our vector DB containing all of the Metaflow documentation:

Question: How do I specify conda dependencies in my flow in Metaflow?

Answer: To specify conda dependencies in your flow, you can use the @conda_base decorator at the flow level and the @conda decorator at the step level.

At the flow level, you can use the @conda_base decorator to specify explicit library dependencies and the Python version. For example:

@conda_base(libraries={'numpy':'1.15.4'}, python='3.6.5')

class LinearFlow(FlowSpec):

...In effect, ChatGPT is used to summarize the answer found in the documentation.

Avoiding stale data

Consider this example where ChatGPT doesn't have the latest data about newer features of Metaflow, so its answer is evasive:

Our RAG approach provides the model with the latest relevant data, so it is able to give a much more relevant response:

Question: How can I use the trigger decorator in Metaflow?

Answer: The @trigger decorator in Metaflow is used to trigger a flow based on an external event. It is specifically used with flows deployed on Argo Workflows and allows you to specify the event(s) that the flow depends on. You can trigger a flow based on a single event or multiple events. Additionally, you can map the event payload to Metaflow parameters for the flow. The @trigger decorator takes parameters such as event, events, and options for configuring event triggering.

The model uses the context that appears in the documentation to give us a correct answer.

Try it at home

You can easily apply the above RAG example to your own content. Give it a try!

The best part is that you can do all this securely in your own cloud account without leaking any data or processing to 3rd parties, which makes it possible to use this approach even in tightly regulated and security-sensitive environments. You can deploy Metaflow securely in your own cloud account, use it to fine-tune your own open-source LLMs, and run RAG with open-source vector DBs. If you are short on engineering resources, you can do all this on the managed Outerbounds Platform.

If you need help with the ideas presented in this article, have questions, or you just want to meet thousands of other likeminded engineers and data scientists, join the Metaflow community Slack!

Start building today

Join our office hours for a live demo! Whether you're curious about Outerbounds or have specific questions - nothing is off limits.