The Next Leap in Building Standout AI

Today, we’re releasing a major upgrade to Outerbounds, including real-time inference, agentic deployments, first-class asset and model tracking, and powerful new APIs and UIs for custom evaluations. Built on the robust Outerbounds platform - securely running in your cloud - these features form a cohesive toolchain to help you rapidly build and iterate on standout, production-grade agents and AI systems.

Through the adoption of Outerbounds, we've had an inside look at a wide range of behind-the-scenes AI projects. To give you a sense of the variety, consider these examples:

- Improving traditional fraud detection models that operate on structured data by incorporating new data sources processed through LLMs.

- Accelerating game development with AI that can generate meshes for avatars and environments, as well as agents that recommend gameplay optimizations.

- Using DeepSeek-style post-training of custom LLMs to analyze proprietary code bases.

- Fine-tuning large language models to outperform frontier models in a specific domain and distilling them into smaller models for inexpensive, large-scale inference.

- Coordinating multiple AI agents that evaluate candidates from different angles within a recruitment platform.

If you are curious for more details, you can take a look at how Netflix tackles large-scale, human-in-the-loop synopsis generation, or how MasterClass fine-tunes models using their unique data assets to power a new AI coaching service.

From wrappers to differentiated AI

A striking aspect of today’s AI stack is that you can hack together a flashy demo for any of the above use cases in a weekend using off-the-shelf APIs. Many companies have learned that the gap between a demo and a robust, business-critical system can be surprisingly wide. Similarly, investors have learned that a prototype created in a weekend can be cloned by ten other startups just as quickly.

As the novelty of AI wears off, there's a growing focus on building AI products that are functional and sustainable. This matters just as much for startups that need to stand out from the competition as it does for established companies looking to use AI in serious, business-critical domains.

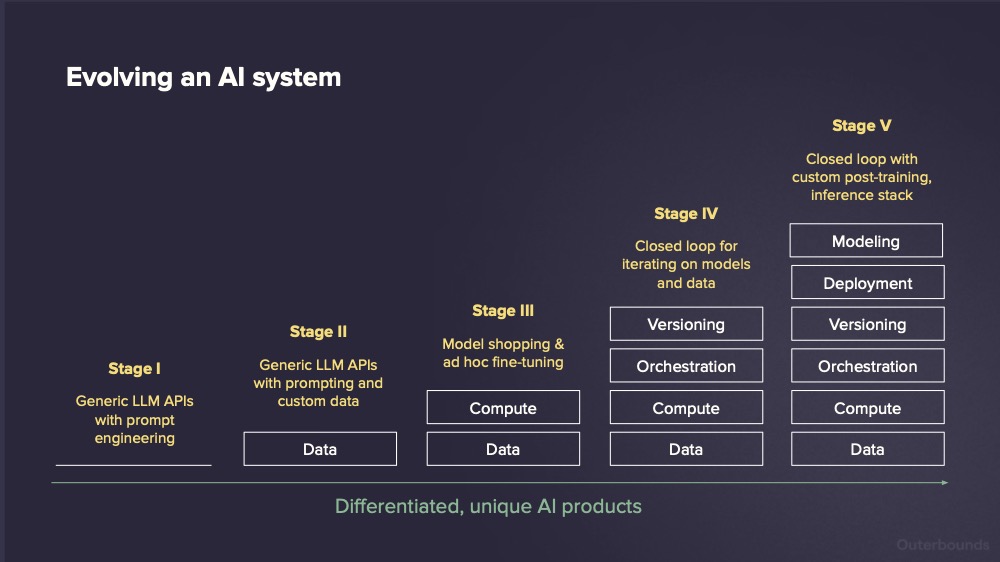

Our mission at Outerbounds is to help companies graduate from AI wrappers and demos to differentiated, sustainable, and delightful products that are powered by AI under the hood. We have talked about this topic frequently, summarizing the typical graduation path in the following graphic:

- Everyone starts at Stage I: You create a quick demo or a POC with off-the-shelf APIs with a few rounds of ad-hoc prompt engineering. Eventually it becomes evident that the system needs more domain-specific data to produce more accurate, domain-specific, and timely results.

- In Stage II, you introduce RAG or another mechanism to incorporate domain-specific data into the system. The more high-quality data the system can access, the better the results. This typically gets you to a local maximum in performance, which may or may not be sufficient for production use. Considerations like cost, data privacy, and long-term stability may prompt you to explore further refinements.

- In Stage III, you begin exploring more advanced models to address the limitations. While it's easy to experiment with various off-the-shelf APIs, the focus may shift toward hosting models internally or fine-tuning open-weight models to address the needs of your specific domain.

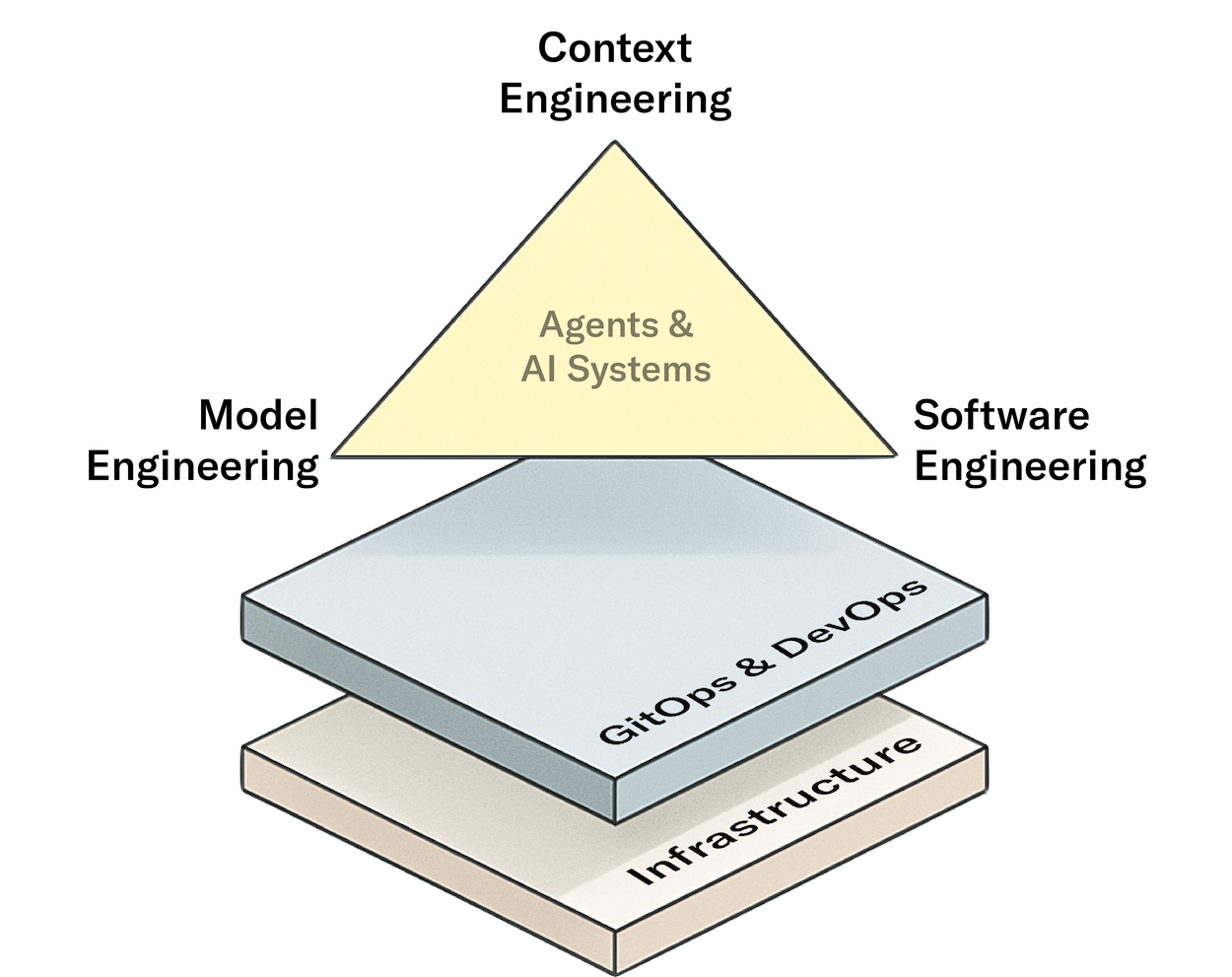

This marks a key inflection point: Stage I may have been a weekend project, but Stage II introduced elements of a proper software system with agents, vector/state databases, and data pipelines. A new term, Context Engineering, captures this well: LLMs - agents in particular - are data-hungry systems that rely on being fed with the right context in the right format at the right time.
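To make the idea of context engineering concrete, here is a minimal sketch in plain Python. It is not an Outerbounds API: the function names, the bag-of-words similarity, and the sample documents are all assumptions chosen for illustration. A real Stage II system would typically use an embedding model and a vector database instead of word overlap.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_context(question, documents, k=2, max_chars=500):
    """Pick the k most similar documents and pack them into a prompt.

    max_chars is a crude stand-in for a model's context budget.
    """
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    selected, used = [], 0
    for doc in ranked[:k]:
        if used + len(doc) > max_chars:
            break
        selected.append(doc)
        used += len(doc)
    body = "\n".join(f"- {d}" for d in selected)
    return f"Context:\n{body}\n\nQuestion: {question}"


# Hypothetical domain documents, made up for this example.
DOCS = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refunds require an order number.",
]
prompt = build_context("When are refunds processed?", DOCS)
print(prompt)
```

Even in this toy form, the three concerns from the paragraph above are visible: which data is selected (ranking), how it is formatted (the prompt template), and how much of it fits (the budget).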

Then Stage III adds even more moving parts - potentially including the need for GPUs for fine-tuning and in-house inference. Building a system like this can be a fun challenge for an engineering team. However, the real test - just like with any sustainable, long-term software system - is designing the entire stack, including workflow and agent orchestration, data pipelines, and models, so that every component can be iterated on, deployed securely, and operated with confidence.

Differentiated AI in production, continuously improving

This brings us to Stage IV - the foundation for systematically perfecting a differentiated AI product through iterative development across your code, data, and models.

At this stage, software engineering best practices become essential: GitOps, CI/CD, and first-class branching for all system components, combined with structured data collection for evaluation and observability. Operationally, the goal is to ensure the entire stack runs in a secure, scalable, and cost-efficient infrastructure.

The defining feature of Stage IV is the ability to systematically operate and evolve your agents and AI systems in a reliable production environment - transitioning from ad hoc, shallow experimentation to deeper, controlled iterations that deliver increasingly accurate, domain-specific results. In an era of commoditized AI, this is how you create lasting advantage.

Craft standout AI on the new Outerbounds

Developing a production-ready stack for Stage IV is no simple feat, especially when your focus should be on building the agents and AI systems that run on top of it.

For the past few years, we have been developing a complete platform for building differentiated AI systems in tight collaboration with our customers that run their business-critical AI systems on Outerbounds. We have also worked with top-tier infrastructure providers like Nebius and NVIDIA, integrating their technologies seamlessly in the platform.

Today, we’re excited to release a set of core new features that help you deliver differentiated AI faster - built on the performant, secure foundation of Outerbounds. Take a look at this highlight reel for a quick teaser:

Let’s walk through the new features:

New: Deploy models and agents in your cloud with cost-efficient GPUs

One of the most requested features in Outerbounds is now here: First-class support for model inference and agent deployments, securely running in your cloud account.

As before, we continue to support proprietary models from providers like OpenAI and Anthropic, along with models served via inference platforms such as AWS Bedrock or Together.ai. The new deployment capabilities on Outerbounds are designed for use cases that go beyond what off-the-shelf solutions can handle easily, including:

- Large-scale batch inference where external APIs may become cost-prohibitive or unable to provide the required throughput.

- Privacy-sensitive deployments that require keeping both models and data entirely within your own cloud environment, such as cases requiring HIPAA compliance.

- Cost-effective hosting of fine-tuned models, which often carries a significant markup on external services.

- Deployments with policy constraints including robust authentication, security perimeters, geo-constraints, and a managed software supply chain.

- Built-in support for tracing and evaluations, allowing you to compare and benchmark models easily using domain-specific evaluations.

Deployments on Outerbounds are deeply integrated with the rest of the stack, enabling rapid iteration on custom endpoints with first-class support for CI/CD and GitOps. This is especially valuable for agentic workloads, where fast iteration across code, tools, and prompts is essential.

A key benefit of deploying models and agents on Outerbounds is straightforward and secure access to cost-effective GPUs required to host the models. When deploying on Outerbounds, besides your home cloud at AWS, Azure, or GCP, you can leverage large pools of top-tier GPUs provided by Nebius and CoreWeave, as well as various other vendors through NVIDIA’s new DGX Cloud Lepton marketplace. Naturally, all without per-token pricing or extra markup on the compute cost.
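The trade-off between per-token pricing and paying for GPU time directly comes down to simple arithmetic. The sketch below is a back-of-the-envelope model, not a pricing tool: the function names are hypothetical, and the throughput and price figures in the example are placeholders chosen to make the math easy to follow, not real prices or benchmarks.

```python
def api_cost(tokens, usd_per_million_tokens):
    """Cost of processing `tokens` through a per-token priced API."""
    return tokens / 1_000_000 * usd_per_million_tokens


def gpu_cost(tokens, tokens_per_second, usd_per_gpu_hour, utilization=1.0):
    """Cost of processing `tokens` on a GPU billed by the hour.

    `utilization` (0 < utilization <= 1) discounts throughput for idle time.
    """
    hours = tokens / (tokens_per_second * utilization) / 3600
    return hours * usd_per_gpu_hour


def break_even_price(tokens_per_second, usd_per_gpu_hour, utilization=1.0):
    """Per-million-token API price at which both options cost the same."""
    tokens_per_hour = tokens_per_second * utilization * 3600
    return usd_per_gpu_hour / tokens_per_hour * 1_000_000


# Placeholder inputs for illustration only.
price = break_even_price(tokens_per_second=1000, usd_per_gpu_hour=3.6)
print(f"Break-even API price: ${price:.2f} per million tokens")
```

If an external API charges more per million tokens than the break-even price for your measured throughput and utilization, self-hosting is cheaper on compute alone. The model ignores engineering time, startup latency, and autoscaling behavior, all of which matter in practice.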

New: Complete GitOps and asset tracking for code, data, and models

A key feature of Stage IV is support for development workflows that let teams confidently experiment and iterate on the entire system, agents and AI workflows included. While most developers already use Git for code versioning and have some form of CI/CD in place, the same level of discipline rarely extends to data pipelines, prompts, and model variants, which often remain fragmented and ad hoc.

Agentic systems, in particular, are tightly coupled - relying on a delicate interplay between data, models, and code. Ensuring reliability and fast iteration requires consistent management of all these components, now supported natively in Outerbounds. Think of the streamlined experience of a modern web development platform like Vercel or Netlify, now brought to the domain of production-grade AI.

Outerbounds lets you register data and model assets as continuously evolving entities, with full auditability of their producers and consumers. Testing a new model is as simple as opening a pull request. You can, for example, build a RAG system that updates automatically with new data, potentially ingesting data securely from your enterprise data warehouse or lake - enabling continuous improvement over time, all within the platform.

New: Iterate confidently with custom evaluations and tracking

Fast development cycles without robust evaluation are just Brownian motion - you’re moving, but not necessarily progressing. To make meaningful advances, you need to continuously monitor performance and accuracy across all branches.

A key challenge is that there’s no universal metric for measuring accuracy or progress - AI system evaluation is often highly domain-specific. As Hamel Husain, an expert in AI evaluations, puts it:

"Don’t rely on generic evaluation frameworks to measure the quality of your AI. Instead, create an evaluation system specific to your problem."

This statement aligns with our experience from hundreds of real-world AI use cases. To enable easy domain-specific evaluations, such as the one illustrated in the video above, we provide three building blocks:

- Consistent tracing and logging of agent behavior and model inputs and outputs, scoped to each project and branch - with easy access for both programmatic use and ad-hoc analysis.

- Robust workflows to analyze the data continuously, thanks to all the functionality in open-source Metaflow, which we support natively.

- Straightforward APIs for building custom reports and dashboards, which work particularly well with code co-pilots, allowing you to build highly domain-specific views in minutes, taking system observability to the next level.
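As a rough illustration of how these building blocks fit together, the sketch below scores traced outputs with a custom metric and aggregates the results per branch. It uses only the Python standard library and does not show the Outerbounds or Metaflow APIs: `Trace`, `contains_expected`, and `score_by_branch` are hypothetical names, and the trace records are made up for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trace:
    """One logged model call, scoped to a branch (hypothetical schema)."""
    branch: str
    prompt: str
    output: str
    expected: str


def contains_expected(trace):
    """Toy domain metric: 1.0 if the expected answer appears in the output."""
    return 1.0 if trace.expected.lower() in trace.output.lower() else 0.0


def score_by_branch(traces, metric):
    """Average a metric over all traces, grouped by branch."""
    scores = defaultdict(list)
    for trace in traces:
        scores[trace.branch].append(metric(trace))
    return {branch: sum(vals) / len(vals) for branch, vals in scores.items()}


# Made-up traces comparing a baseline branch with a candidate branch.
traces = [
    Trace("main", "Capital of France?", "It is Lyon.", "Paris"),
    Trace("main", "Capital of Italy?", "Rome is the capital.", "Rome"),
    Trace("candidate", "Capital of France?", "Paris.", "Paris"),
    Trace("candidate", "Capital of Italy?", "Rome.", "Rome"),
]
results = score_by_branch(traces, contains_expected)
print(results)
```

The metric is passed in as a function, so replacing the substring check with a domain-specific scorer does not change the aggregation logic.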

New: Craft domain-specific models with expanded toolchain for post-training

Last but not least, you may be wondering about Stage V in the chart at the top of this article. While most vertical AI applications don’t require custom models with elaborate post-training today, rising competitive pressure may push some companies to pursue DeepSeek-level sophistication in the coming years.

We’re building Outerbounds to support you no matter how ambitious your goals are. That includes making it easy to fine-tune even the largest open-weight models using frameworks like Ray without getting bogged down in infrastructure. Built-in checkpointing ensures your progress is always saved, even across long or distributed training runs.
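For readers unfamiliar with the pattern, the following sketch shows the general idea behind checkpoint-and-resume. It is a generic illustration in plain Python, not the platform's built-in mechanism: the `train` function, the JSON checkpoint format, and the simulated crash are all assumptions made for the example.

```python
import json
import os
import tempfile


def train(total_steps, ckpt_path, fail_at=None):
    """Run a toy training loop that resumes from ckpt_path if it exists."""
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)  # resume from the last saved step
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated crash")
        state["step"] += 1
        state["loss"] *= 0.9  # stand-in for a real optimizer update
        # Write to a temporary file and rename it, so a crash mid-write
        # never leaves a partial checkpoint behind.
        tmp_path = ckpt_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, ckpt_path)
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(10, ckpt, fail_at=4)  # first attempt dies after step 4
except RuntimeError:
    pass
final = train(10, ckpt)  # second attempt picks up at step 4
print(final["step"])
```

The second call does not repeat the first four steps, which is what makes long or distributed runs tolerant of interruptions.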

If you are curious to learn more about how to use advanced post-training on Outerbounds, including reinforcement learning techniques such as GRPO, take a look at this recent webinar:

Level up your AI today

Outerbounds supports you wherever you are on your AI journey.

- At Stage I, the platform helps you set up a foundation for context engineering and for building agentic and RAG use cases in a systematic, production-grade way - securely running in your own cloud account.

- If you're at Stage II with agents and RAG pipelines already running, but struggling to iterate quickly, introducing top-notch workflows, deployments, and GitOps can help elevate your system to the next level.

- If you're at Stage III - exploring open-weight models and maybe experimenting with fine-tuning - you'll benefit from treating models as first-class assets, deploying them cost-effectively on top-tier GPUs, and wrapping them in domain-specific evaluations to drive accuracy.

- If you’re already at Stage IV, Outerbounds offers support for large-scale deployments on the deepest and most cost-efficient GPU pools available, securely connected to your cloud account or on-prem resources - alongside advanced post-training capabilities and enterprise-grade governance.

To get started, schedule a call to unlock a 14-day free trial.

Start building today

Join our office hours for a live demo! Whether you're curious about Outerbounds or have specific questions - nothing is off limits.