
Agentic Metaflow

There’s no shortage of hype around agents. If you’ve read too many hype-filled articles already, don’t worry - this one won’t add more hot air. More interestingly, the just-released Metaflow 2.18 comes with a major new feature: long-awaited support for conditional and recursive steps, which come in handy when building agentic applications.

Before diving into the details and a fun, illustrative example (with complete, try-it-at-home source code), let’s start with a quick overview of how agentic systems are built - and how Metaflow fits into the picture.

Building agentic systems with Metaflow

When talking about agents, the discussion tends to focus on agent frameworks, of which there are plenty - LangChain, AutoGen by Microsoft, Strands by AWS, Agent Development Kit by Google, and the OpenAI Agents SDK, among others. The primary function of these frameworks is to orchestrate the agent loop: accepting prompts, invoking LLMs, interpreting their outputs, and executing external tools whose results are fed back into the model. On top of this core loop, frameworks add guardrails, tracing, and support for evaluations.
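To make the core loop concrete, here is a minimal, framework-agnostic sketch in plain Python. It is not the API of any framework named above: fake_llm is a hypothetical stand-in for a model call that either requests a tool or returns a final answer, and TOOLS is a hypothetical tool registry.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> Python callable.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
}


def fake_llm(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call.

    Requests the word_count tool once, then answers using the tool result.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "word_count", "args": messages[0]["content"]}
    return {"answer": f"The prompt has {tool_results[-1]['content']} words."}


def run_agent(prompt: str, max_turns: int = 5) -> str:
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = fake_llm(messages)           # 1. invoke the model
        if "answer" in reply:                # 2. interpret its output
            return reply["answer"]
        tool = TOOLS[reply["tool"]]          # 3. execute the requested tool
        result = tool(reply["args"])
        messages.append({"role": "tool", "content": result})  # 4. feed the result back
    raise RuntimeError("agent did not finish within max_turns")


print(run_agent("how many words are in this prompt"))  # The prompt has 7 words.
```

Real frameworks wrap this same cycle with structured tool schemas, guardrails, and tracing; the max_turns cap is what guarantees the loop terminates.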

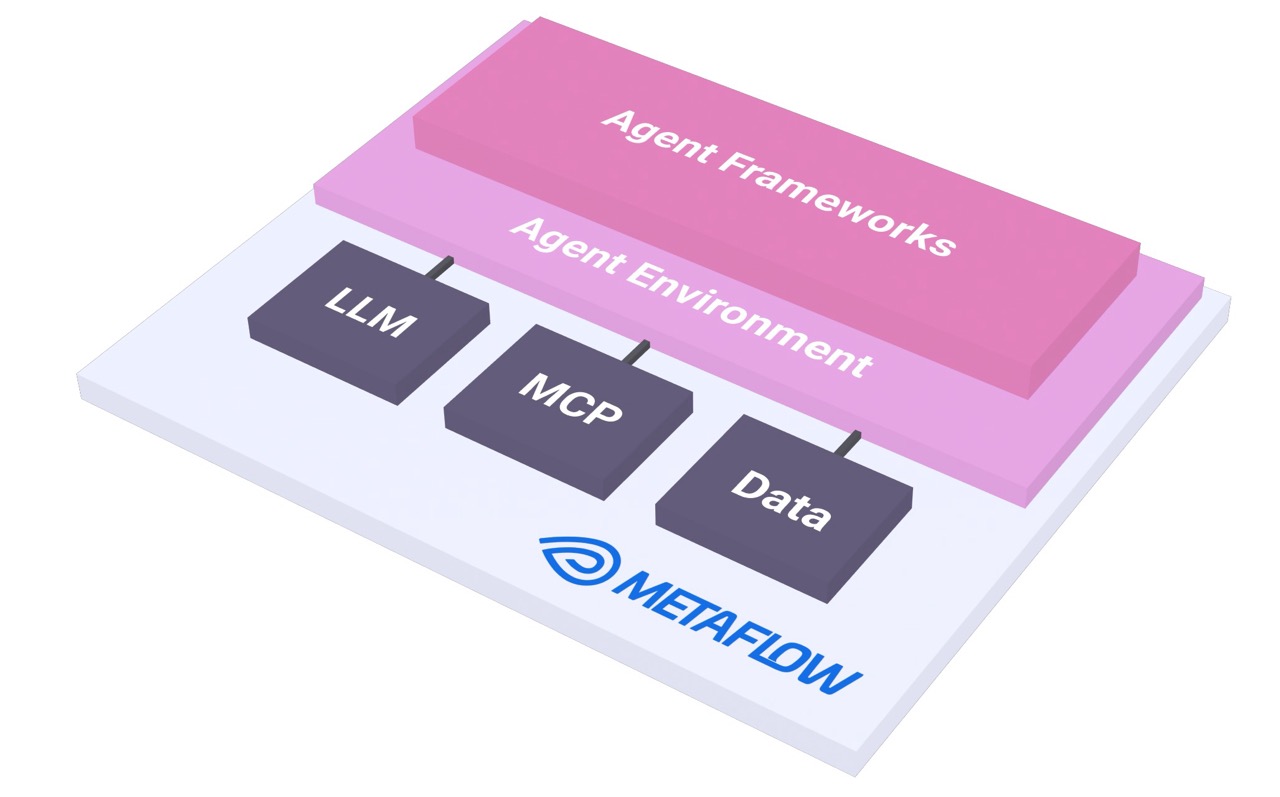

A key insight is that while an agent framework may be necessary, it is not sufficient for building a complete agentic system. This diagram illustrates the bigger picture:

Every agent framework needs to be deployed and executed in an agent environment. In the simplest case, execution could happen on your laptop, but serious systems are more likely to run in a cloud environment such as Kubernetes - Google’s ADK deployment options illustrate the idea. Notably, many agentic applications rely on entire auto-scaling fleets of agents, which adds further requirements.

Besides an execution environment, agents require mechanisms for state and memory management. As with any deployed container, agents may crash or be interrupted. This is especially important for agents that perform long-running, compute-intensive tasks. Durable state enables them to recover from failures and continue execution from the last checkpoint.
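Here is a minimal sketch of checkpoint-and-resume, assuming agent state fits in a JSON-serializable dict and a local file stands in for durable storage (Metaflow uses artifacts in object storage instead). The save_checkpoint, load_checkpoint, and run helpers are hypothetical. Writing to a temporary file and swapping it in with os.replace (atomic on POSIX when both paths are on the same filesystem) means a crash mid-write never leaves a half-written checkpoint behind.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    # Write to a temp file in the same directory, then atomically swap it in,
    # so readers see either the old checkpoint or the new one, never a partial file.
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    # Start fresh if no checkpoint exists yet.
    if not os.path.exists(path):
        return {"step": 0, "notes": []}
    with open(path) as f:
        return json.load(f)


def run(path: str, total_steps: int, crash_at: Optional[int] = None) -> dict:
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if state["step"] == crash_at:
            raise RuntimeError(f"simulated crash at step {crash_at}")
        state["notes"].append(f"finding {state['step']}")  # the "work" of one step
        state["step"] += 1
        save_checkpoint(path, state)  # persist after every completed step
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "agent_state.json")
try:
    run(ckpt, total_steps=5, crash_at=3)
except RuntimeError:
    pass
final = run(ckpt, total_steps=5)  # resumes from step 3 instead of starting over
print(final["step"], len(final["notes"]))  # 5 5
```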

Agents interact with the outside world by nature. To do so, they require access to LLMs, frequently more than one, so that they can route each request to the most suitable model. For instance, Claude Code readily uses smaller models for routine tasks where a large frontier model would be overkill. To reduce the impact of rate limits and costs, and to support domain-specific fine-tuning, agents may leverage open-weight models alongside proprietary ones.
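One simple way to picture routing is a function that picks the cheapest model capable of a task. The sketch below is purely illustrative: the model names, capability tiers, and relative costs are hypothetical placeholders, not real price lists or the routing logic of Claude Code or any other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str             # hypothetical model identifier
    capability: int       # rough skill tier: higher handles harder tasks
    relative_cost: float  # hypothetical cost per request, relative units


# Hypothetical catalog mixing a small open-weight model with larger proprietary ones.
CATALOG = [
    Model("small-open-weight", capability=1, relative_cost=1.0),
    Model("mid-proprietary", capability=2, relative_cost=5.0),
    Model("frontier-proprietary", capability=3, relative_cost=25.0),
]


def route(required_capability: int, catalog: list[Model] = CATALOG) -> Model:
    """Return the cheapest model whose capability meets the requirement."""
    eligible = [m for m in catalog if m.capability >= required_capability]
    if not eligible:
        raise ValueError(f"no model can handle capability {required_capability}")
    return min(eligible, key=lambda m: m.relative_cost)


print(route(1).name)  # small-open-weight
print(route(3).name)  # frontier-proprietary
```

In practice the hard part is estimating required_capability per request; a fixed tier per task type is the crudest option.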

Also, a defining characteristic of agents is tool use: they can invoke APIs, query databases, and interact with microservices through the Model Context Protocol (MCP). This, in turn, adds a layer of complexity, as agents must not compromise the stability and security of external APIs and databases. And crucially, agents cannot produce reliable, non-hallucinated results without access to accurate, domain-specific data.

Enter Metaflow

To summarize, a production-ready agentic system requires ways to

- Deploy agents in an auto-scaling cloud environment.

- Store agent state and recover from failures.

- Provide access to LLMs, including custom, domain-specific ones.

- Provide a fleet of stable and secure services, exposed via MCP.

- Expose agents to accurate data that’s often continuously updating.

As illustrated above, Metaflow serves as the baseplate for agentic systems, helping you address all of the above requirements. It does not replace or compete with agent frameworks, but it complements them by delivering foundational services - data, compute, orchestration, and versioning - which are needed to complete the system around the core agent loop.

Metaflow shines when powering autonomous agents, that is, scenarios where fleets of agents operate without continuous human oversight. Beyond chatbots or support assistants, think of agents built for deep research tasks, such as analyzing a decade of S-1 filings or detecting fraud across financial transactions. Such workloads often involve large numbers of long-running agents, massive datasets, and significant compute requirements - precisely the challenges Metaflow is designed to address.

New in Metaflow: Recursive and conditional steps

Effective state management is a critical requirement for long-running agents. Metaflow addresses this with efficient snapshotting of workflow state through artifacts. At Netflix alone, Metaflow manages more than 12PB of artifacts with no limits in sight, making it hard to imagine a more proven foundation for agent state management.

Unlike typical ML and data DAGs, agents are recursive by nature: Agent frameworks keep running them in a loop until some stopping condition is hit. To make artifact-based state management work for this pattern, we added support for recursive steps in Metaflow, now available in 2.18. And since loops need an exit, the new release also comes with conditional step transitions. Besides agentic use cases, recursion and conditionals have been long-awaited features which have many uses in traditional workflows as well.

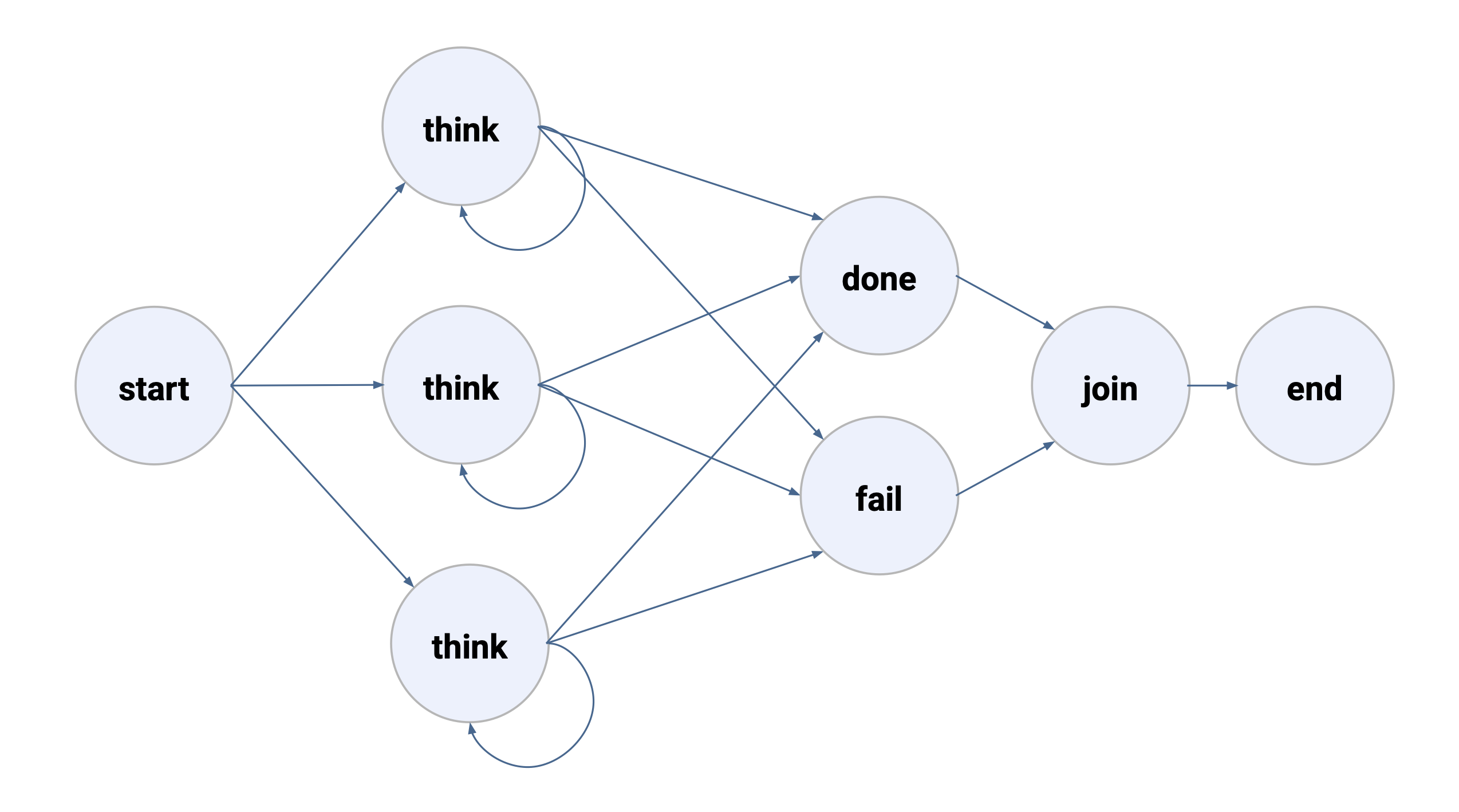

With Metaflow 2.18, you are now able to construct flows like this:

Here, within a foreach, the think step repeatedly calls itself until a stopping condition is met. Once that condition is satisfied, the flow transitions either to a done step or a fail step, wrapping up the processing. Naturally, this is just an example flow: you can use conditionals and recursion almost anywhere in your flows, and nest them freely.
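To make this control flow concrete, here is a pure-Python simulation of the graph just described. It is not how Metaflow schedules steps internally. Each foreach item runs a think step that either loops back to itself or switches to done or fail, based on the value of a decision variable, and TRANSITIONS plays the role of the dictionary passed to self.next. The think logic, the five-iteration budget, and the simulate_branch helper are hypothetical.

```python
def think(state: dict) -> None:
    # Hypothetical "thinking": accumulate progress on each visit.
    state["iterations"] += 1
    state["progress"] += state["speed"]
    if state["progress"] >= 10:
        state["decision"] = "done"
    elif state["iterations"] >= 5:
        state["decision"] = "fail"      # give up after a fixed budget
    else:
        state["decision"] = "continue"  # recurse into think again


# Switch table in the spirit of self.next({...}, condition="decision").
TRANSITIONS = {"continue": "think", "done": "done", "fail": "fail"}


def simulate_branch(speed: int) -> tuple[str, int]:
    state = {"speed": speed, "progress": 0, "iterations": 0}
    step = "think"
    while step == "think":
        think(state)
        step = TRANSITIONS[state["decision"]]  # dispatch on the condition value
    return step, state["iterations"]


# One independent branch per foreach item.
for speed in [5, 3, 1]:
    print(speed, simulate_branch(speed))
# 5 ('done', 2)
# 3 ('done', 4)
# 1 ('fail', 5)
```

The iteration budget matters: without an exit condition that is guaranteed to fire, a recursive step can loop forever.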

While recursion and conditionals introduce additional elements of runtime dynamism in Metaflow, the intent is to keep flows understandable and predictable, rather than fully emergent at runtime. With agentic systems in particular, which are inherently stochastic, it is crucial that the system remains debuggable and maintainable. Quoting Claude Code as an example of a very successful agent, “Debuggability >>> complicated multi-agent mishmash”.

Switching @steps

As always in Metaflow, the syntax is straightforward: instead of specifying a single step transition in self.next, you specify a dictionary of options - essentially a switch statement - whose keys are the possible values of a condition artifact, named via the condition argument:

```python
from metaflow import retry, step

# A step inside your FlowSpec subclass; agent_framework is a placeholder
# for the agent framework of your choice.
@retry
@step
def agent_loop(self):
    # On the first iteration there is no stored state yet.
    previous_state = getattr(self, 'state', None)
    # Run the agent for a bounded stretch; the returned state becomes an artifact.
    self.result, self.state = agent_framework(state=previous_state)
    self.continue_thinking = self.result['continue_thinking']
    # Loop back into this step or move on, depending on the condition artifact.
    self.next({True: self.agent_loop, False: self.end},
              condition='continue_thinking')
```

This snippet shows a typical integration between an agent_framework - pick your favorite - and Metaflow. The framework runs the agent as usual, with its native session and memory management keeping the conversation state in a Python object. Periodically, the loop exits, for example after reaching a maximum number of turns or a time limit, and the session state is returned and stored as a Metaflow artifact. When the task resumes, the agent continues from the previously stored state, which Metaflow manages safely (and inexpensively!) in cloud-native object storage.
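The periodic-exit pattern can be mimicked outside Metaflow to see why it is resumable. In this sketch, agent_framework is a hypothetical stub that runs at most max_turns turns per call and returns a (result, state) pair, mirroring the snippet above. The outer while loop stands in for Metaflow re-entering agent_loop, and previous_state stands in for the self.state artifact. The turn counts and stopping rule are illustrative assumptions.

```python
from typing import Optional


def agent_framework(state: Optional[dict] = None, max_turns: int = 3,
                    turns_needed: int = 7) -> tuple[dict, dict]:
    """Hypothetical framework call that yields control after max_turns turns.

    Returns (result, state). The state carries the conversation so far,
    so the next call continues where this one stopped.
    """
    history = list(state["history"]) if state else []  # copy; don't mutate stored state
    for _ in range(max_turns):
        if len(history) >= turns_needed:
            break                                     # the task is finished
        history.append(f"turn {len(history) + 1}")    # one agent turn
    result = {"continue_thinking": len(history) < turns_needed}
    return result, {"history": history}


# The while loop plays the role of Metaflow re-entering agent_loop;
# previous_state plays the role of the self.state artifact.
previous_state = None
segments = 0
while True:
    result, previous_state = agent_framework(state=previous_state)
    segments += 1
    if not result["continue_thinking"]:
        break

print(segments, previous_state["history"][-1])  # 3 turn 7
```

Because each segment starts only from the stored state, a crash in segment three loses at most three turns of work, not seven.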

While this looks deceptively simple - by design - the power of this setup comes from the fact that you can enrich the agent_loop with all the functionality of Metaflow, including

- Managing and versioning prompts and other configuration with Config.

- Handling failures with @retry.

- Adding real-time observability with @card.

- Containerizing your agent code with Metaflow’s built-in dependency management.

- Executing any number of agents in parallel with Metaflow’s robust and scalable compute layer.

- Triggering agents in real-time with events.

- Provisioning ephemeral, sandboxed services and MCP servers on the compute layer, as exemplified below.

Crucially, Metaflow lets you develop and test everything locally, offering a smoother and faster development experience than most cloud-based deployment options.

Example: Autonomous deep research agents

Let’s walk through a complete and realistic system that demonstrates the power of autonomous agents running on Metaflow. Find the source code here.

We want to conduct deep research on a topic: in this case, compiling biographies of music artists. To ensure accuracy, we can’t just ask an LLM to spin up imaginative life stories. Instead, we equip an agent with the complete MusicBrainz database, which contains over 200M rows of facts about artists and releases. The agent uses the database to retrieve facts about releases and to validate the results of web searches, which it performs autonomously, in order to compile a timeline of life events for an artist.

Watch the screencast below to see the agent in action - the resulting timelines are rendered as @cards that are also authored by GPT-5:

As the agent framework, we use the OpenAI Agents SDK - see our Metaflow wrapper for it here - but any other framework would work equally well. A convenient detail about OpenAI’s SDK is that it automatically sends traces of agent behavior to the OpenAI dashboard, as shown in the video above, with links back to the corresponding agent task in the Metaflow UI.

The example leverages the new conditionals and recursive steps to let the agent decide if it wants to continue researching, if the work is done, or if the artist is unknown. If an agent fails repeatedly, we mark the work as failed:

```python
from metaflow import pypi, retry, secrets, step

@pypi(packages={'openai-agents': '0.2.9', 'pydantic': '2.11.7'})
@secrets(sources=["outerbounds.music-research-agent"])
@retry
@step
def research(self):
    ...
    # The elided agent logic sets self.decision to one of the keys below.
    self.next({
        "continue": self.research,
        "done": self.done,
        "unknown": self.unknown,
        "failed": self.failed,
    }, condition="decision")
```

The complete agent state and all results are stored and versioned as Metaflow artifacts, so they can be replayed and retrieved for later analysis. This also allows us to use Metaflow’s resume command to resume agent sessions from any point, saving money and time by letting agents remember and reuse past context.
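The snippet elides how decision is computed. One plausible approach, sketched here purely as an assumption, is to map the agent’s own verdict, a count of consecutive errors (assuming the step catches agent errors itself and tracks the counter as an artifact), and an iteration cap onto the four switch keys. The decide function and its max_errors and max_iterations parameters are hypothetical.

```python
def decide(verdict: str, consecutive_errors: int, iteration: int,
           max_errors: int = 3, max_iterations: int = 20) -> str:
    """Hypothetical mapping from one research round to a switch key."""
    if consecutive_errors >= max_errors:
        return "failed"    # the agent keeps failing: give up
    if verdict in ("done", "unknown"):
        return verdict     # the agent finished, or found no such artist
    if iteration >= max_iterations:
        return "failed"    # hard cap so the recursion always terminates
    return "continue"      # otherwise, research another round


print(decide("continue", consecutive_errors=0, iteration=1))   # continue
print(decide("unknown", consecutive_errors=0, iteration=2))    # unknown
print(decide("continue", consecutive_errors=3, iteration=5))   # failed
print(decide("continue", consecutive_errors=0, iteration=20))  # failed
```

Checking the error count first means a crashing agent is marked failed even if its last partial output claimed it was done.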

Remarkably, we can run any number of agents in parallel (rate limiting is handled automatically), orchestrated by Argo Workflows and @kubernetes in a highly available manner. As a result, we can conduct deep research for hundreds of artists in 15 minutes.

By using this pattern, potentially combined with custom model endpoints to work around rate limits and cost issues, you can build sophisticated autonomous agents that process large amounts of information as quickly as needed: just add compute power to get results faster.

Example: Ephemeral, sandboxed MCP services

There has been a lot of buzz about enabling agents to discover tools and access diverse data sources through MCP. The concept is certainly powerful, but with great power comes great responsibility. As any engineer or DevOps person managing distributed systems knows, letting chaos agents run unchecked against an API or database is a recipe for disaster.

As an illustrative example, the MusicBrainz database consists of over 200 million rows spread across a whopping 375 tables, intricately interconnected via foreign keys and constraints. Letting an agent run poorly optimized SQL queries against a production database like this is a Bad Idea. While this problem concerns scale and performance, enterprise environments also introduce other critical requirements around security and data governance.

A better approach is to provide agents with fully isolated, controlled, and potentially sandboxed services. A key challenge is that few are willing to deploy and maintain duplicate infrastructure. A large database like MusicBrainz also demands large resources. Hosting a single instance on AWS RDS can easily exceed $500/month, making multiple deployments cost-prohibitive.
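The isolation idea can be illustrated at toy scale with SQLite from the Python standard library, standing in for PostgreSQL. Each agent session gets its own throwaway copy of a source database via sqlite3’s Connection.backup, so a destructive or careless query in one sandbox cannot touch the source or other sessions. The artist table and the make_source and sandbox helpers are hypothetical; at MusicBrainz scale you would restore a dump into a dedicated service, as described below.

```python
import sqlite3


def make_source() -> sqlite3.Connection:
    # Hypothetical miniature "production" database.
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE artist (id INTEGER PRIMARY KEY, name TEXT)")
    src.executemany("INSERT INTO artist (name) VALUES (?)",
                    [("Artist A",), ("Artist B",)])
    src.commit()
    return src


def sandbox(src: sqlite3.Connection) -> sqlite3.Connection:
    """Return an independent in-memory copy of src for one agent session."""
    copy = sqlite3.connect(":memory:")
    src.backup(copy)  # copies the whole database into the new connection
    return copy


source = make_source()
agent_db = sandbox(source)

# A careless agent query wipes its own sandbox...
agent_db.execute("DELETE FROM artist")
agent_db.commit()

# ...but the source is untouched.
print(agent_db.execute("SELECT COUNT(*) FROM artist").fetchone()[0])  # 0
print(source.execute("SELECT COUNT(*) FROM artist").fetchone()[0])    # 2
```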

Metaflow SessionService

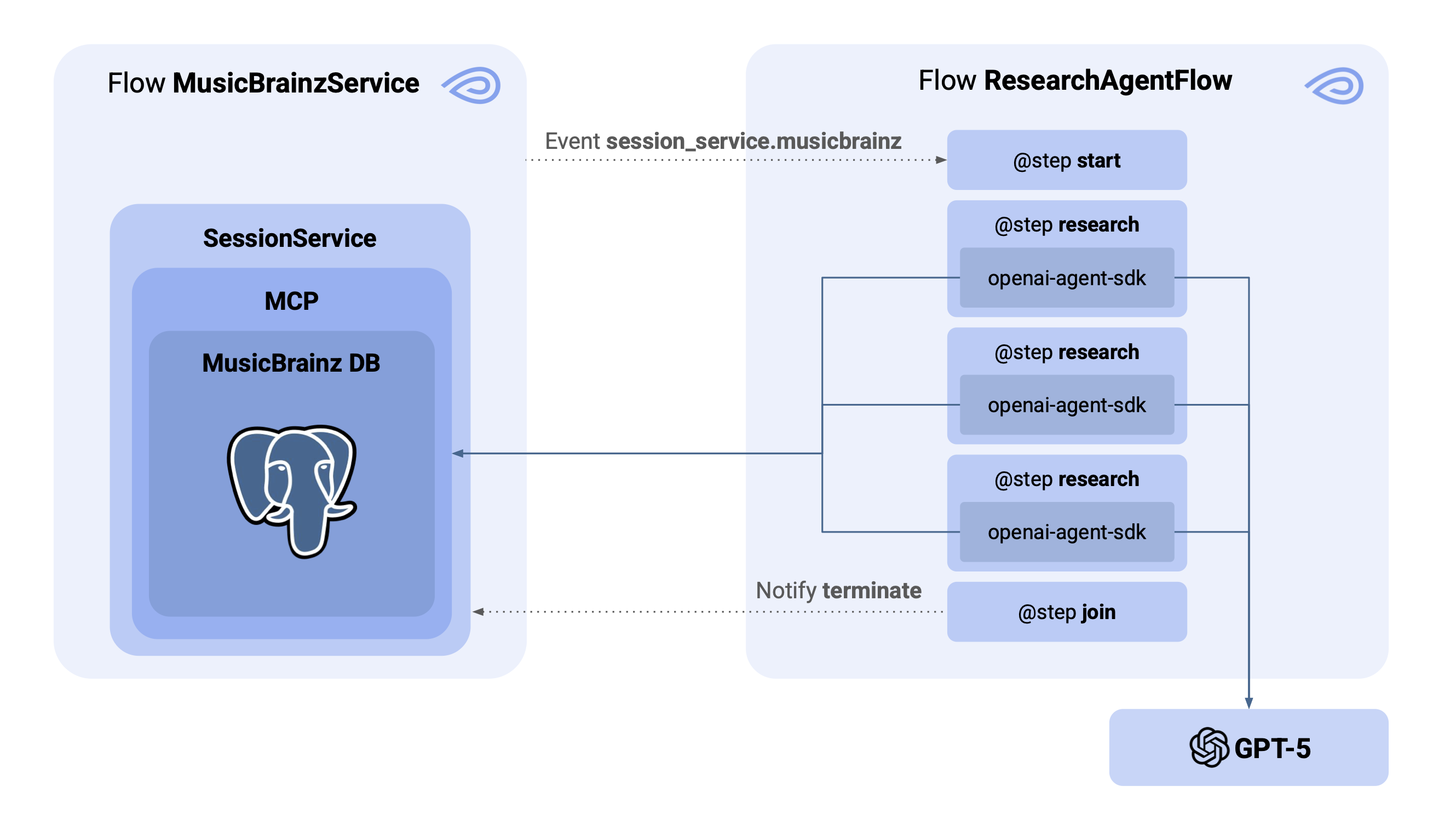

To demonstrate how Metaflow can address the need for ephemeral services wrapped in MCP endpoints, we introduce a helper library for Metaflow, SessionService. The library is responsible for setting up and supervising services, such as a PostgreSQL database with Google’s MCP Toolbox in front of it, so that agents can access them on the fly.

Here’s what it takes to set up a full database and an MCP service for it in a Metaflow step, and broadcast their availability to agents:

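```python
from metaflow import conda, kubernetes, step, timeout

# SessionService and self.download_db come from the example's own code,
# not from Metaflow itself.
@kubernetes(cpu=12, memory=100_000, disk=80_000)
@conda(packages={'postgresql': '17.4'})
@timeout(hours=5)
@step
def start(self):
    self.download_db('db.dump')
    with SessionService('musicbrainz') as ss:
        # Announce the MCP endpoint to downstream agents.
        ss.broadcast({'mcp_port': 9999})
        # Keep the services alive, polling every 120 seconds; wait() returns
        # a truthy value once consumers signal they are done.
        while True:
            if ss.wait(120):
                print("Shutting down the service")
                break
            print("Service is still alive...")
    self.next(self.end)
```

This snippet leverages a number of features in Metaflow: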

- We use @kubernetes to request a sufficiently large instance for the database. The compute backend autoscales instances up and down on demand.

- We use @conda to install PostgreSQL on the fly. Additionally, by leveraging Fast Bakery, we can get a complete container image created in seconds, without having to worry about building and hosting container images.

- We leverage Metaflow’s fast S3 client to load a large database dump in seconds.

- The SessionService and its client are implemented as custom decorators and mutators.

- We broadcast the service availability to all agents using event triggering.

As a result, we are able to set up a full clone of the massive MusicBrainz database on the fly, securely within our private environment, which alleviates auth concerns. Restoring the database of 200M rows and 375 tables from scratch takes about 10 minutes. A benefit of this approach is that you can scale services both vertically (just increase @resources) and horizontally to match the scale of your use case.

After the MCP service is up and running, we broadcast an event containing the MCP server address, which triggers downstream consumers, in our case, ResearchAgentFlow:

The ResearchAgentFlow then spins up the desired number of agents, which start communicating with the database over MCP, as well as with the LLM. Once all the agents have completed, the client notifies MusicBrainzService that it is no longer needed, which tears down all the services as well as the instance behind them, so that we don’t pay for idle services.
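The lifecycle described here (broadcast an address, let consumers work, then signal shutdown) can be modeled in-process with the Python standard library. This is a toy stand-in, not SessionService itself: MockSessionService and its discover and release methods are hypothetical, while broadcast and wait are shaped after the calls in the snippet above. A queue stands in for event-based broadcasting, and threading.Event.wait(timeout) returns True once the event is set and False on timeout, which matches the polling loop.

```python
import queue
import threading


class MockSessionService:
    """Hypothetical in-process stand-in for the service/client handshake."""

    def __init__(self) -> None:
        self._announcements = queue.Queue()  # stands in for the event bus
        self._shutdown = threading.Event()

    # Service side
    def broadcast(self, info: dict) -> None:
        self._announcements.put(info)

    def wait(self, timeout: float) -> bool:
        return self._shutdown.wait(timeout)  # True once shutdown is requested

    # Client side
    def discover(self, timeout: float = 5.0) -> dict:
        return self._announcements.get(timeout=timeout)

    def release(self) -> None:
        self._shutdown.set()  # "not needed anymore"


def service(ss: MockSessionService, log: list) -> None:
    ss.broadcast({"mcp_port": 9999})
    while True:
        if ss.wait(0.05):
            log.append("Shutting down the service")
            break
        log.append("Service is still alive...")


def client(ss: MockSessionService, n_agents: int) -> list:
    port = ss.discover()["mcp_port"]
    results = [f"agent {i} used port {port}" for i in range(n_agents)]  # the "research"
    ss.release()
    return results


ss = MockSessionService()
log: list = []
svc = threading.Thread(target=service, args=(ss, log))
svc.start()
results = client(ss, n_agents=3)
svc.join()
print(results[0])  # agent 0 used port 9999
print(log[-1])     # Shutting down the service
```

Unlike a real event bus, a single queue delivers each announcement to only one consumer, which is enough for this one-client illustration.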

The SessionService pattern can be used to expose any tools and services over MCP. Take a look at the code and adapt it to your needs. Contributions are welcome!

Every agent deserves a stable home

Metaflow not only provides a stable foundation for your agentic systems but also powers the rest of the stack: from maintaining up-to-date RAG pipelines to fine-tuning custom LLMs to back your agents. Agentic systems are, after all, software systems, integrated with other software systems, and they shouldn’t be treated as an exotic island. Metaflow gives you the building blocks to assemble complete AI systems with composable, stable, and developer-friendly APIs and adapt the stack to your specific needs.

If you are curious to learn more about building agents with Metaflow - or using the new conditional and recursive steps for other use cases - join over 5k AI and ML engineers on the Metaflow Community Slack. While you can certainly ask your favorite AI co-pilot to craft flows for you, there is no substitute for a vibrant community of helpful and happy humans!
